Elkeid - Bytedance Cloud Workload Protection Platform

Elkeid is an open-source solution that meets the security requirements of various workloads, such as hosts, containers and K8s, and serverless. It is derived from ByteDance's internal best practices.

As enterprises grow, multi-cloud and cloud-native deployments, with many kinds of workloads running side by side, have become increasingly common. We wanted a single solution that could meet the security requirements of all these workloads, and so Elkeid was born.

Introduction

Elkeid has the following key capabilities:

-

Elkeid not only has the traditional HIDS (Host Intrusion Detection System) ability for host layer intrusion detection and malicious file identification, but also can well identify malicious behaviors in containers. The host can meet the anti-intrusion security requirements of the host and the container on it, and the powerful kernel-level data collection capability at the bottom of Elkeid can satisfy the desire of most security analyst for host-level data.

-

For the running business Elkeid has the RASP capability and can be injected into the business process for anti-intrusion protection, not only the operation and maintenance personnel do not need to install another Agent, but also the business does not need to restart.

-

For K8s itself, Elkeid supports collection to K8s Audit Log to perform intrusion detection and risk identification on the K8s system.

-

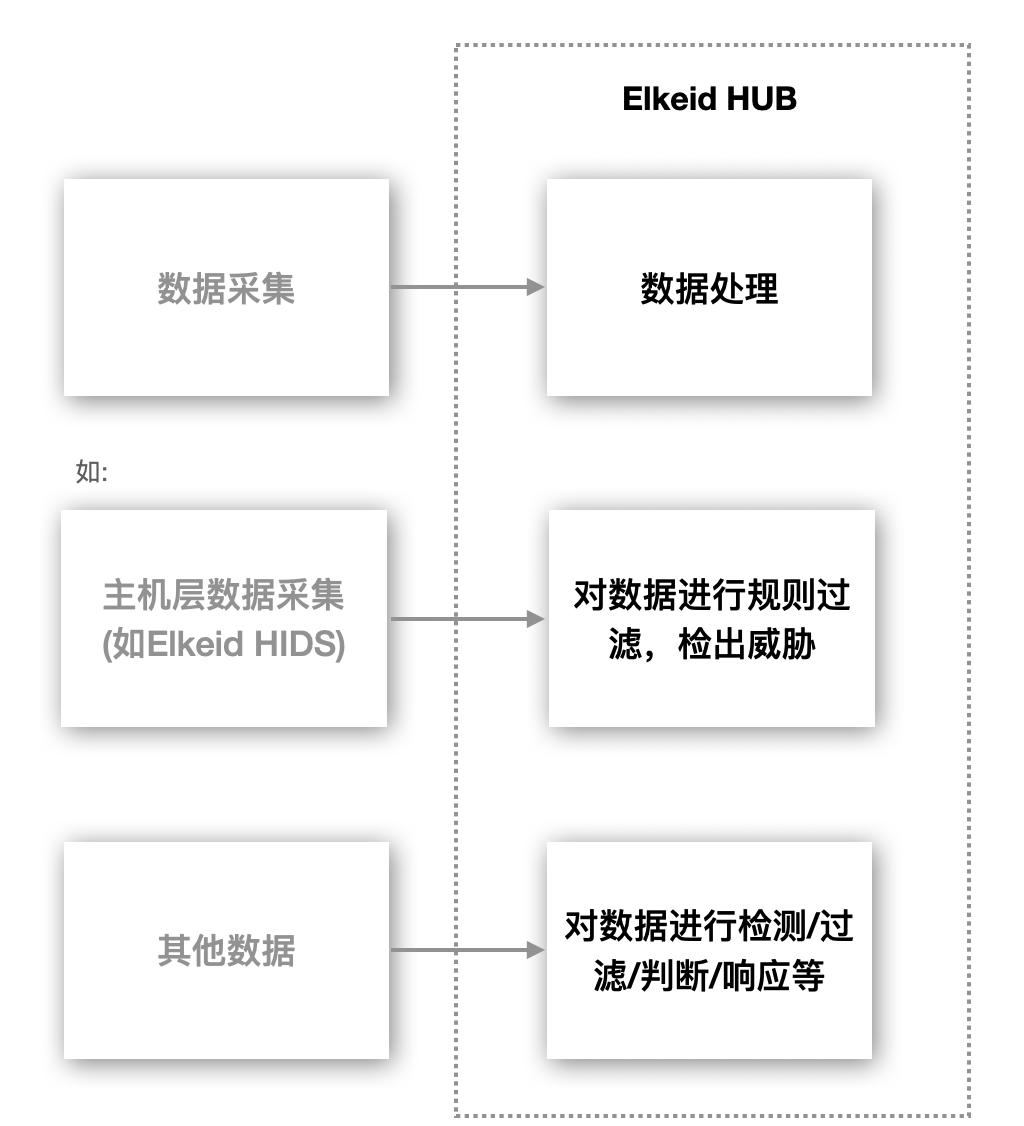

Elkeid's rule engine Elkeid HUB can also be well linked with external multiple systems.

Elkeid integrates these capabilities into one platform to meet the complex security requirements of different workloads, while also correlating capabilities across components. Moreover, every component has been tested against ByteDance's massive data volumes and years of real-world attack-and-defense practice.

Elkeid Community Edition Description

Note that the open-source version of Elkeid differs from the full version. The current open-source capabilities mainly include:

- All on-device capabilities, i.e., on-device data/asset/partial collection, kernel-mode data collection, the RASP probes, etc., which are consistent with ByteDance's internal version;

- All backend capabilities, i.e., Agent Center, service discovery, etc., which are consistent with ByteDance's internal version;

- A community edition rule engine, Elkeid HUB, provided as an example with a small number of policies;

- A community edition of Elkeid Console and some supporting capabilities.

Therefore, to achieve complete anti-intrusion and risk perception capabilities, you also need to build policies on Elkeid HUB and perform secondary processing of the data collected by Elkeid.

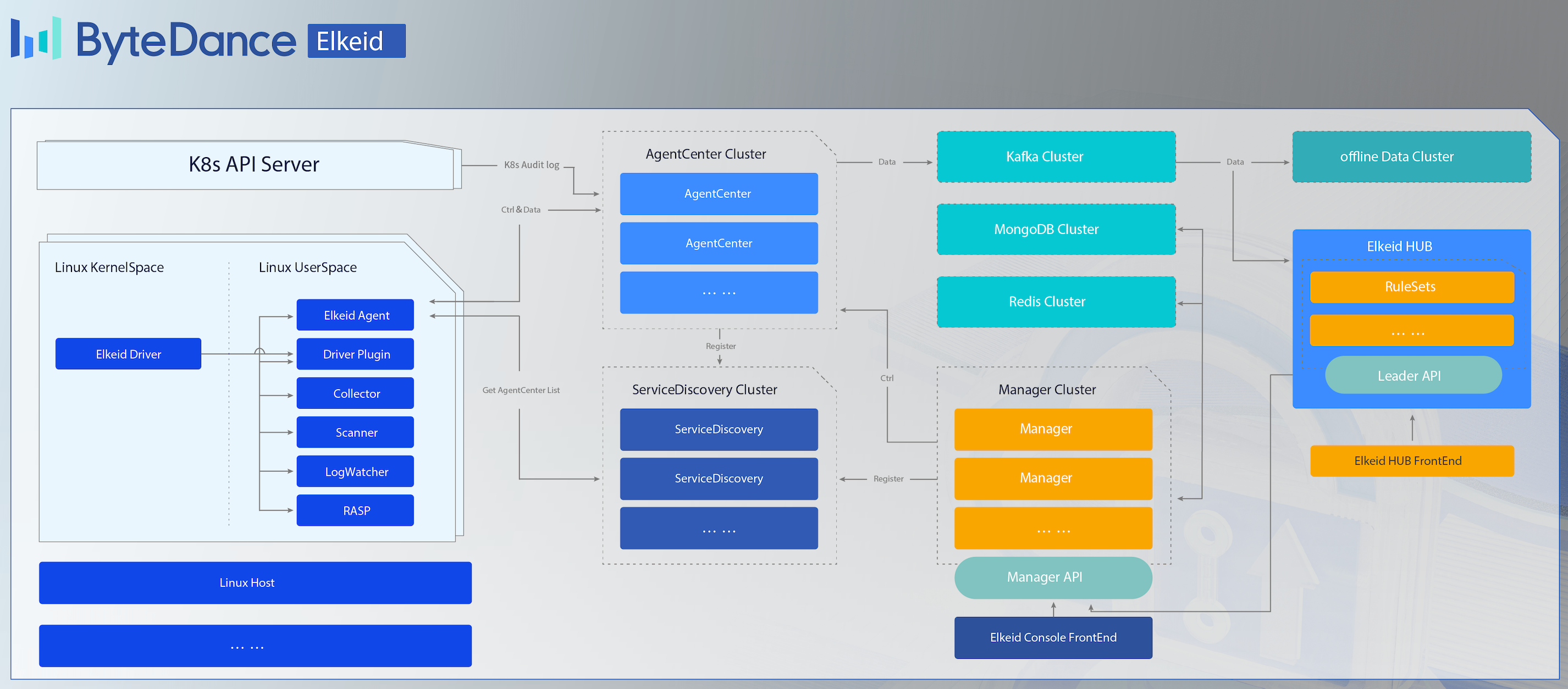

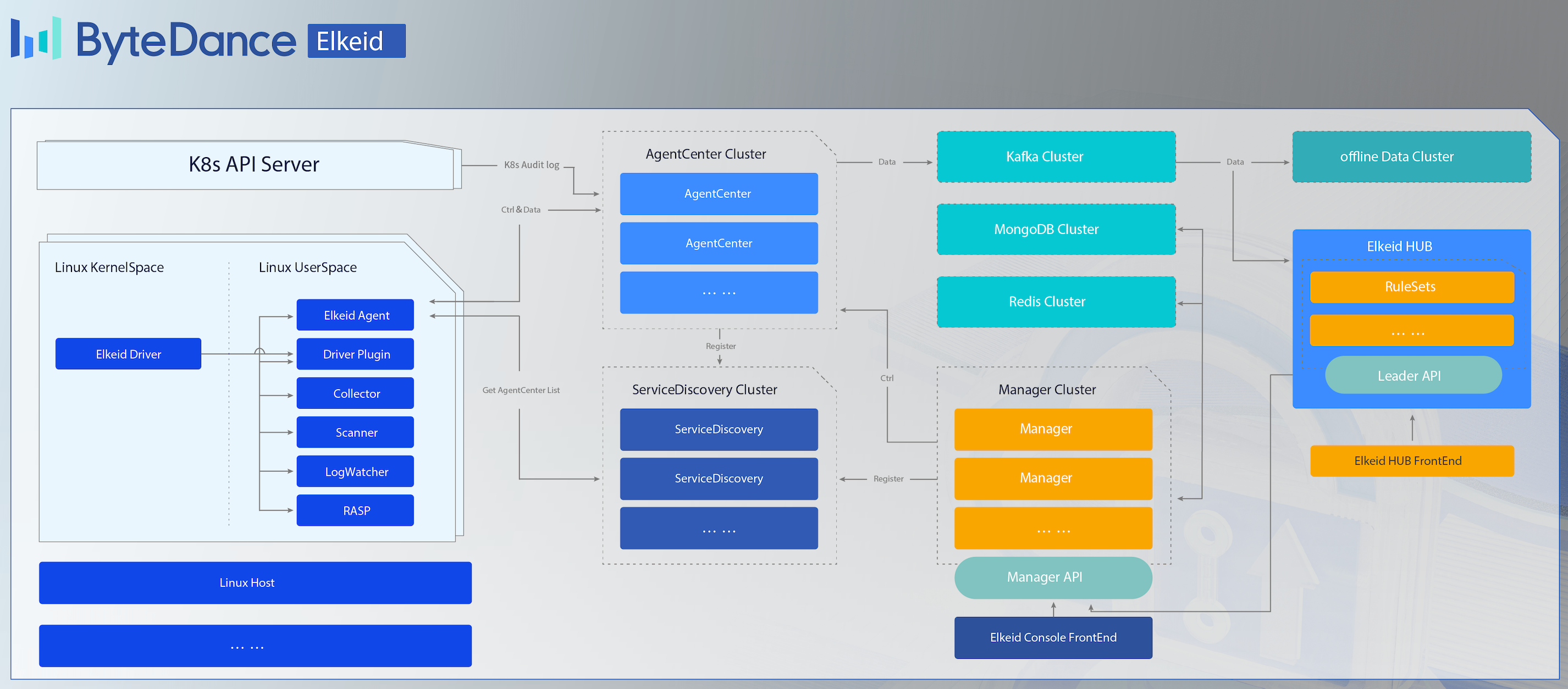

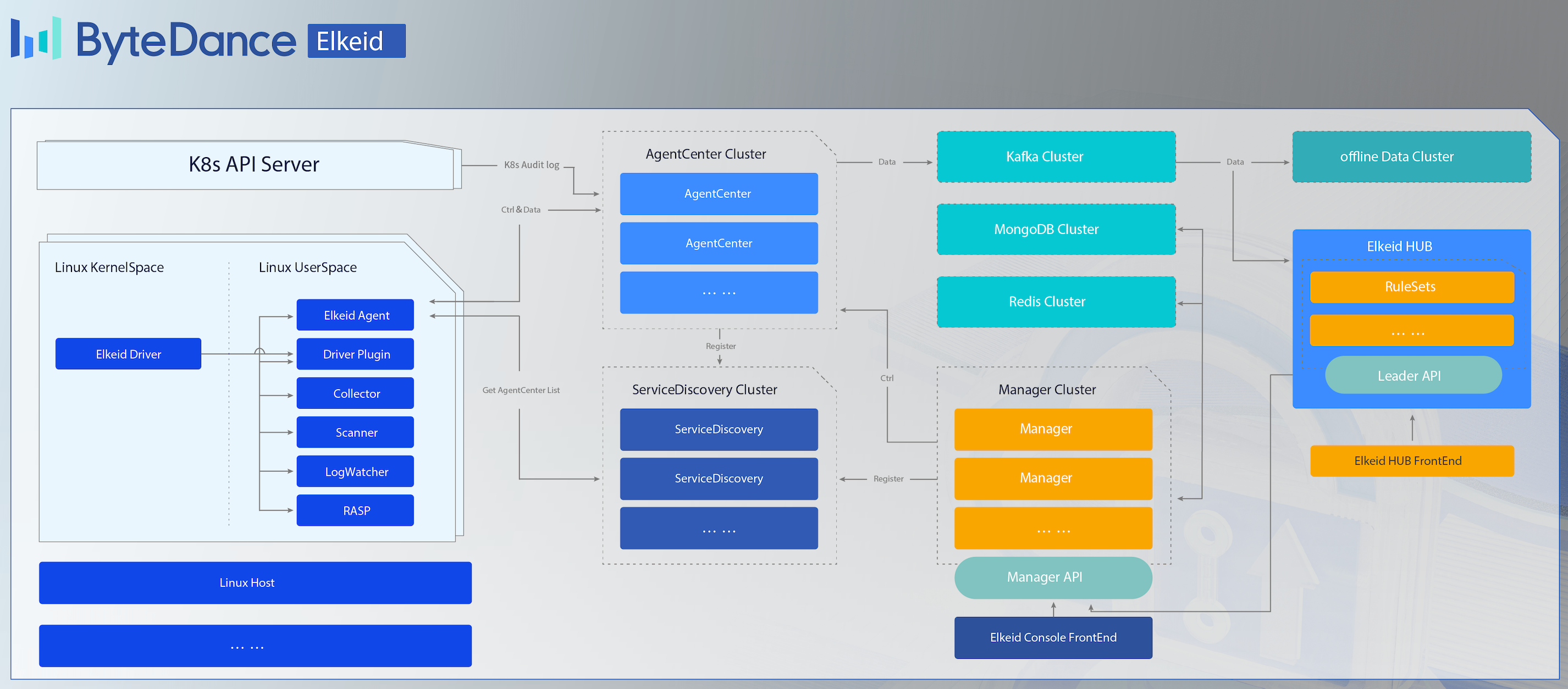

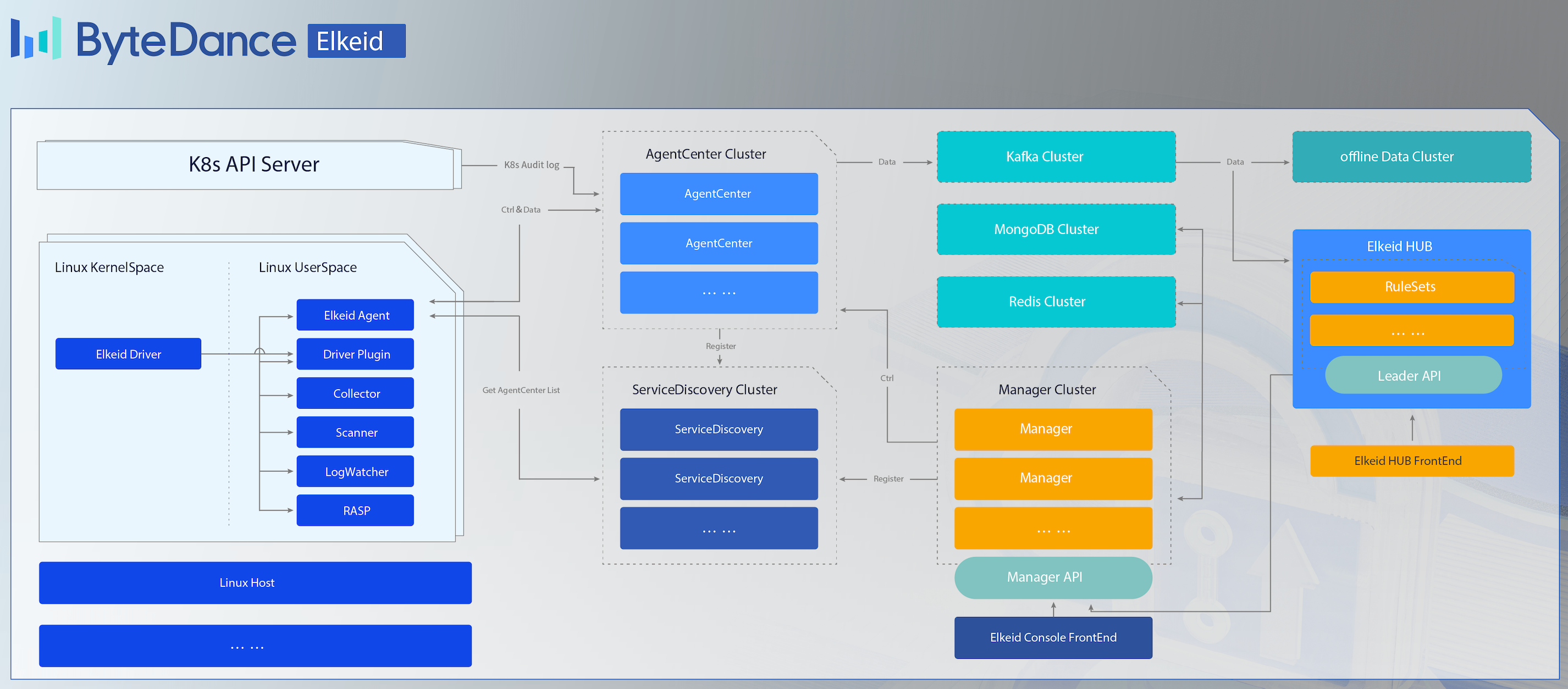

Elkeid Architecture

Elkeid Host Ability

- Elkeid Agent: Linux userspace agent, responsible for managing the various plugins and communicating with Elkeid Server.

- Elkeid Driver: collects data in the Linux kernel, supports container runtimes, and communicates with the Elkeid Driver Plugin.

- Elkeid RASP: runtime probes for CPython, Golang, JVM, NodeJS, and PHP, supporting dynamic injection into the runtime.

- Elkeid Agent Plugin List

  - Driver Plugin: Responsible for managing Elkeid Driver and processing the driver data.

  - Collector Plugin: Responsible for collecting asset/log information on the Linux system, such as the user list, crontab, package information, etc.

  - Journal Watcher: Responsible for monitoring systemd logs; currently supports collecting and reporting SSH-related logs.

  - Scanner Plugin: Responsible for static detection of malicious files on the host; currently supports YARA.

  - RASP Plugin: Responsible for managing RASP components and processing the data collected by RASP.

  - Baseline Plugin: Responsible for detecting baseline risks based on baseline check policies.

- Elkeid Data Format

- Elkeid Data Usage Tutorial

Elkeid Backend Ability

- Elkeid AgentCenter: Responsible for communicating with the Agents, collecting Agent data, performing simple processing on it, and writing it into the MQ. It is also responsible for Agent management, including Agent upgrades, configuration changes, task distribution, etc.

- Elkeid ServiceDiscovery: Each backend component must periodically register and synchronize its service information with this component, so that the instances of every service module are visible to each other and can communicate directly.

- Elkeid Manager: Responsible for managing the entire backend and providing the related query and management APIs.

- Elkeid Console: Elkeid front end.

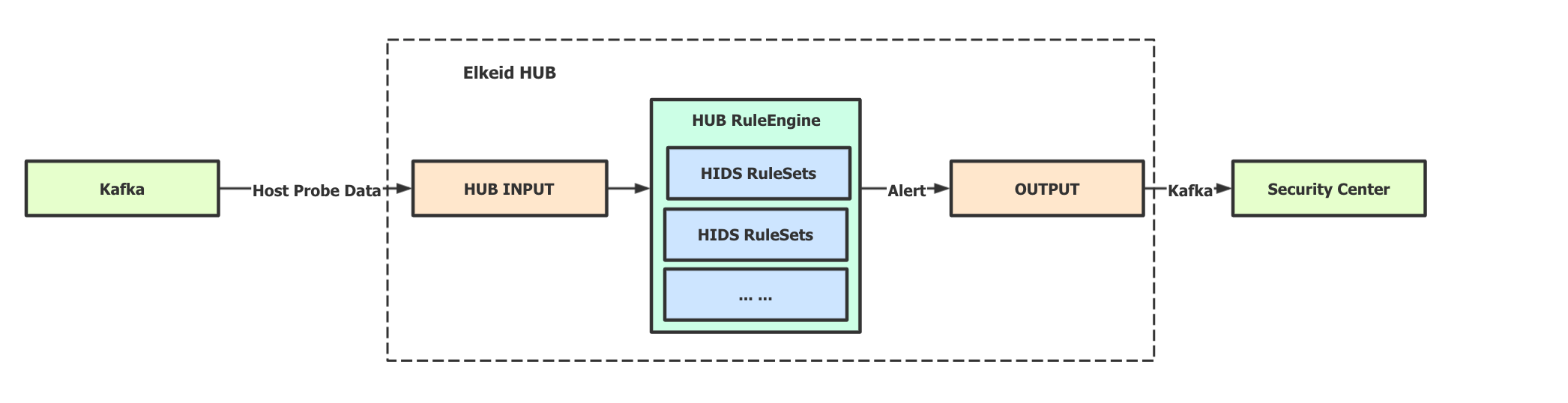

- Elkeid HUB: Elkeid HIDS rule engine.

Elkeid Function List

| Ability List | Elkeid Community Edition | Elkeid Enterprise Edition |

|---|---|---|

| Linux runtime data collection | :white_check_mark: | :white_check_mark: |

| RASP probe | :white_check_mark: | :white_check_mark: |

| K8s Audit Log collection | :white_check_mark: | :white_check_mark: |

| Agent control plane | :white_check_mark: | :white_check_mark: |

| Host Status and Details | :white_check_mark: | :white_check_mark: |

| Extortion bait | :ng_man: | :white_check_mark: |

| Asset collection | :white_check_mark: | :white_check_mark: |

| Asset Collection Enhancements | :ng_man: | :white_check_mark: |

| K8s asset collection | :white_check_mark: | :white_check_mark: |

| Exposure and Vulnerability Analysis | :ng_man: | :white_check_mark: |

| Host/Container Basic Intrusion Detection | few samples | :white_check_mark: |

| Host/Container Behavioral Sequence Intrusion Detection | :ng_man: | :white_check_mark: |

| RASP Basic Intrusion Detection | few samples | :white_check_mark: |

| RASP Behavioral Sequence Intrusion Detection | :ng_man: | :white_check_mark: |

| K8S Basic Intrusion Detection | few samples | :white_check_mark: |

| K8S Behavioral Sequence Intrusion Detection | :ng_man: | :white_check_mark: |

| K8S Threat Analysis | :ng_man: | :white_check_mark: |

| Alarm traceability (behavior traceability) | :ng_man: | :white_check_mark: |

| Alarm traceability (resident traceability) | :ng_man: | :white_check_mark: |

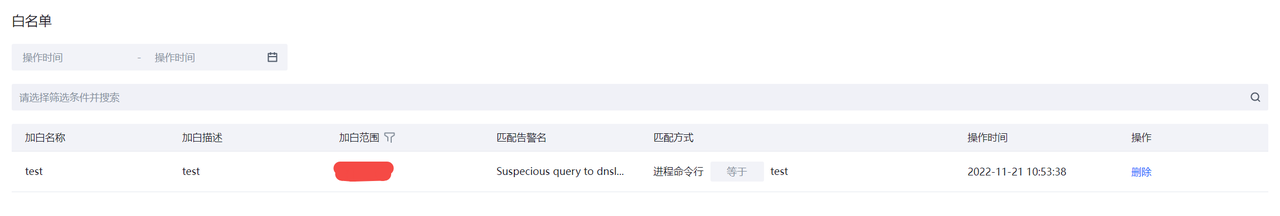

| Alert Whitelist | :white_check_mark: | :white_check_mark: |

| Multi-alarm aggregation capability | :ng_man: | :white_check_mark: |

| Threat Repsonse (Process) | :ng_man: | :white_check_mark: |

| Threat Repsonse (Network) | :ng_man: | :white_check_mark: |

| Threat Repsonse (File) | :ng_man: | :white_check_mark: |

| File isolation | :ng_man: | :white_check_mark: |

| Vulnerability discovery | few vuln info | :white_check_mark: |

| Vulnerability information hot update | :ng_man: | :white_check_mark: |

| Baseline check | few baseline rules | :white_check_mark: |

| Application Vulnerability Hotfix | :ng_man: | :white_check_mark: |

| Virus scan | :white_check_mark: | :white_check_mark: |

| User behavior log analysis | :ng_man: | :white_check_mark: |

| Agent Plugin management | :white_check_mark: | :white_check_mark: |

| System monitoring | :white_check_mark: | :white_check_mark: |

| System Management | :white_check_mark: | :white_check_mark: |

| Windows Support | :ng_man: | :white_check_mark: |

| Honey pot | :ng_man: | :oncoming_automobile: |

| Active defense | :ng_man: | :oncoming_automobile: |

| Cloud virus analysis | :ng_man: | :oncoming_automobile: |

| File-integrity monitoring | :ng_man: | :oncoming_automobile: |

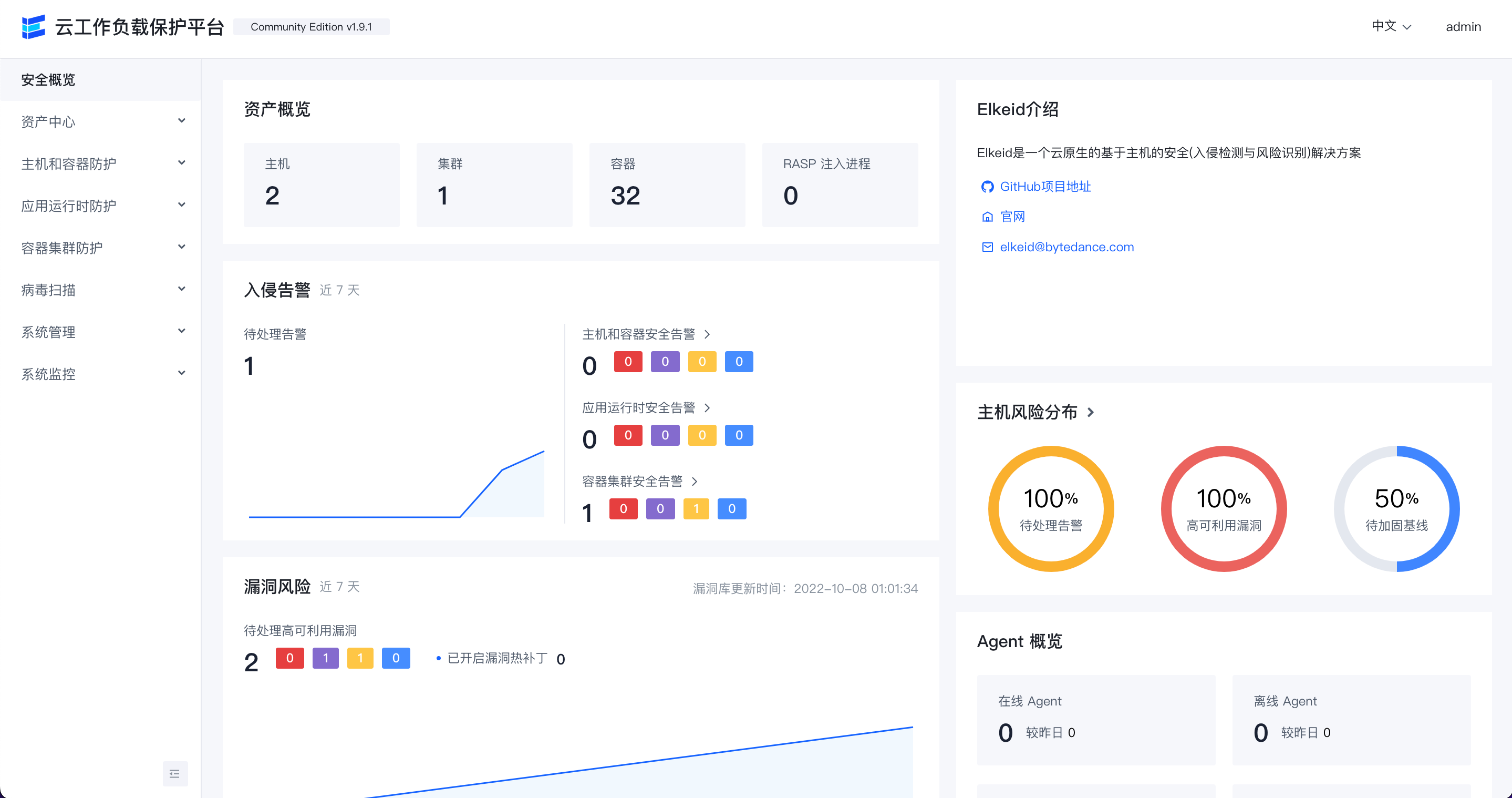

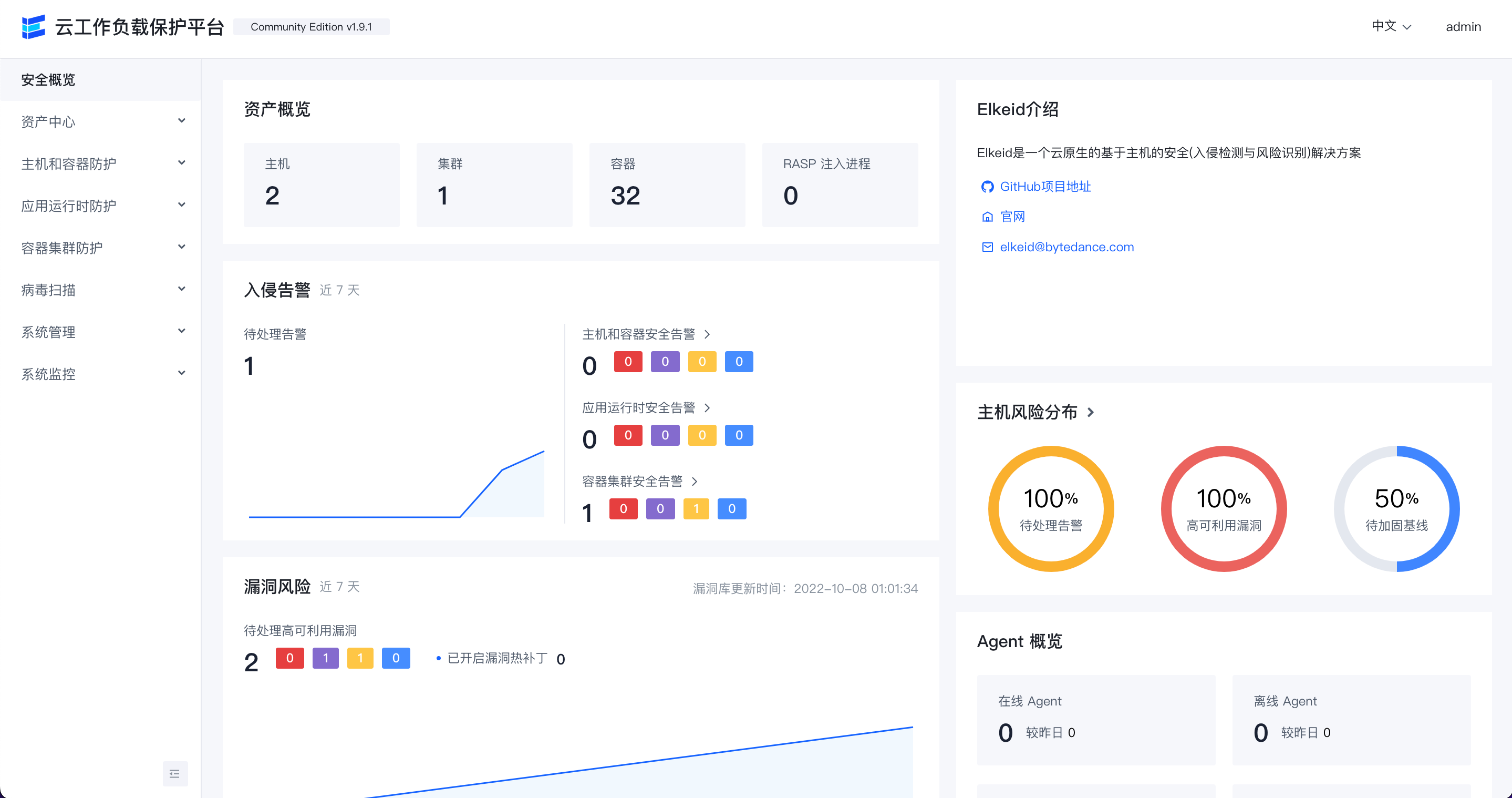

Front-end Display (Community Edition)

Security overview

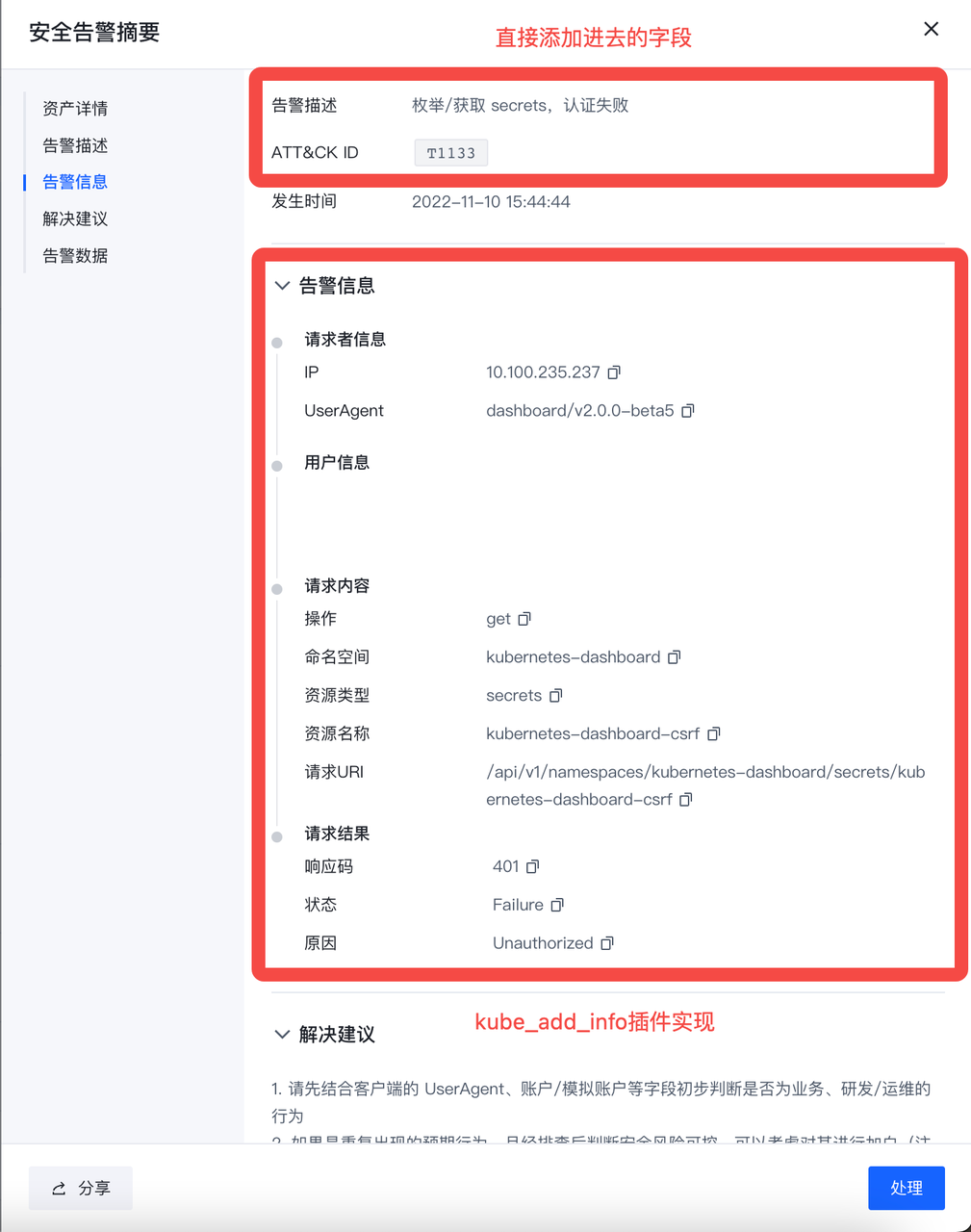

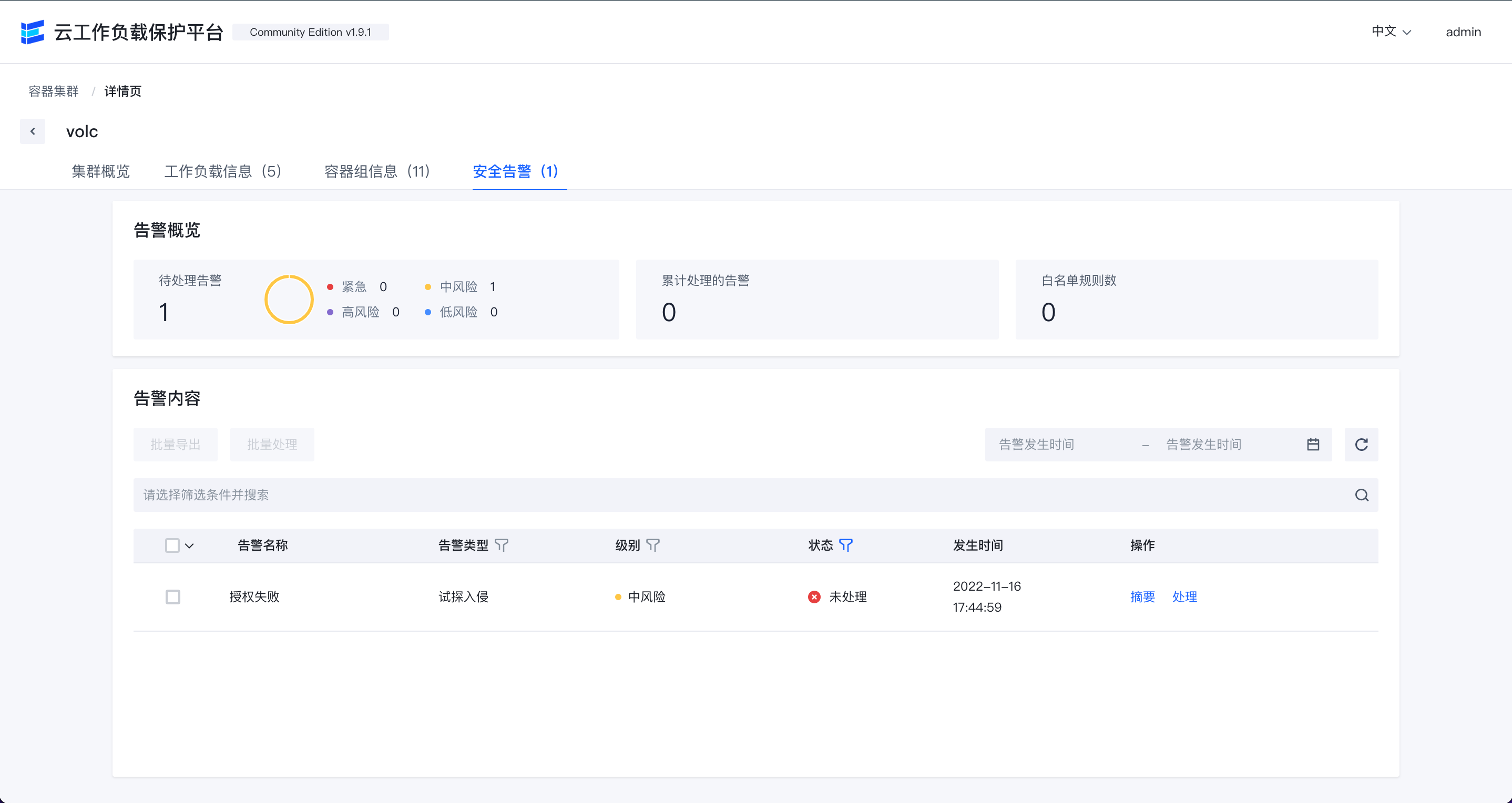

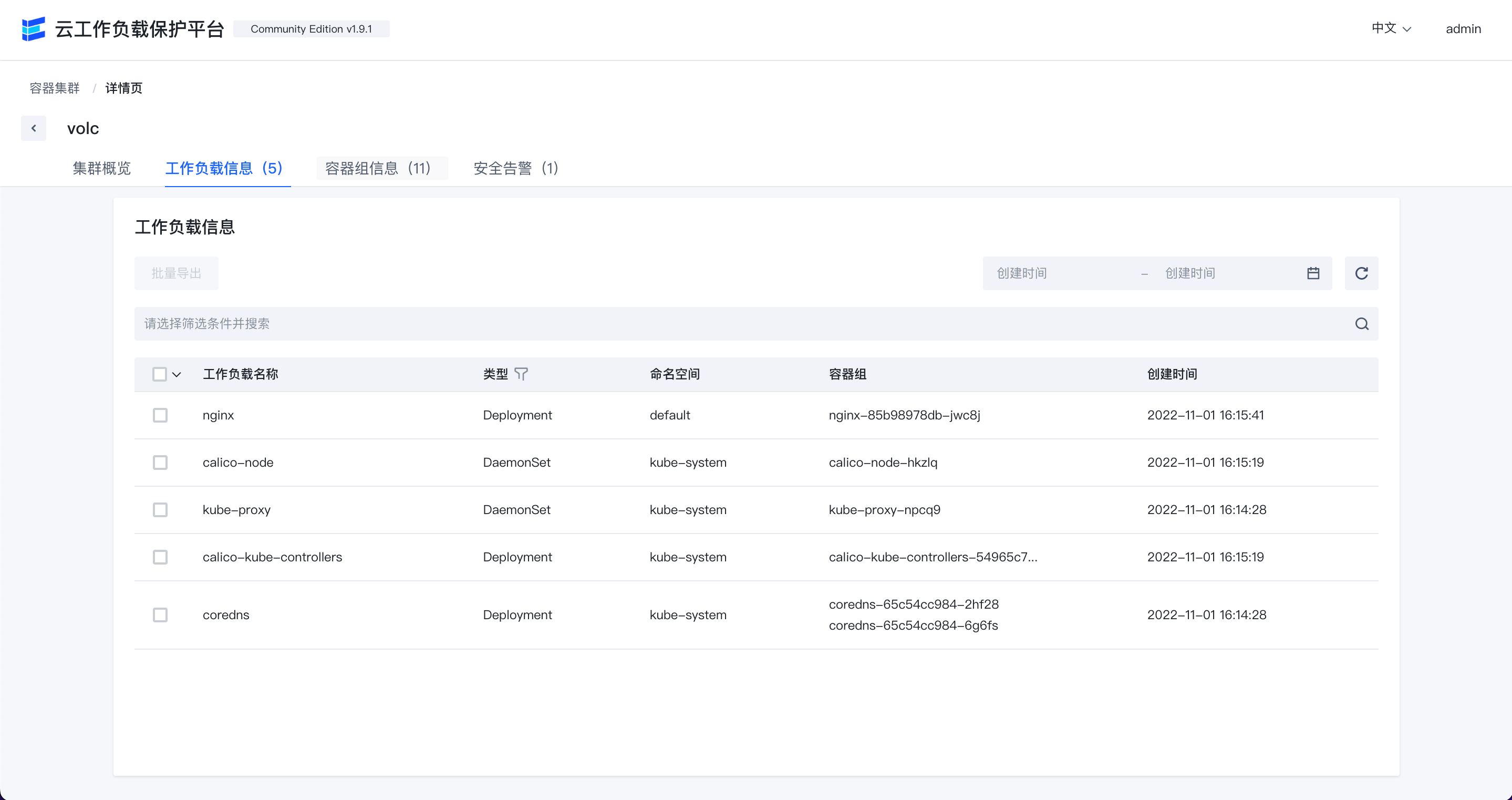

K8s security alert list

K8s pod list

Host overview

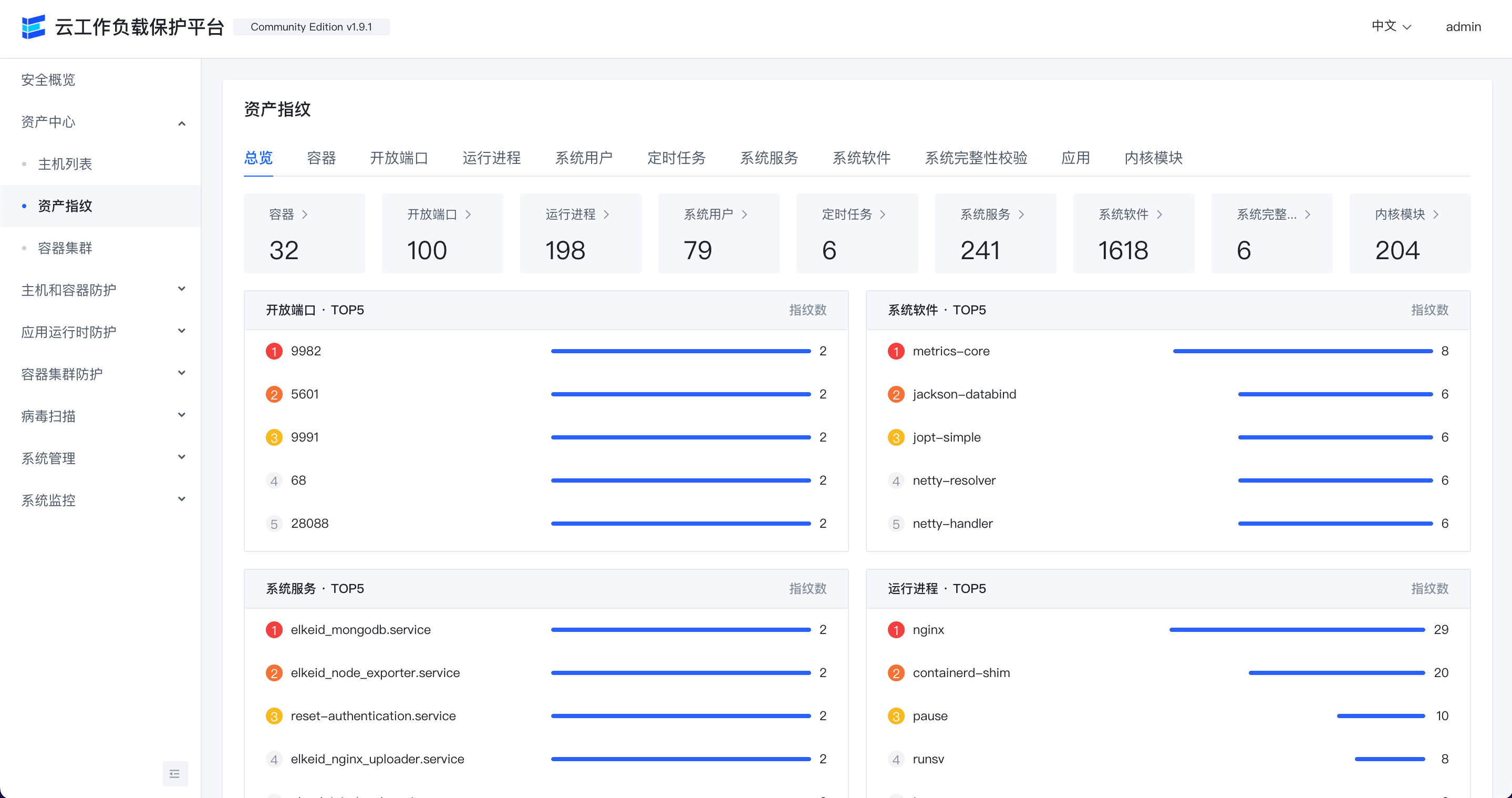

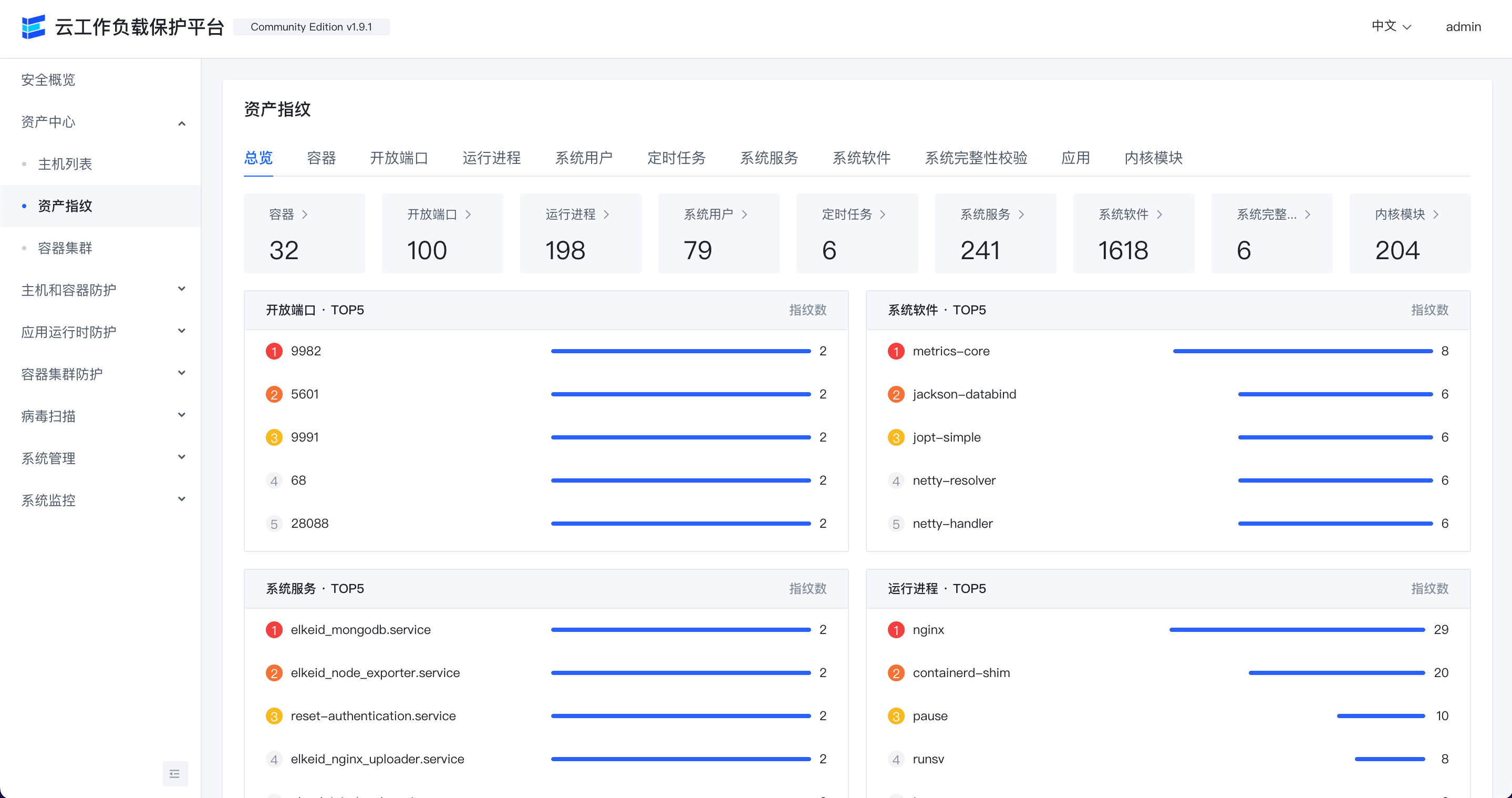

Resource fingerprint

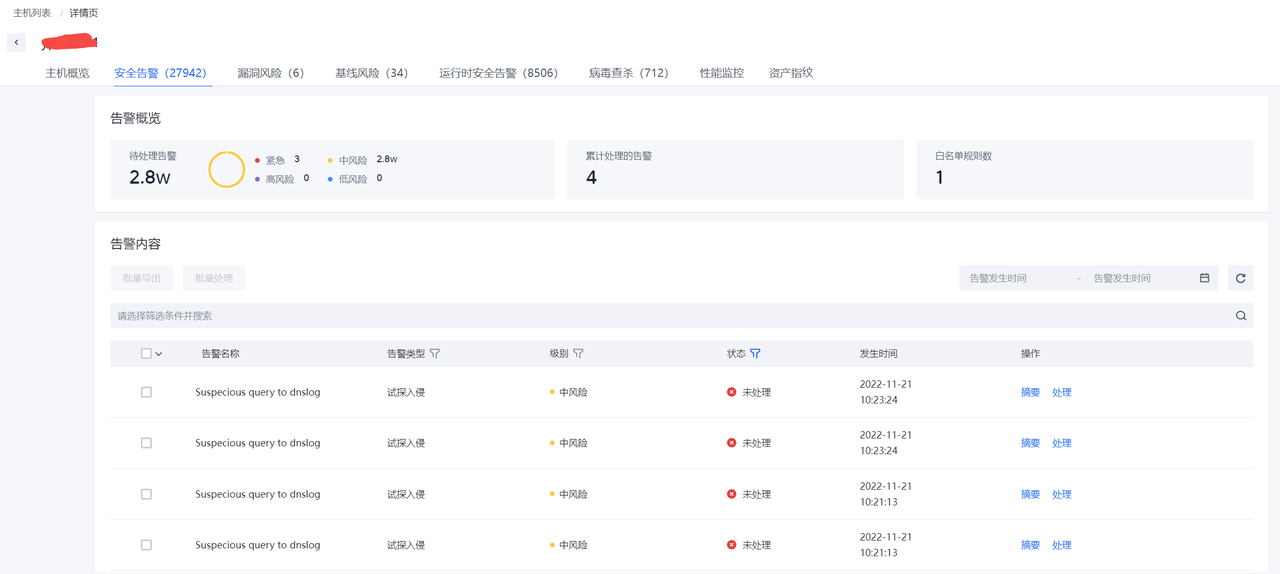

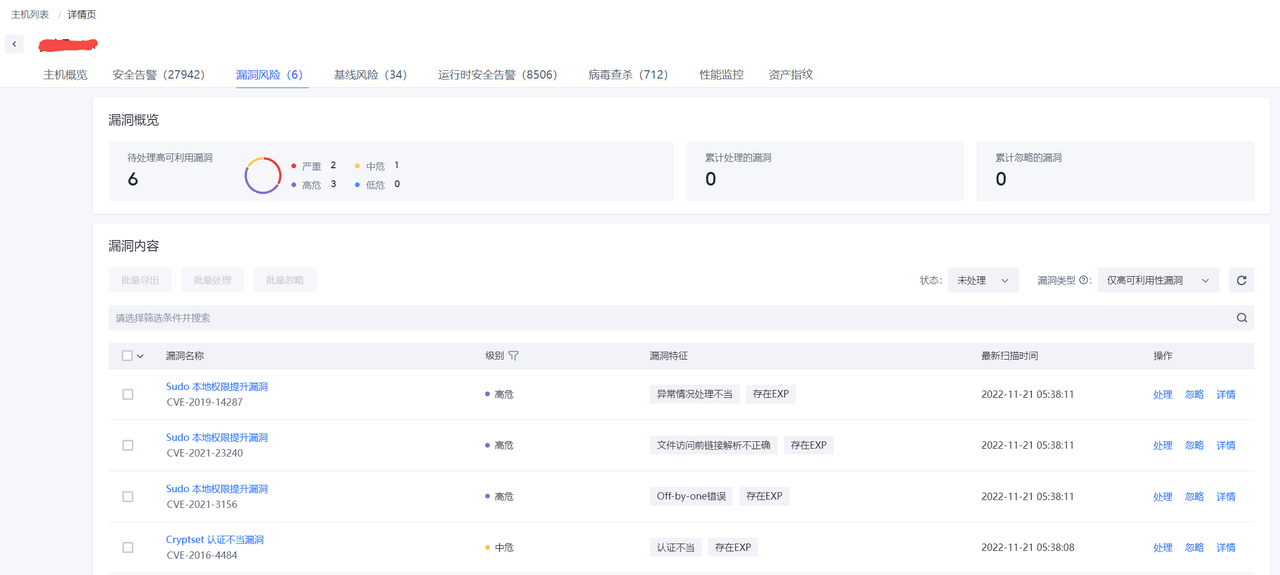

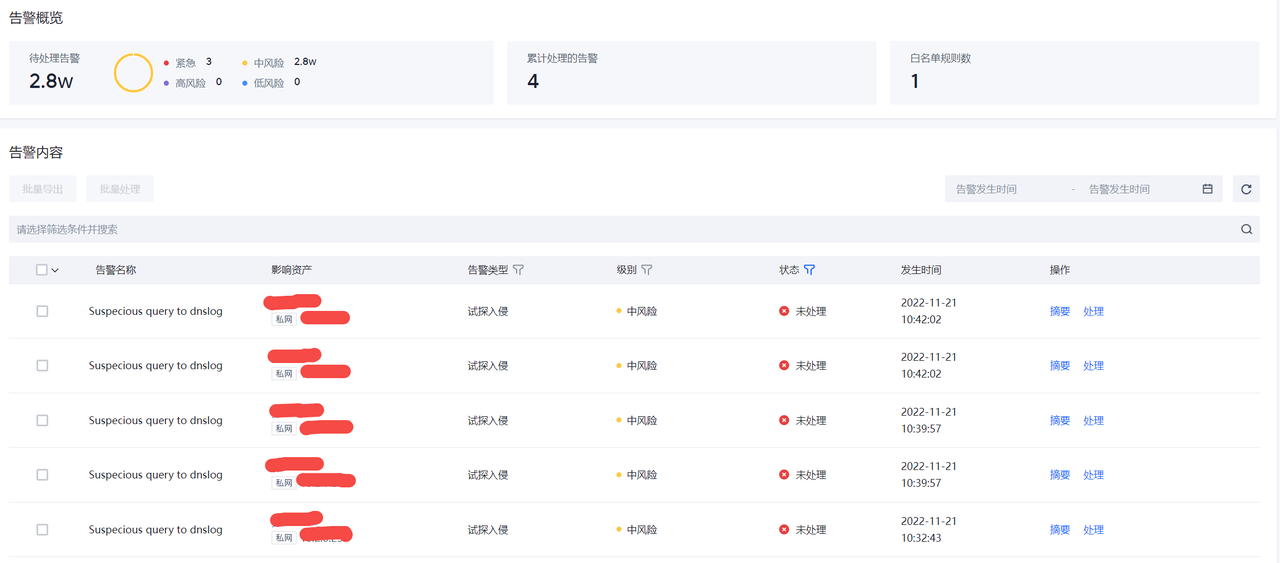

Intrusion alert overview

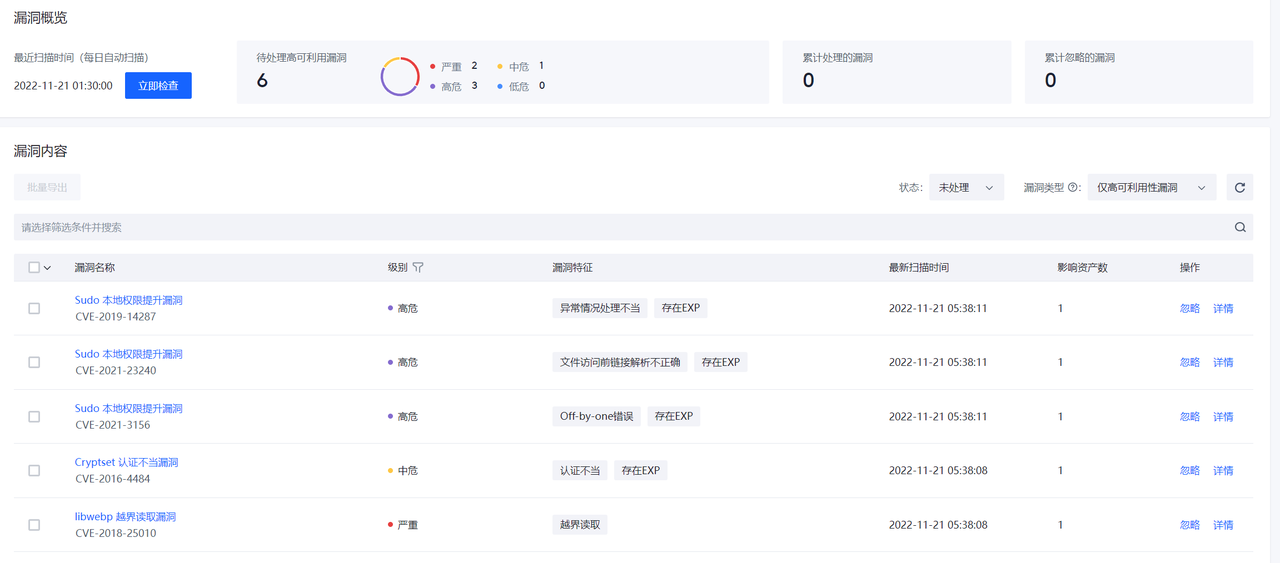

Vulnerability

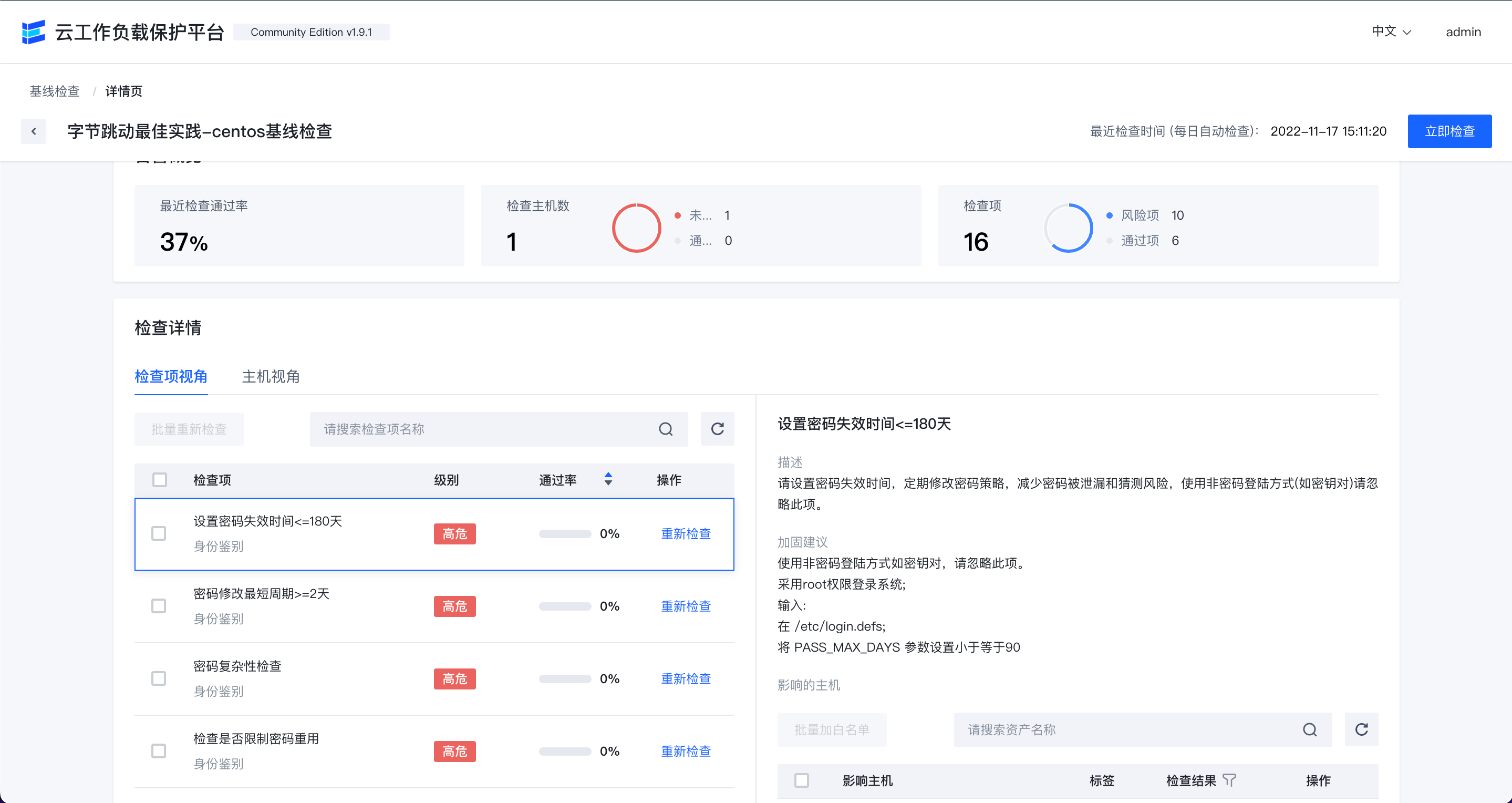

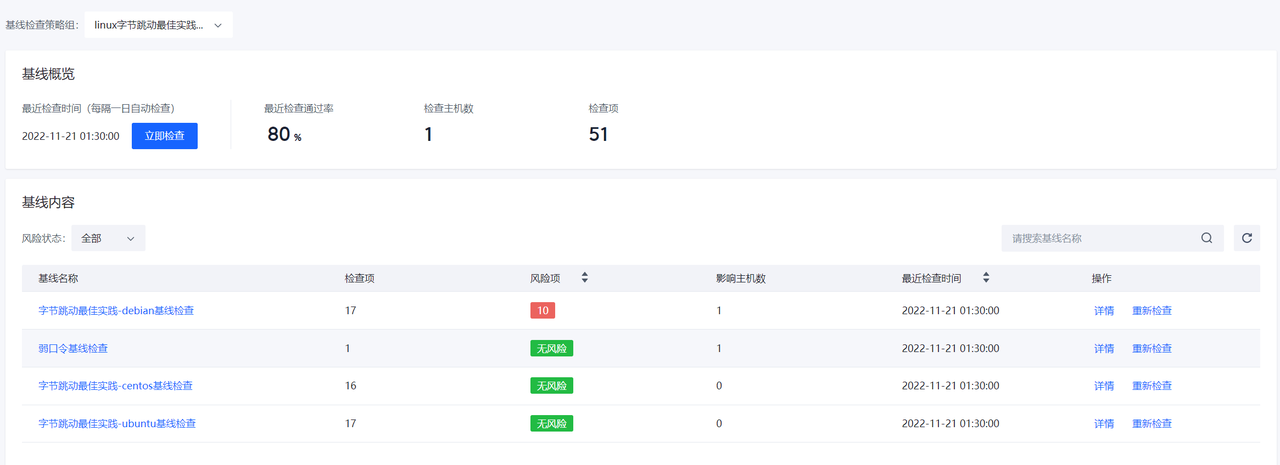

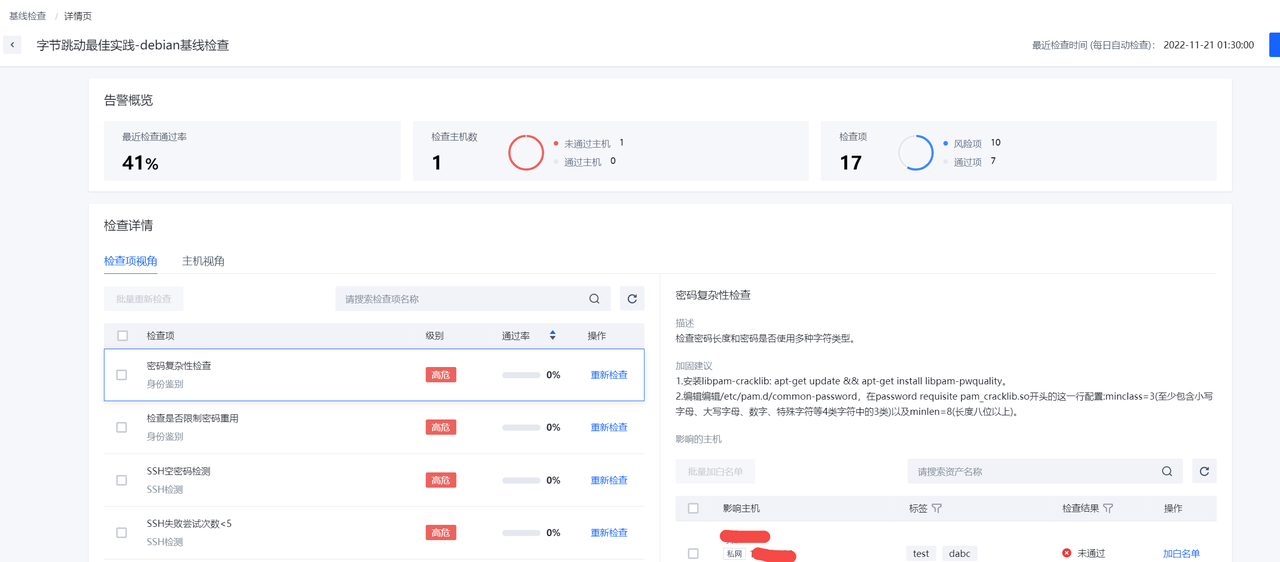

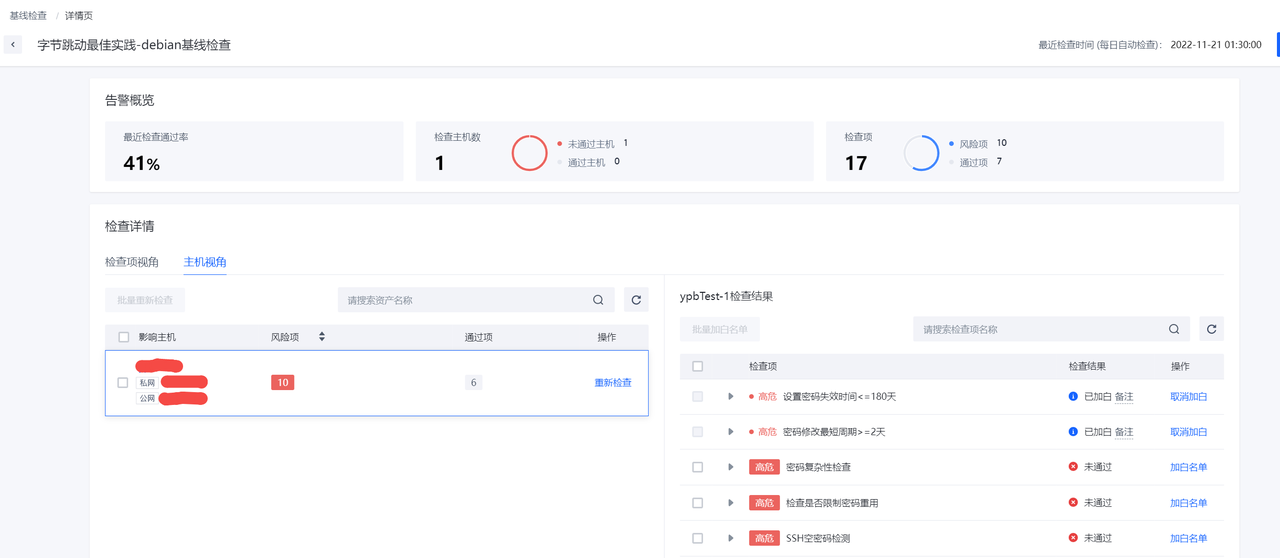

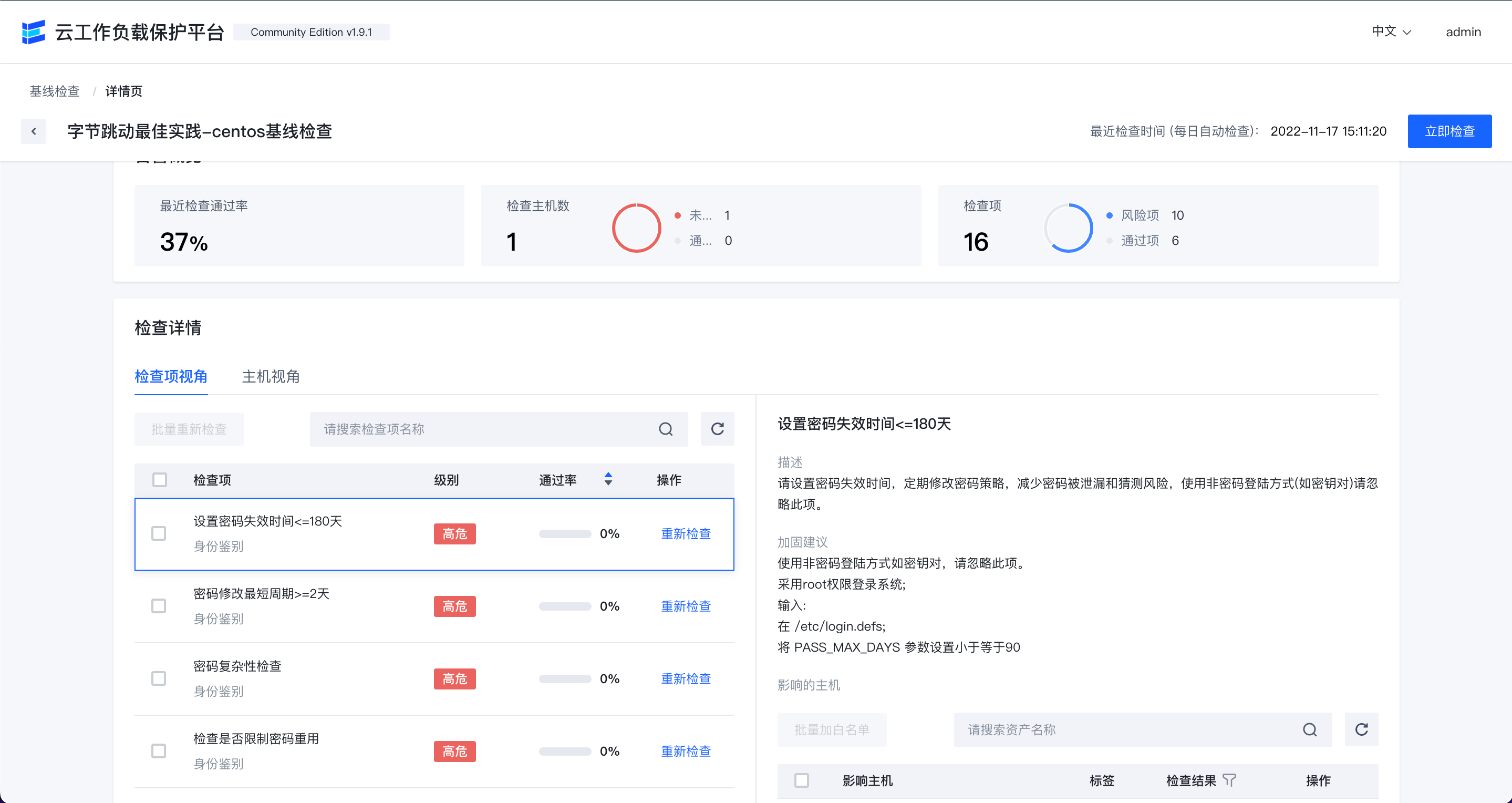

Baseline check

Virus scan

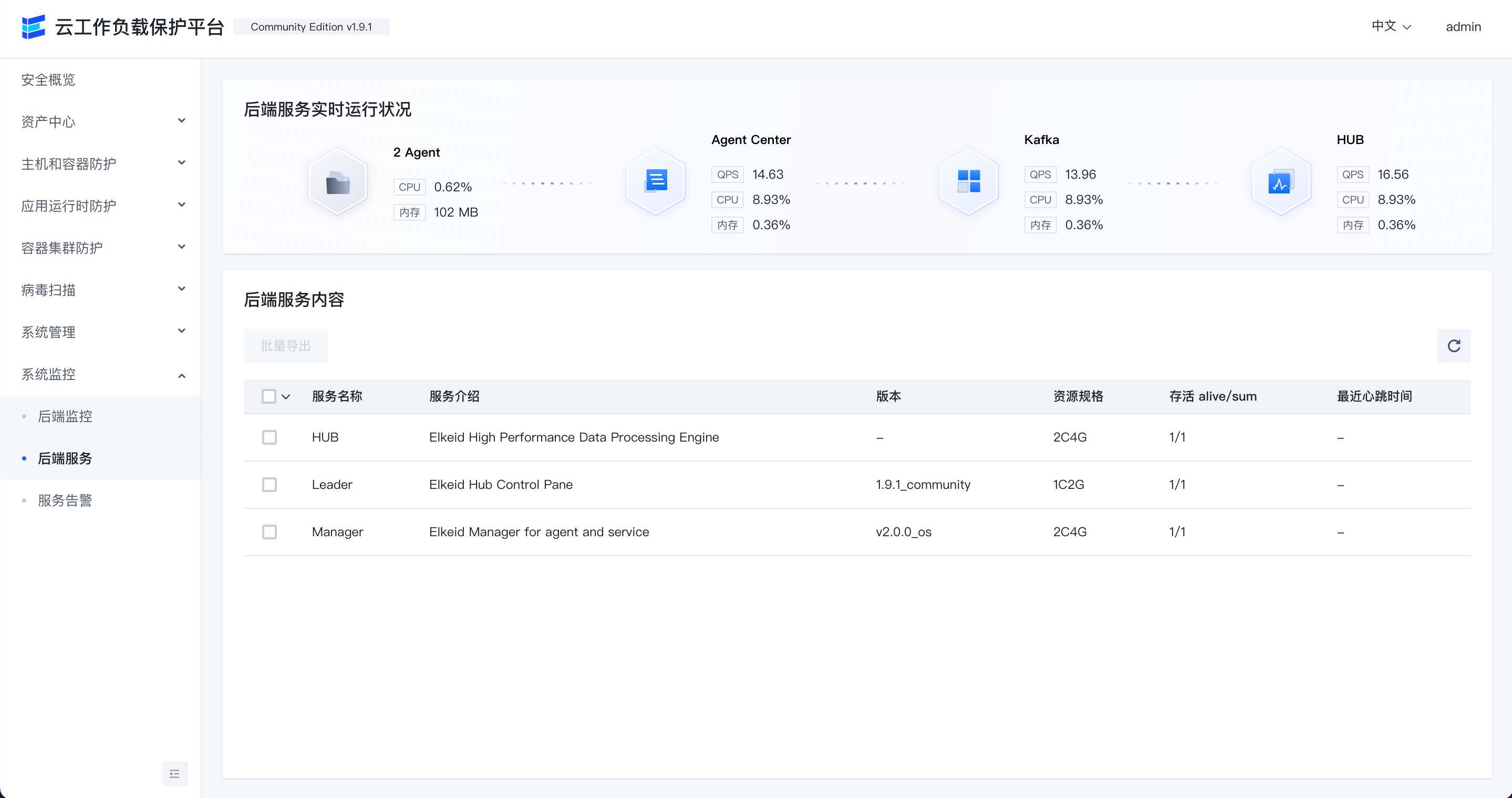

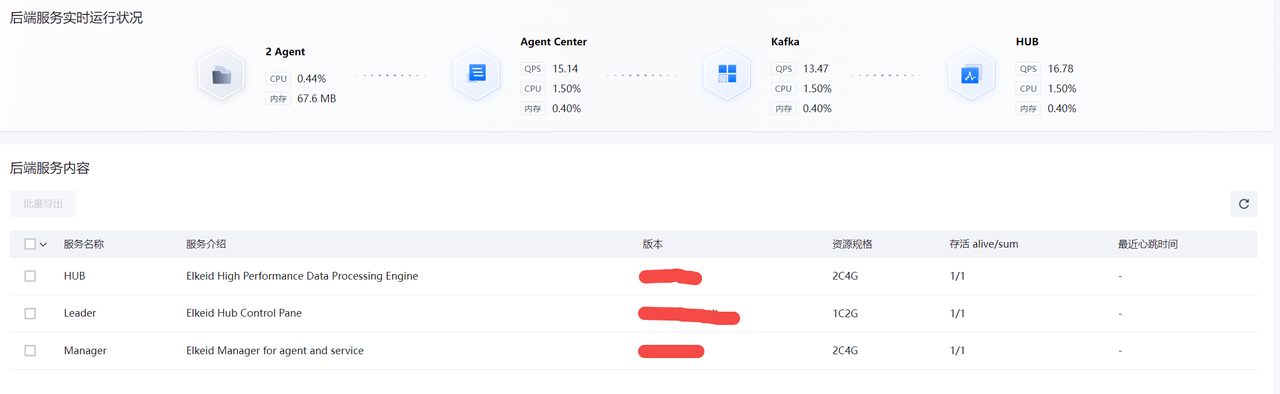

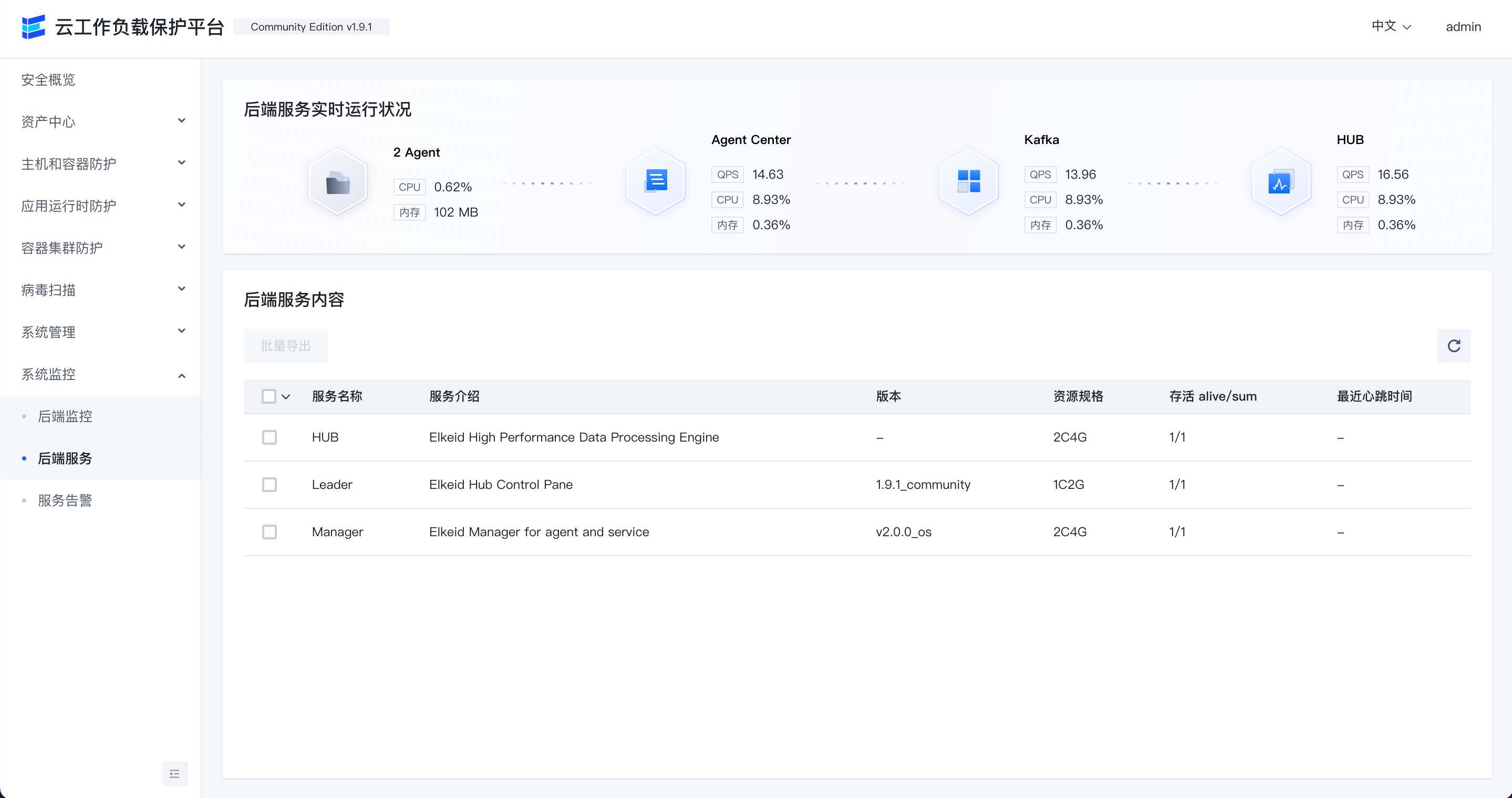

Backend hosts monitoring

Backend service monitoring

Console User Guide

Quick Start

Contact us && Cooperation

Lark Group

About Elkeid Enterprise Edition

Elkeid Enterprise Edition is available either as separate intrusion detection rule sets (e.g., HIDS, RASP, K8s) or with its full capabilities.

If you are interested in Elkeid Enterprise Edition, please contact elkeid@bytedance.com

Elkeid Docs

For more details and latest updates, see Elkeid docs.

License

- Elkeid Driver: GPLv2

- Elkeid RASP: Apache-2.0

- Elkeid Agent: Apache-2.0

- Elkeid Server: Apache-2.0

- Elkeid Console: Elkeid License

- Elkeid HUB: Elkeid License

404StarLink 2.0 - Galaxy

Elkeid has joined 404Team 404StarLink 2.0 - Galaxy

ElkeidUP

Automated deployment tool for Elkeid

Component List

Instructions

- The backend servers used for deployment must be dedicated to Elkeid.

- The backend servers used for deployment must be able to reach each other over the intranet.

- Deployment requires root privileges on the backend servers.

- The backend servers used for deployment must run CentOS 7 or later, Ubuntu 16 or later, or Debian 9 or later.

- The server that runs elkeidup must be able to run ssh root@x.x.x.x to every backend server without a password.

- Deployment must not be interrupted manually.

- Use only LAN IPs; do not use 127.0.0.1, hostnames, or public IPs.

- Do not remove the ~/.elkeidup directory.

- Do not change the passwords of any users used by the components, including the Console (Elkeid Manager).
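The LAN-IP rule in the list above can be checked before running elkeidup. The following bash function is a minimal sketch and not part of elkeidup; its name `is_lan_ipv4` is hypothetical, and it assumes that "LAN IP" means an RFC 1918 private IPv4 address (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16).

```bash
# Hypothetical pre-flight helper (not part of elkeidup).
# Succeeds only if $1 is an RFC 1918 private IPv4 address; loopback
# addresses, hostnames and public addresses all fail.
is_lan_ipv4() {
  local ip=$1 a b octet
  # Require four dot-separated decimal fields of 1-3 digits.
  [[ $ip =~ ^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$ ]] || return 1
  for octet in "${BASH_REMATCH[@]:1}"; do
    (( 10#$octet <= 255 )) || return 1
  done
  a=$((10#${BASH_REMATCH[1]}))
  b=$((10#${BASH_REMATCH[2]}))
  (( a == 10 )) && return 0                         # 10.0.0.0/8
  (( a == 172 && b >= 16 && b <= 31 )) && return 0  # 172.16.0.0/12
  (( a == 192 && b == 168 )) && return 0            # 192.168.0.0/16
  return 1
}
```

For example, `is_lan_ipv4 "$ip" || echo "$ip is not a LAN IP"` rejects 127.0.0.1, hostnames, and public addresses before they reach config.yaml.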

Notice on the Auto-download Service for Missing Kernel Drivers

In this open-source version, we have integrated a service that automatically downloads kernel driver files for kernel versions missing from the pre-compiled list.

Service background: Elkeid Driver runs in kernel mode. Because a kernel module is tightly bound to the kernel version it is loaded into, the driver must match the running kernel version exactly. We cannot use the client host's resources to compile ko files on it when the agent is installed, so the release package ships drivers precompiled for the kernels of major Linux distributions to cover general cases. There are currently 3435 precompiled ko files in total, but two problems remain. First, the list cannot be updated in real time: when major Linux distributions release new kernel updates, we do not have enough manpower to keep up with them promptly. Second, you may be running your own Linux kernel build. To address this, we provide a function that automatically downloads missing precompiled kernel drivers. Its main purpose is to let our engineers know which specific kernel versions are in use, so that the release can be updated as soon as possible.

If you agree to enable this service, we also collect some basic operating information, so that we can prioritize scheduling for users with different needs and reasonably evaluate resource usage. The email address you enter is only used to distinguish the source; a real email address or any nickname can be used. The specific information collected is as follows:

- The kernel version and server architecture (only arm64 or amd64; no other CPU or machine information is involved).

- The number of agent connections on the Agent Center, collected every 30 minutes.

- The QPS of the agents on the Agent Center, covering both send and receive, collected every 30 minutes as a 30-minute average.

- The HUB input QPS, collected every 30 minutes as a 30-minute average.

- Redis QPS, collected every 30 minutes as a 30-minute average.

- Redis memory usage, collected every 30 minutes as a real-time value.

- The QPS produced to and consumed from Kafka, collected every 30 minutes as a 30-minute average.

- MongoDB QPS, collected every 30 minutes as a 30-minute average.

If you do not agree to enable this service, you still have access to all the pre-compiled ko files included in the release package, and all other functions are unaffected.

To do so, download ko_1.7.0.9.tar.xz from the release page and use it to replace package/to_upload/agent/component/driver/ko.tar.xz. During deployment, the ko files are decompressed into the /elkeid/nginx/ElkeidAgent/agent/component/driver/ko directory.

You can enable the related function during the elkeidup deployment. The related configuration can also be found in the elkeidup_config.yaml file in the conf directory under the manager's working directory. If you enable this service during deployment but need to disable it later, set report.enable_report in elkeidup_config.yaml to false and restart the manager.
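Disabling the report can be scripted as below. This is a hypothetical helper (`disable_report` is not an elkeidup command); it assumes elkeidup_config.yaml nests `enable_report` under a top-level `report:` key, as the name report.enable_report suggests, and it keeps a `.bak` copy of the original file.

```bash
# Hypothetical helper: set report.enable_report to false in an
# elkeidup_config.yaml, assuming the key is indented under "report:".
disable_report() {
  local cfg=$1
  [[ -f $cfg ]] || { echo "config not found: $cfg" >&2; return 1; }
  cp -- "$cfg" "$cfg.bak"   # keep the original file
  awk '
    # A non-indented, non-comment line starts a new top-level block.
    /^[^[:space:]#]/ { in_report = ($0 ~ /^report:/) }
    # Inside report:, rewrite only the enable_report value.
    in_report && /^[[:space:]]+enable_report:/ {
      sub(/enable_report:.*/, "enable_report: false")
    }
    { print }
  ' "$cfg.bak" > "$cfg"
}
```

A possible invocation, assuming the manager's conf directory is /elkeid/manager/conf and the service is named elkeid_manager as in the systemctl commands later in this document: `disable_report /elkeid/manager/conf/elkeidup_config.yaml && systemctl restart elkeid_manager`.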

The code that collects this information and downloads KO files from the Elkeid service is all open source. The relevant functions are:

- The on/off switch is in the InitReport() function of internal/monitor/report.go.

- The collected items are defined in the heartbeatDefaultQuery structure of internal/monitor/report.go.

- The automatic ko download is implemented in the SendAgentDriverKoMissedMsg function of biz/handler/v6/ko.go.

Elkeid Deployment (Recommended)

Elkeid HUB Deployment

Elkeid Upgrading and Expansion

Raw Data Usage Tutorial

Elkeid Full Deployment

1. Stand-alone Docker rapid deployment (recommended for stand-alone testing environments)

1.1. Import the image

wget https://github.com/bytedance/Elkeid/releases/download/v1.9.1.4/elkeidup_image_v1.9.1.tar.gz.00

wget https://github.com/bytedance/Elkeid/releases/download/v1.9.1.4/elkeidup_image_v1.9.1.tar.gz.01

wget https://github.com/bytedance/Elkeid/releases/download/v1.9.1.4/elkeidup_image_v1.9.1.tar.gz.02

wget https://github.com/bytedance/Elkeid/releases/download/v1.9.1.4/elkeidup_image_v1.9.1.tar.gz.03

cat elkeidup_image_v1.9.1.tar.gz.* > elkeidup_image_v1.9.1.tar.gz

docker load -i elkeidup_image_v1.9.1.tar.gz
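The `cat elkeidup_image_v1.9.1.tar.gz.*` step silently produces a corrupt archive if one part failed to download. A hypothetical `merge_parts` helper (not part of the release) that refuses to merge when the numbered sequence has a gap might look like this:

```bash
# Hypothetical helper: merge <name>.00, <name>.01, ... into <name>,
# refusing to run if a numbered part in the sequence is missing.
merge_parts() {
  local name=$1 parts=() i=0 part p
  # Collect consecutive parts starting at .00.
  while part=$(printf '%s.%02d' "$name" "$i"); [[ -f $part ]]; do
    parts+=("$part")
    i=$((i + 1))
  done
  (( ${#parts[@]} > 0 )) || { echo "no parts found for $name" >&2; return 1; }
  # Any existing part not collected above lies beyond a gap.
  for p in "$name".[0-9][0-9]; do
    [[ -e $p ]] || continue
    [[ " ${parts[*]} " == *" $p "* ]] || { echo "gap before $p" >&2; return 1; }
  done
  cat -- "${parts[@]}" > "$name"
}
```

Usage: `merge_parts elkeidup_image_v1.9.1.tar.gz && docker load -i elkeidup_image_v1.9.1.tar.gz`. The check cannot detect a missing final part, so still compare the number of parts with the release page.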

1.2. Run the container

docker run -d --name elkeid_community \

--restart=unless-stopped \

-v /sys/fs/cgroup:/sys/fs/cgroup:ro \

-p 8071:8071 -p 8072:8072 -p 8080:8080 \

-p 8081:8081 -p 8082:8082 -p 8089:8080 -p 8090:8090 \

--privileged \

elkeid/all-in-one:v1.9.1

1.3. Set the external IP

Use this machine's own IP; 127.0.0.1 cannot be used.

docker exec -it elkeid_community bash

cd /root/.elkeidup/

# This command will start interactive input

./elkeidup public {ip}

./elkeidup agent init

./elkeidup agent build

./elkeidup agent policy create

cat ~/.elkeidup/elkeid_passwd

1.4. Access the front-end console and install the Agent

After a successful installation, the /root/.elkeidup/elkeid_passwd file records the passwords and associated URLs of each component.

The initial passwords are fixed when the image is built; for security, do not use them in a production environment.

| Field | Description |
|---|---|
| elkeid_console | Console account password |
| elkeid_hub_frontend | HUB front-end account password |
| grafana | Grafana account password |
| grafana | Grafana address |
| elkeid_hub_frontend | Elkeid HUB front-end address |
| elkeid_console | Elkeid Console address |
| elkeid_service_discovery | Service Discovery address |

To access elkeid_console, follow the Console instruction manual - Install configuration to install and deploy the Agent.

2. Full deployment with elkeidup

2.1. Configure password-free SSH login as root on the target machines

Even if the deployment machine is the local machine, you still need to configure password-free login to it, and login must take less than 1s. Use the following command to verify: the two date commands must print the same time.

date && ssh root@{ip} date

# The output time difference should be less than 1s
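The manual `date` comparison can also be done numerically. This is a sketch, not part of elkeidup: `within_skew` and `check_host_clock` are hypothetical names, GNU `date +%s.%N` is assumed, and the measured difference deliberately includes the SSH login time, which is what the 1s requirement above constrains.

```bash
# Hypothetical helper: succeed only if two timestamps (in seconds,
# fractions allowed) differ by less than max seconds (default 1).
within_skew() {
  local t1=$1 t2=$2 max=${3:-1}
  awk -v a="$t1" -v b="$t2" -v m="$max" \
    'BEGIN { d = a - b; if (d < 0) d = -d; exit !(d < m) }'
}

# Hypothetical wrapper: compare the local clock with a backend host over
# password-free SSH. BatchMode=yes makes ssh fail instead of prompting.
check_host_clock() {
  local ip=$1 local_t remote_t
  local_t=$(date +%s.%N)
  remote_t=$(ssh -o BatchMode=yes "root@$ip" 'date +%s.%N') || return 1
  within_skew "$local_t" "$remote_t" 1
}
```

Usage: `check_host_clock 192.168.0.10 || echo "SSH login too slow or clocks differ"`.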

2.2. Download the release package and set up the directory

- Download the release package (split into several parts) and merge the parts

wget https://github.com/bytedance/Elkeid/releases/download/v1.9.1.4/elkeidup_package_v1.9.1.tar.gz.00

wget https://github.com/bytedance/Elkeid/releases/download/v1.9.1.4/elkeidup_package_v1.9.1.tar.gz.01

wget https://github.com/bytedance/Elkeid/releases/download/v1.9.1.4/elkeidup_package_v1.9.1.tar.gz.02

cat elkeidup_package_v1.9.1.tar.gz.* > elkeidup_package_v1.9.1.tar.gz

You can also refer to Build Elkeid from Source to compile and build packages yourself.

If Elkeid was installed before, delete the /root/.elkeidup and /elkeid folders to avoid interference.

- Extract the release package and set up the directory

mkdir -p /root/.elkeidup && cd /root/.elkeidup

mv {DownloadDir}/elkeidup_package_v1.9.1.tar.gz elkeidup_package_v1.9.1.tar.gz

tar -xf elkeidup_package_v1.9.1.tar.gz

chmod a+x /root/.elkeidup/elkeidup

2.3. Generate and modify config.yaml

If this is not a stand-alone deployment, refer to the deployment resource manual to modify config.yaml.

cd /root/.elkeidup

./elkeidup init --host {ip}

mv config_example.yaml config.yaml

2.4. Deployment

cd /root/.elkeidup

# This command will start interactive input

./elkeidup deploy

2.5. Build the Agent

cd /root/.elkeidup

./elkeidup agent init

./elkeidup agent build

./elkeidup agent policy create

2.6. Access the front-end console and install the Agent

After a successful installation, the /root/.elkeidup/elkeid_passwd file records the passwords and associated URLs of each component.

| Field | Description |
|---|---|
| elkeid_console | Console account password |
| elkeid_hub_frontend | HUB front-end account password |
| grafana | Grafana account password |
| grafana | Grafana address |
| elkeid_hub_frontend | Elkeid HUB front-end address |
| elkeid_console | Elkeid Console address |
| elkeid_service_discovery | Service Discovery address |

To access elkeid_console, follow the Console instruction manual - Install configuration to install and deploy the Agent.

3. Agent installation notes

- The Driver module depends on pre-compiled ko files; for the specific support list, see: ko_list

- Normally, after the Agent is installed, it takes about 10 minutes for the Driver module to start working (this involves automatically downloading and installing the ko).

- To check whether the Driver is loaded: lsmod | grep hids_driver

- If the test machine's kernel version is not in the supported list, compile the ko file, generate its sign file (sha256), and import them into Nginx.

- If you did not agree to the declaration when running elkeidup deploy, you also need to compile the ko yourself, or download the corresponding pre-compiled ko (see the support list) and sign files from the Release page, and import them into Nginx.

3.1. Importing ko files into Nginx

The ko/sign files must be named hids_driver_1.7.0.4_{uname -r}_{arch}.ko and hids_driver_1.7.0.4_{uname -r}_{arch}.sign and placed on the corresponding Nginx server in /elkeid/nginx/ElkeidAgent/agent/component/driver/ko. Then fix the permissions with chown -R nginx:nginx /elkeid/nginx. Once the files are in place, restart the Agent.
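The naming and sign-file steps can be sketched as two small bash functions. Both names are hypothetical; the sketch assumes `{arch}` is the string printed by `uname -m` and that a sign file contains only the hex SHA-256 digest of the .ko file, so compare both assumptions with a sign file from the Release page before relying on it.

```bash
# Hypothetical helper: print hids_driver_<version>_<kernel>_<arch>.
# kernel defaults to `uname -r`; arch is ASSUMED to be `uname -m` output.
ko_stem() {
  local version=$1 kernel=${2:-$(uname -r)} arch=${3:-$(uname -m)}
  printf 'hids_driver_%s_%s_%s\n' "$version" "$kernel" "$arch"
}

# Hypothetical helper: write <stem>.sign next to <stem>.ko, ASSUMING the
# sign file holds just the hex sha256 digest of the .ko file.
make_sign() {
  local ko=$1
  [[ -f $ko && $ko == *.ko ]] || { echo "not a .ko file: $ko" >&2; return 1; }
  sha256sum -- "$ko" | awk '{ print $1 }' > "${ko%.ko}.sign"
}

# Example (on the Nginx server, after compiling the driver):
#   stem=$(ko_stem 1.7.0.4)
#   make_sign "$stem.ko"
#   cp "$stem.ko" "$stem.sign" /elkeid/nginx/ElkeidAgent/agent/component/driver/ko/
#   chown -R nginx:nginx /elkeid/nginx
```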

4. HTTPS configuration

Elkeid HTTPS configuration documentation

5. Upgrade specific components

If a component has been updated or recompiled, you can reinstall that component with the elkeidup reinstall command. For example, the HUB Community Edition was updated in release v1.9.1.1; you can reinstall it with the following commands.

# {v1.9.1.1} is the unzipped package directory for v1.9.1.1

# reinstall hub

cp {v1.9.1.1}/package/hub/hub.tar.gz /root/.elkeidup/package/hub/hub.tar.gz

cp {v1.9.1.1}/package/hub_leader/hub_leader.tar.gz /root/.elkeidup/package/hub_leader/hub_leader.tar.gz

/root/.elkeidup/elkeidup reinstall --component Hub

/root/.elkeidup/elkeidup reinstall --component HubLeader

Deploying HUB separately

If you need to deploy HUB separately, use the --hub_only parameter of elkeidup. The steps are as follows:

1. Configure password-free SSH login as root on the target machines

Even if the deployment machine is the local machine, you still need to configure password-free login to it, and login must take less than 1s. Use the following command to verify: the two date commands must print the same time.

date && ssh root@{ip} date

# The output time difference should be less than 1s

2. Download the release package and set up the directory

mkdir -p /root/.elkeidup && cd /root/.elkeidup

wget https://github.com/bytedance/Elkeid/releases/download/v1.9.4/elkeidup_hub_v1.9.1.tar.gz -O elkeidup.tar.gz && tar -xf elkeidup.tar.gz

chmod a+x /root/.elkeidup/elkeidup

3. Generate and modify config.yaml

If this is not a stand-alone deployment, refer to the deployment resource manual to modify config.yaml.

cd /root/.elkeidup

## Generate hub only configurations

./elkeidup init --host {ip} --hub_only

mv config_example.yaml config.yaml

4. Deployment

cd /root/.elkeidup

# Command is interactive

./elkeidup deploy --hub_only

## status

./elkeidup status --hub_only

## undeploy

./elkeidup undeploy --hub_only

5. Visit the HUB front end

After a successful installation, run cat /root/.elkeidup/elkeid_passwd to see the randomly generated passwords and associated URLs for each component.

| Field | Description |
|---|---|
| elkeid_hub_frontend | HUB front-end account password |
| grafana | Grafana account password |
| grafana | Grafana address |
| elkeid_hub_frontend | Elkeid HUB front-end address |
| elkeid_service_discovery | Service Discovery address |

To access elkeid_hub_frontend, refer to the Elkeid HUB Quick Start Tutorial.

6. HTTPS configuration

Please refer to the Elkeid HTTPS configuration documentation.

Resource Configuration of Elkeid Community Edition

Elkeid Architecture diagram

Note: Currently, the community version of Elkeid HUB only supports stand-alone deployment.

Components in detail

| Component name | Minimum deployment in the testing environment | Production environment | Listen ports | Description |
|---|---|---|---|---|
| Redis | single | Three, Sentinel mode (only 3 are supported; larger clusters must be swapped in after deployment) | | cache database |
| MongoDB | single | Three, replica set mode (only 3 are supported; larger clusters must be swapped in after deployment) | | database |
| Kafka | single | Calculated by the number of agents (automatic deployment supports only 3 brokers; more must be swapped in after deployment) | | message channel |
| Nginx | single | Single or multiple. An internal CDN is recommended for downloads; if external access is needed, a self-built LB is recommended | | File server and reverse proxy |
| Service Discovery | single | two to three | | Service discovery |
| HUB | single | The community version only supports a single instance (evaluate separately whether to use the community version in production) | | rules engine |
| HUB Leader | single | The community version only supports a single instance (evaluate separately whether to use the community version in production) | | Rules engine cluster control layer |
| HIDS Manager | single | two to three | | HIDS control layer |
| Agent Center | single | Calculated by the number of Agents | | HIDS access layer |
| Prometheus | single | Single or two | | Database for monitoring |
| Prometheus Alertmanager | Shares a server with Prometheus | - | | |
| Grafana | single | single | | monitoring panel |
| NodeExporter | No separate server needed; deployed on all machines as a monitoring service | - | | monitoring probe |
| ProcessExporter | No separate server needed; deployed on all machines as a monitoring service | - | | monitoring probe |

Configure Elkeidup

Notes on keywords:

- ssh_host is a generic setting that specifies which machines the component is deployed on. If it is an array, the component supports clustered deployment; otherwise, it only supports stand-alone deployment. See the comments in the configuration file for specific restrictions.

- quota is a generic setting that is eventually converted into cgroup limits.

- In a stand-alone testing environment, all machines can be filled in with the same address.
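To illustrate how a quota string relates to cgroup limits, the hypothetical function below splits values such as 1C2G into CPU cores and gigabytes of memory and prints cgroup-v1-style settings. This is an assumption-laden sketch: elkeidup's actual conversion is not described in this document, and the 100000-microsecond CFS period is simply the usual kernel default.

```bash
# Hypothetical illustration only: NOT elkeidup's real conversion code.
# Turns "<cores>C<gigabytes>G" into cgroup-v1 style CPU and memory limits.
quota_to_cgroup() {
  local quota=$1
  [[ $quota =~ ^([0-9]+)C([0-9]+)G$ ]] || { echo "bad quota: $quota" >&2; return 1; }
  local cpus=${BASH_REMATCH[1]} mem_gb=${BASH_REMATCH[2]}
  local period=100000   # common default CFS period, in microseconds
  echo "cpu.cfs_period_us=$period"
  echo "cpu.cfs_quota_us=$((10#$cpus * period))"
  echo "memory.limit_in_bytes=$((10#$mem_gb * 1024 * 1024 * 1024))"
}
```

For example, `quota_to_cgroup 2C4G` prints a CPU quota of two full periods (200000) and a memory limit of 4 GiB.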

# Redis: single host or 3 hosts; 3 hosts means Sentinel mode
redis:
  install: true
  quota: 1C2G
  ssh_host:
    - redis-1
    - redis-2
    - redis-3

# MongoDB: single host or 3 hosts; 3 hosts means Replica Set mode
mongodb:
  install: true
  quota: 2C4G
  ssh_host:
    - mongo-1
    - mongo-2
    - mongo-3

# Kafka: single host or 3 hosts; 3 hosts means Cluster mode
kafka:
  install: true
  topic: hids_svr
  partition_num: 12 # Default partition number for one topic
  quota: 2C4G
  ssh_host:
    - kafka-1
    - kafka-2
    - kafka-3

# leader: the community edition currently only supports stand-alone mode
leader:
  install: true
  quota: 1C2G
  ssh_host: leader-1

# nginx: one or more hosts, but other components only use the first one by default
nginx:
  install: true
  quota: 1C2G
  ssh_host:
    - nginx-1
    - nginx-2
  domain: # domain name pointing to the nginx machine; only one is supported
  public_addr: # public IP of the nginx machine; only one is supported

# sd: one or more hosts
service_discovery:
  install: true
  quota: 1C2G
  ssh_host:
    - sd-1
    - sd-2

# hub: the community edition currently only supports stand-alone mode
hub:
  install: true
  quota: 2C4G
  ssh_host: hub-1

# manager: one or more hosts
manager:
  install: true
  quota: 2C4G
  ssh_host:
    - manager-1

# ac: one or more hosts
agent_center:
  install: true
  grpc_conn_limit: 1500 # maximum number of connections per AC
  quota: 1C2G
  ssh_host:
    - ac-1

# prometheus: one or two hosts; the second one is used only for double-writing
prometheus:
  quota: 1C2G
  ssh_host:
    - prometheus-1

# grafana: one host only
grafana:
  quota: 1C2G
  ssh_host: grafana-1
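Before running elkeidup deploy, the host-count rules stated in the comments of the configuration above can be checked mechanically. `check_host_count` is a hypothetical helper written from those comments alone; the authoritative restrictions are the ones in the generated config file.

```bash
# Hypothetical check derived only from the config comments:
# redis/mongodb/kafka: 1 or 3 hosts; leader/hub/grafana: exactly 1;
# prometheus: 1 or 2; nginx/service_discovery/manager/agent_center: 1 or more.
check_host_count() {
  local component=$1 count=$2
  case $component in
    redis|mongodb|kafka) [[ $count == 1 || $count == 3 ]] ;;
    leader|hub|grafana)  [[ $count == 1 ]] ;;
    prometheus)          [[ $count == 1 || $count == 2 ]] ;;
    nginx|service_discovery|manager|agent_center) (( count >= 1 )) ;;
    *) echo "unknown component: $component" >&2; return 2 ;;
  esac
}
```

For example, `check_host_count redis 2` fails, because two Redis hosts form neither a stand-alone nor a Sentinel deployment.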

Build Elkeid CWPP from Source Code

In the current community version, some components have not been open-sourced. In particular, Elkeidup and Hub are currently provided only as community version binaries, so we cannot provide a manual for building everything from source from scratch. You can run executables built from source either by replacing the specified files in the package before installation, or by replacing the executables after installation. The specific file locations and their correspondences are described below.

Replace before installation

Agent

The Agent is built from source during elkeidup deploy, so you can replace the following file in the package. We recommend extracting the file first and confirming that its files and directory structure match those of the original.

package/agent/v1.9.1/agent/elkeid-agent-src_1.7.0.24.tar.gz

Driver Plugin

The Driver plugin is also built from source during elkeidup deploy, so you can replace the following file in the package as well. We recommend extracting the file first and confirming that its files and directory structure match those of the original.

package/agent/v1.9.1/driver/driver-src_1.0.0.15.tar.gz

Other agent plugins

Other agent plugins are pre-compiled. Compile each one according to its own documentation, then replace the corresponding file. Note that plugins come in two formats: plg is an executable file, and tar.gz is a compressed package. The version numbers are currently hard-coded in elkeidup and must stay the same; do not change them.

package/agent/v1.9.1/driver/driver-src_1.0.0.15.tar.gz

package/agent/v1.9.1/baseline/baseline-default-aarch64-1.0.1.23.tar.gz

package/agent/v1.9.1/baseline/baseline-default-x86_64-1.0.1.23.tar.gz

package/agent/v1.9.1/collector/collector-default-aarch64-1.0.0.140.plg

package/agent/v1.9.1/collector/collector-default-x86_64-1.0.0.140.plg

package/agent/v1.9.1/etrace/etrace-default-x86_64-1.0.0.92.tar.gz

package/agent/v1.9.1/journal_watcher/journal_watcher-default-aarch64-1.0.0.23.plg

package/agent/v1.9.1/journal_watcher/journal_watcher-default-x86_64-1.0.0.23.plg

package/agent/v1.9.1/rasp/rasp-default-x86_64-1.9.1.44.tar.gz

package/agent/v1.9.1/scanner/scanner-default-aarch64-3.1.9.6.tar.gz

package/agent/v1.9.1/scanner/scanner-default-x86_64-3.1.9.6.tar.gz
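Because elkeidup hard-codes plugin versions, a replacement must keep the original file name and format. The hypothetical `replace_plugin` helper below (not part of elkeidup) checks both before copying: a .plg must be executable and a .tar.gz must pass `gzip -t`.

```bash
# Hypothetical helper: copy a rebuilt plugin over the packaged one only if
# the file name is unchanged and the format matches the extension.
replace_plugin() {
  local new=$1 target=$2
  [[ $(basename -- "$new") == "$(basename -- "$target")" ]] \
    || { echo "file name must stay $(basename -- "$target")" >&2; return 1; }
  case $target in
    *.plg)    [[ -x $new ]] || { echo "$new is not executable" >&2; return 1; } ;;
    *.tar.gz) gzip -t "$new" || { echo "$new is not a valid gzip file" >&2; return 1; } ;;
    *)        echo "unexpected plugin format: $target" >&2; return 1 ;;
  esac
  cp -- "$new" "$target"
}
```

Usage: `replace_plugin ./build/collector-default-x86_64-1.0.0.140.plg package/agent/v1.9.1/collector/collector-default-x86_64-1.0.0.140.plg`.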

ko

By default, the pre-compiled ko files are not copied to nginx during deployment; they are provided separately on the release page. After downloading the pre-compiled ko files or compiling them yourself, you can replace the following file. It must be in tar.xz format and decompress into a ko folder, with the same layout as the original.

package/to_upload/agent/component/driver/ko.tar.xz
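A quick layout check before replacing ko.tar.xz could look like the sketch below. `check_ko_archive` is a hypothetical helper; it only verifies that every entry sits under a top-level ko folder, and it relies on GNU tar detecting xz compression automatically when listing.

```bash
# Hypothetical helper: succeed only if every entry in the archive is the
# top-level "ko" folder or something inside it, and the archive is non-empty.
check_ko_archive() {
  local archive=$1 entry
  local -i count=0
  while IFS= read -r entry; do
    entry=${entry#./}   # tolerate a leading "./" in member names
    [[ $entry == ko || $entry == ko/* ]] \
      || { echo "unexpected entry: $entry" >&2; return 1; }
    count+=1
  done < <(tar -tf "$archive")
  (( count > 0 )) || { echo "empty or unreadable archive: $archive" >&2; return 1; }
}
```

Usage: `check_ko_archive ko_1.7.0.9.tar.xz && cp ko_1.7.0.9.tar.xz package/to_upload/agent/component/driver/ko.tar.xz`.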

Manager & ServiceDiscovery & AgentCenter

Compile the corresponding binary, extract the tar.gz at the path below, replace the binary, and pack it back into tar.gz.

# manager

package/manager/bin.tar.gz

# service discovery

package/service_discovery/bin.tar.gz

# agent center

package/agent_center/bin.tar.gz
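The extract, replace and repack steps can be combined as below. `repack_bin` is a hypothetical helper; since this document does not list the binary's path inside each bin.tar.gz, it takes the member name as an argument (check it with `tar -tzf package/manager/bin.tar.gz`). It re-creates the archive with the same entries in the same order, keeps the original as bin.tar.gz.bak, and assumes GNU tar (for `--no-recursion`) and coreutils `realpath`.

```bash
# Hypothetical helper: replace one member of a bin.tar.gz and repack it
# with exactly the original entry names and order.
repack_bin() {
  local archive=$1 member=$2 new_bin=$3 work list status
  archive=$(realpath "$archive") || return 1
  new_bin=$(realpath "$new_bin") || return 1
  work=$(mktemp -d) || return 1
  list=$work.list
  # Remember the original member names, then unpack.
  tar -tzf "$archive" > "$list" && tar -xzf "$archive" -C "$work" \
    || { rm -rf "$work" "$list"; return 1; }
  if ! grep -qxF -- "$member" "$list"; then
    echo "$member not found in $archive" >&2; rm -rf "$work" "$list"; return 1
  fi
  install -m 0755 "$new_bin" "$work/$member"
  cp -- "$archive" "$archive.bak"   # keep the original package
  ( cd "$work" && tar -czf "$archive" --no-recursion -T "$list" )
  status=$?
  rm -rf "$work" "$list"
  return "$status"
}
```

Usage: `repack_bin package/manager/bin.tar.gz <member as listed by tar -tzf> ./manager`.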

Replace after installation

Agent related

The Agent part can be uploaded through the front end; see the Agent release document for details.

ko

Copy the corresponding ko and sign files to the following directory, then run the command below to fix the directory permissions

# ko directory

/elkeid/nginx/ElkeidAgent/agent/component/driver/ko

# Modify permissions

chown -R nginx:nginx /elkeid/nginx

Manager & ServiceDiscovery & AgentCenter

Stop the service, replace the corresponding binary, and restart the service

#manager

systemctl stop elkeid_manager

mv new_manager_bin /elkeid/manager/manager

systemctl start elkeid_manager

#service discovery

systemctl stop elkeid_sd

mv new_sd_bin /elkeid/service_discovery/sd

systemctl start elkeid_sd

#agent center

systemctl stop elkeid_ac

mv new_ac_bin /elkeid/agent_center/agent_center

systemctl start elkeid_ac
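The stop, replace and start sequence above can be wrapped in one function that also keeps the previous binary for rollback. `swap_service_binary` is a hypothetical helper; the SYSTEMCTL override exists only so the function can be dry-run.

```bash
# Hypothetical helper: stop a service, install a new binary (keeping the
# old one as <dest>.bak), and start the service again.
# Set SYSTEMCTL=true (or echo) for a dry run without touching systemd.
swap_service_binary() {
  local service=$1 new_bin=$2 dest=$3
  local systemctl=${SYSTEMCTL:-systemctl}
  [[ -f $new_bin ]] || { echo "missing new binary: $new_bin" >&2; return 1; }
  "$systemctl" stop "$service" || return 1
  if [[ -f $dest ]]; then
    cp -p -- "$dest" "$dest.bak" || { "$systemctl" start "$service"; return 1; }
  fi
  if ! mv -- "$new_bin" "$dest"; then
    "$systemctl" start "$service"   # restart the untouched old binary
    return 1
  fi
  chmod 0755 "$dest"
  "$systemctl" start "$service"
}
```

Usage: `swap_service_binary elkeid_manager new_manager_bin /elkeid/manager/manager`. To roll back, stop the service, move /elkeid/manager/manager.bak back into place, and start it again.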

Elkeidup Community Edition Upgrade Guide 1.7.1 --> 1.9.1

Foreword

First, set up elkeidup 1.7.1 and 1.9.1 so that they coexist, and then switch between them as needed.

For detailed operations, refer to the documentation of both 1.7.1 and 1.9.1.

# rename .elkeidup dir

cd /root

mv .elkeidup .elkeidup_v1.7.1

ln -s .elkeidup_v1.7.1 .elkeidup

# copy cert to v1.9.1

mkdir -p /root/.elkeidup_v1.9.1

cp -r /root/.elkeidup_v1.7.1/elkeid_password /root/.elkeidup_v1.9.1

cp -r /root/.elkeidup_v1.7.1/cert /root/.elkeidup_v1.9.1

# download v1.9.1 package to /root/.elkeidup_v1.9.1

Switch to 1.7.1

rm /root/.elkeidup && ln -s /root/.elkeidup_v1.7.1 /root/.elkeidup

Switch to 1.9.1

rm /root/.elkeidup && ln -s /root/.elkeidup_v1.9.1 /root/.elkeidup
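Both switch commands can be wrapped in a small function that checks the target directory exists and will not clobber a real .elkeidup directory. `elkeidup_switch` is a hypothetical helper; it uses `ln -sfn`, which replaces the symlink itself rather than creating a new link inside the directory it points to.

```bash
# Hypothetical helper: point $BASE/.elkeidup at $BASE/.elkeidup_<version>.
# BASE defaults to /root, matching the commands above.
elkeidup_switch() {
  local version=$1 base=${BASE:-/root}
  local target=$base/.elkeidup_$version link=$base/.elkeidup
  [[ -d $target ]] || { echo "no such directory: $target" >&2; return 1; }
  if [[ -e $link && ! -L $link ]]; then
    echo "$link is a real directory, refusing to replace it" >&2; return 1
  fi
  ln -sfn -- "$target" "$link"
}
```

Usage: `elkeidup_switch v1.9.1` or `elkeidup_switch v1.7.1`.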

Backend

The v1.9.1 backend is currently not compatible with v1.7.1; you need to uninstall the v1.7.1 backend and then install v1.9.1.

Back up data

Back up data as needed:

- Back up MongoDB: the data directory is /elkeid/mongodb. This is only a raw backup of the DB and cannot be used directly; there is currently no automated recovery script, so recovery requires manual conversion.

- Back up HUB policies: the directory is /elkeid/hub. Policies can be imported in the HUB web interface.

Uninstall v1.7.1

After the v1.7.1 backend is uninstalled, the Agent automatically shuts down all plugins after 1 minute and stays in daemon state until the new backend is installed.

#switch to v1.7.1 according to the preface

cd /root/.elkeidup

./elkeidup undeploy

Install v1.9.1

After the v1.9.1 backend is installed, the Agent reconnects within 1 minute, but no plugins are loaded yet; you can see this state in the Console.

#switch to v1.9.1 according to the preface

#For installation documentation, see v1.9.1 installation documentation

cd /root/.elkeidup

./elkeidup deploy

Agent

Confirm configuration and state

- The contents of all files in the cert directories of the v1.7.1 and v1.9.1 elkeidup directories are identical.

- The following settings are consistent between elkeid_server.yaml and elkeidup_config.yaml.

  Note: the field names follow the v1.9.1 format; copy the values over individually rather than overwriting the v1.9.1 file.

  - nginx

    - domain

    - ssh_host

    - public_addr

  - mg

    - ssh_host

- After the backend update is complete, all v1.7.1 Agents have reconnected successfully.
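The cert check can be scripted with `diff -r`. The sketch below uses a hypothetical helper, `certs_identical`, to compare, for example, /root/.elkeidup_v1.7.1/cert and /root/.elkeidup_v1.9.1/cert from the preface. It does not check the YAML fields, which still need to be compared by hand.

```shell
#!/usr/bin/env bash
# Hypothetical check: report whether two cert directories hold identical files.
# Prints "identical" and returns 0, or prints "different" and returns 1
# (a missing directory also counts as "different").
certs_identical() {
  local a="$1" b="$2"
  if diff -r -q "$a" "$b" >/dev/null 2>&1; then
    echo "identical"
  else
    echo "different"
    return 1
  fi
}

# Example: certs_identical /root/.elkeidup_v1.7.1/cert /root/.elkeidup_v1.9.1/cert
```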

Build the v1.9.1 components

./elkeidup agent init

./elkeidup agent build

./elkeidup agent policy create

Submit a task

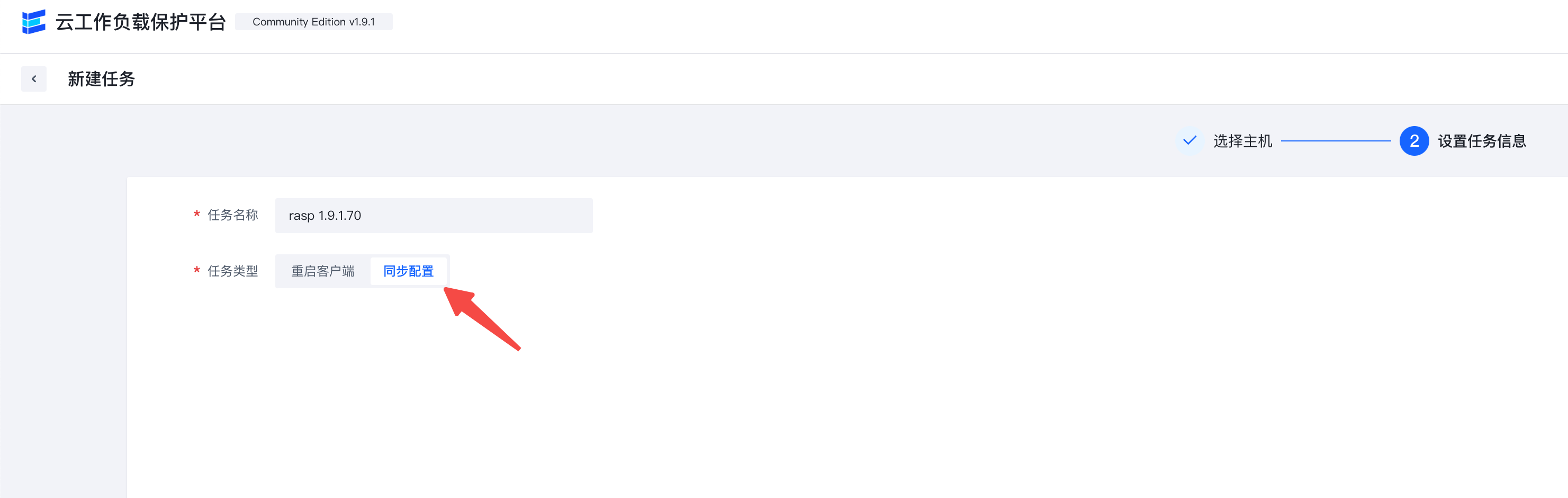

Perform a gray (canary) release as needed. At this point, newly launched or reconnected clients automatically pull the latest configuration and upgrade, while all other clients must be upgraded by manually syncing their configuration.

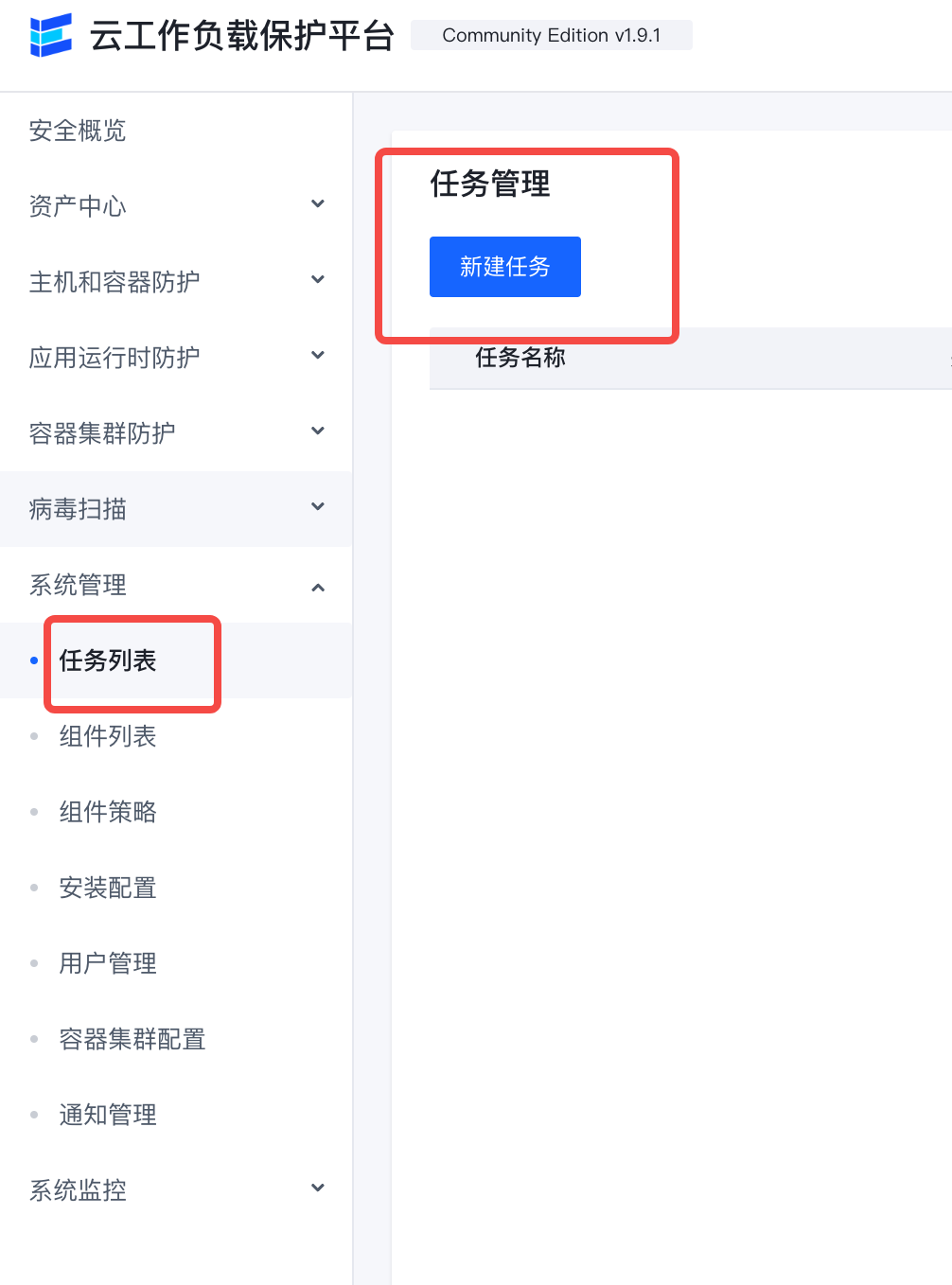

- In the Elkeid Console - Task Management interface, click "New Task", select a single host, click Next, select the "sync up configuration" task type, and click Confirm. Then, find the task you just created on this page, click Run, and observe whether the upgraded host meets expectations.

- In the Elkeid Console - Task Management interface, click "New Task", select all hosts, click Next, select "sync up configuration" task type, and click Confirm. Then, find the task you just created on this page and click Run to upgrade the old version of Agent.

Elkeid Community Edition Expansion Guide

ServiceDiscovery

Expansion with elkeidup

- Edit config.yaml and add the new hosts under sd; the login requirements for these hosts are the same as during installation.

- Run the following command: elkeidup reinstall --component ServiceDiscovery --re-init

Manual expansion

- Copy /elkeid/service_discovery from a machine where SD is already installed to the new machine.

- On every SD instance, update the Cluster.Members item in /elkeid/service_discovery/conf/conf.yaml. It is an array of all SD instances, and each SD must list the addresses of all instances.

- Run /elkeid/service_discovery/install.sh on the new SD instance; it starts SD automatically.

- Restart all existing SD instances: systemctl restart elkeid_sd

Sync the upstream and downstream configuration

SD is currently a dependency of AgentCenter, Manager, and Nginx. After expanding SD, update their configuration and restart them:

- AgentCenter: update sd.addrs in /elkeid/agent_center/conf/svr.yml, then restart with systemctl restart elkeid_ac

- Manager: update sd.addrs in /elkeid/manager/conf/svr.yml, then restart with systemctl restart elkeid_manager

- Nginx: update upstream sd_list in /elkeid/nginx/nginx/nginx.conf, then restart with systemctl restart elkeid_nginx
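After the configuration edits above, the restarts can be done in one pass. A sketch assuming passwordless ssh as root to each host; `restart_after_sd_expansion` and `DRY_RUN` are hypothetical. It restarts every SD instance first, then AgentCenter, Manager, and Nginx, stopping at the first failure.

```shell
#!/usr/bin/env bash
# Hypothetical helper: restart SD instances, then the services that depend on SD.
# Each argument is a space-separated host list; DRY_RUN=1 only prints the commands.
# Assumes Cluster.Members, sd.addrs, and sd_list have already been updated.
restart_after_sd_expansion() {
  local sd_hosts="$1" ac_hosts="$2" mg_hosts="$3" nginx_hosts="$4"
  local run="" host
  [ "${DRY_RUN:-0}" = "1" ] && run="echo"
  for host in $sd_hosts;    do $run ssh "$host" systemctl restart elkeid_sd || return 1; done
  for host in $ac_hosts;    do $run ssh "$host" systemctl restart elkeid_ac || return 1; done
  for host in $mg_hosts;    do $run ssh "$host" systemctl restart elkeid_manager || return 1; done
  for host in $nginx_hosts; do $run ssh "$host" systemctl restart elkeid_nginx || return 1; done
}

# Example: DRY_RUN=1 restart_after_sd_expansion "sd1 sd2 sd3" "ac1" "mg1" "nginx1"
```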

AgentCenter

Expansion with elkeidup

- Edit config.yaml and add the new hosts under ac; the login requirements for these hosts are the same as during installation.

- Run the following command: elkeidup reinstall --component AgentCenter --re-init

Manual expansion

- Copy /elkeid/agent_center from a machine where AC is already installed to the new machine.

- Run /elkeid/agent_center/install.sh on the new AC instance; it installs and starts AC automatically.

Sync the upstream and downstream configuration

If the Agent connects to AC through service discovery, there is no need to sync the upstream and downstream configuration manually.

If the Agent connects to AC through AC addresses hard-coded at build time, you need to rebuild the Agent and add the new AC address to its connection configuration.

Elkeid HTTPS Configuration Guide

1. Overview

- By default, the Elkeid Console listens on ports 8082 and 8072, and the Elkeid HUB listens on ports 8081 and 8071.

- If HTTPS is required, ports 8072 and 8071 can be used for access.

| Protocol | Elkeid Console | Elkeid HUB Console |
|---|---|---|
| HTTP | http://{{NginxIP}}:8082 | http://{{NginxIP}}:8081 |
| HTTPS | https://{{NginxIP}}:8072 | https://{{NginxIP}}:8071 |

2. Use an internal enterprise certificate

To use an internal enterprise certificate, replace the self-signed certificate generated during installation. It is located in the /elkeid/nginx/nginx directory on the machine running nginx and consists of the following two files:

server.key

server.crt

After replacing the above two files, do the following:

chown -R nginx:nginx /elkeid/nginx

systemctl restart elkeid_nginx
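Before replacing server.key and server.crt, it can help to confirm that the new key actually belongs to the new certificate, because nginx typically refuses to load a mismatched pair. A sketch using the standard openssl CLI (`cert_matches_key` is a hypothetical helper name) that compares the public key embedded in the certificate with the one derived from the private key:

```shell
#!/usr/bin/env bash
# Hypothetical pre-check: succeed only if the private key matches the certificate.
# Both openssl commands print the public key in the same PEM form, so they can be compared as text.
cert_matches_key() {
  local crt="$1" key="$2" crt_pub key_pub
  crt_pub="$(openssl x509 -in "$crt" -noout -pubkey 2>/dev/null)" || return 1
  key_pub="$(openssl pkey -in "$key" -pubout 2>/dev/null)" || return 1
  [ -n "$crt_pub" ] && [ "$crt_pub" = "$key_pub" ]
}

# Example:
#   cert_matches_key new/server.crt new/server.key \
#     && cp new/server.crt new/server.key /elkeid/nginx/nginx/ \
#     && chown -R nginx:nginx /elkeid/nginx \
#     && systemctl restart elkeid_nginx
```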

3. Use the self-signed certificate generated at deployment time

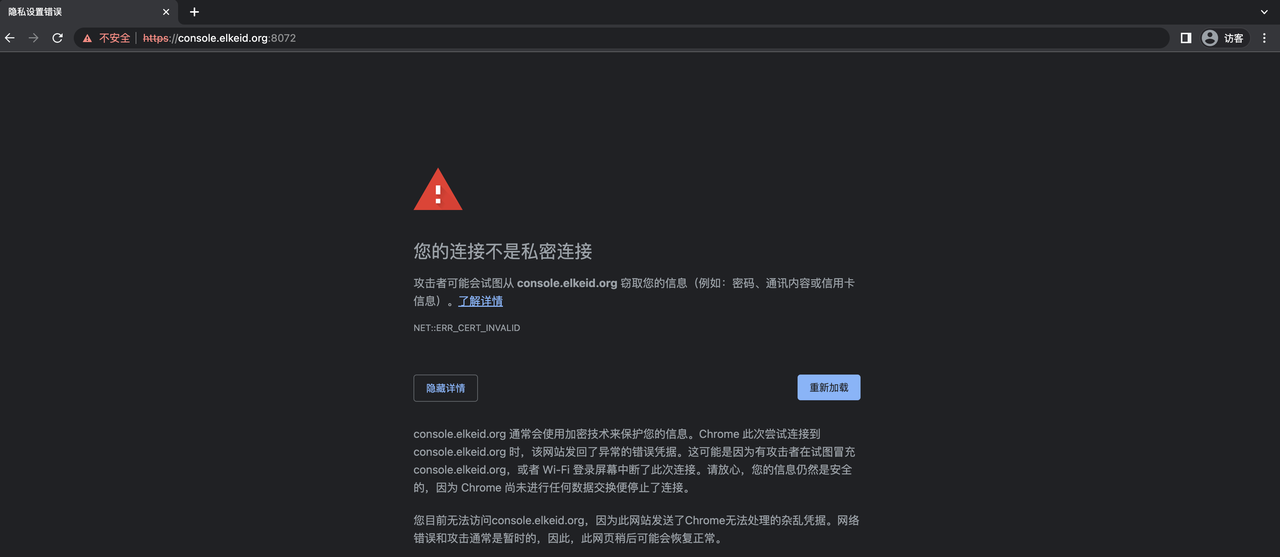

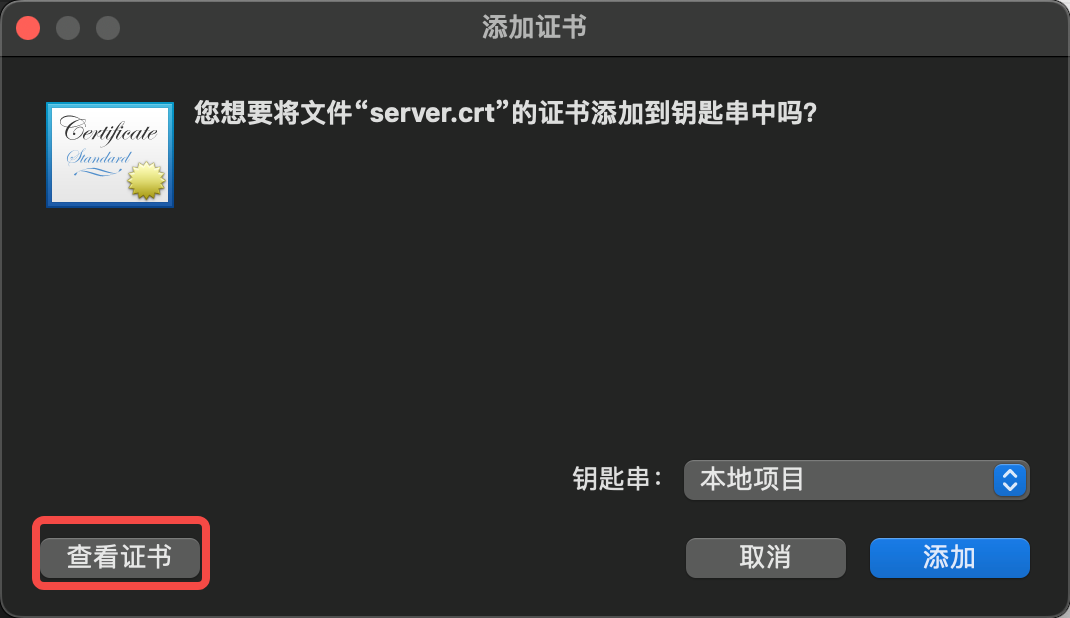

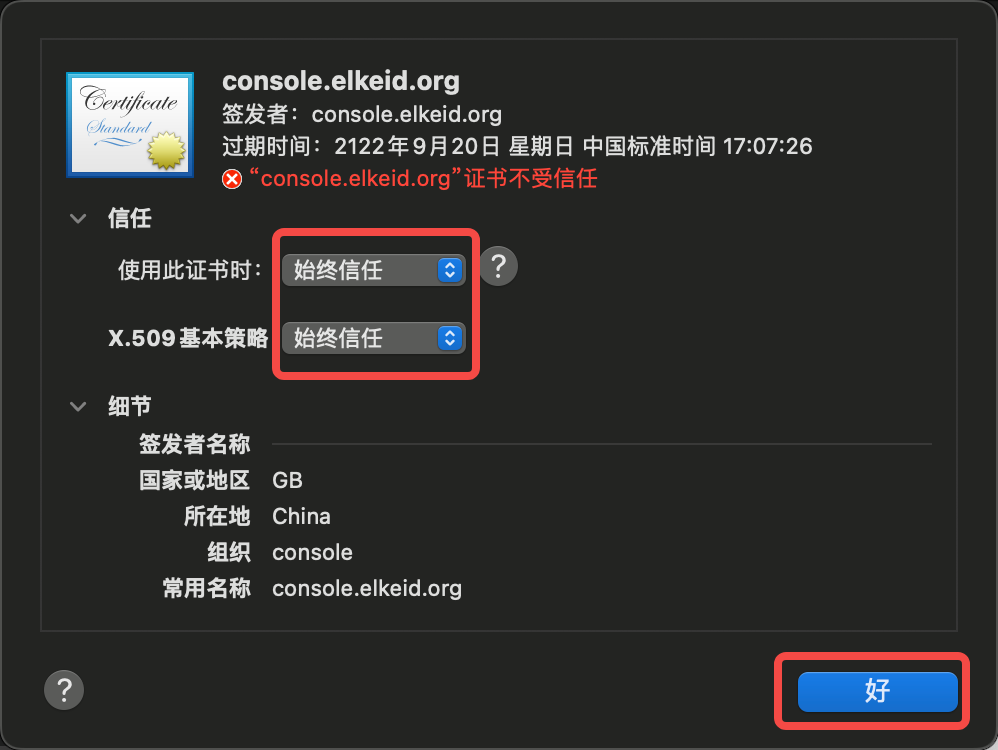

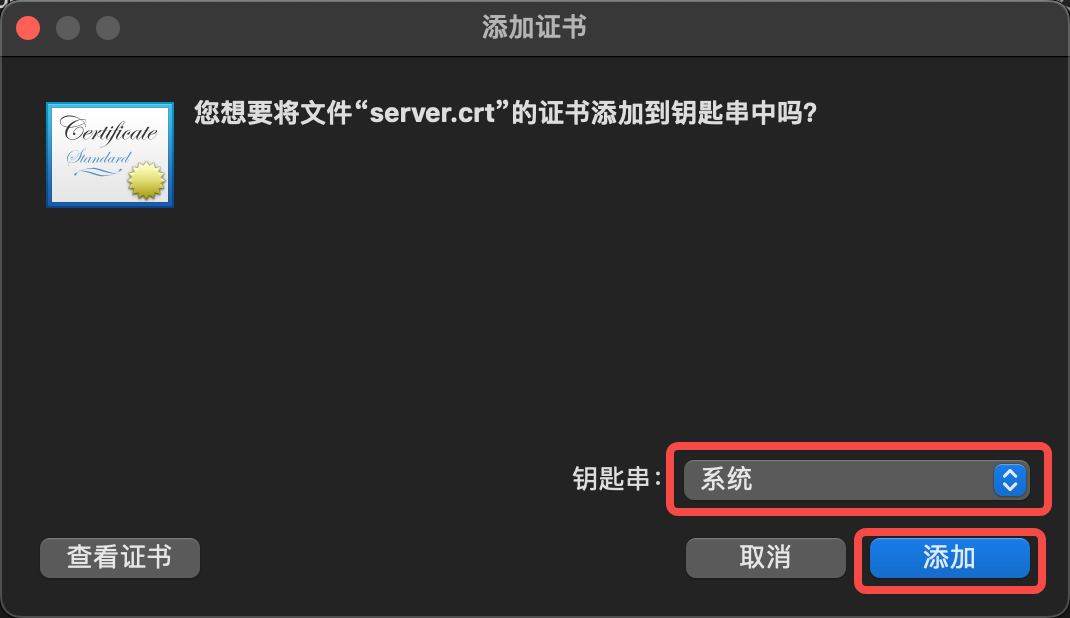

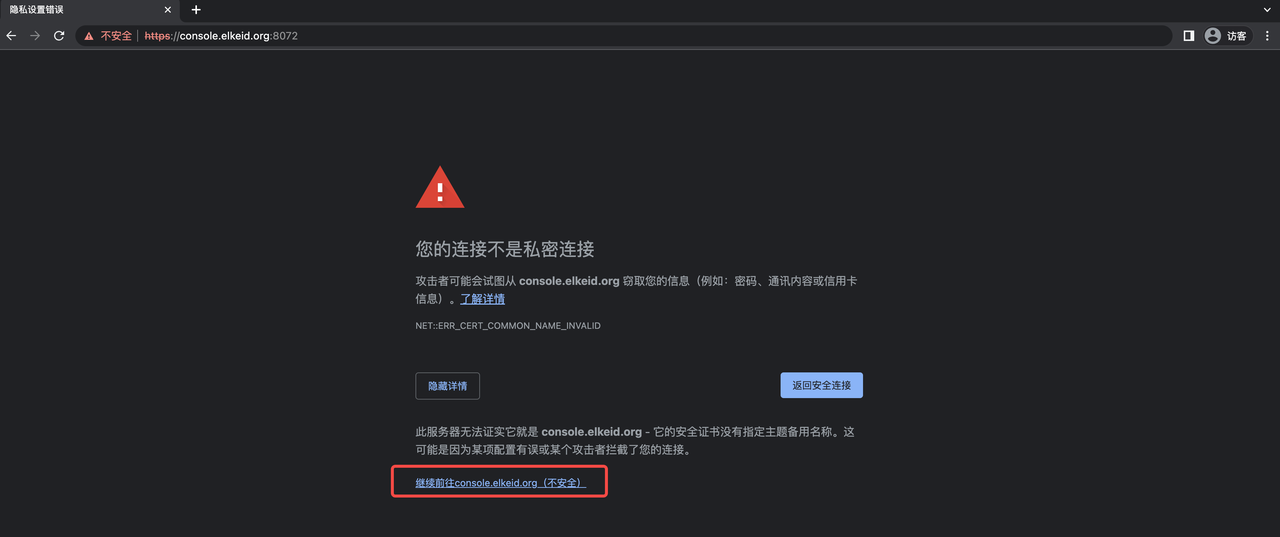

At deployment time Elkeid can only use a self-signed certificate, which Chrome's security settings do not allow you to access directly. To access the console over HTTPS, you must manually trust the certificate as follows:

The following example assumes that the nginx server is reachable as console.elkeid.org, resolved through /etc/hosts or DNS.
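For the /etc/hosts route, the mapping can be added idempotently. A sketch assuming you know the nginx server's IP; `ensure_hosts_entry` is a hypothetical name, and writing to /etc/hosts requires root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add "IP NAME" to a hosts file (default /etc/hosts) unless
# that exact mapping already exists. If NAME is already mapped to a different IP,
# a second line is appended; resolve such conflicts by hand.
ensure_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if awk -v ip="$ip" -v n="$name" \
      '$1 == ip { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (as root): ensure_hosts_entry 10.0.0.5 console.elkeid.org
```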

3.1 macOS

- Open https://console.elkeid.org:8072/ and export the certificate.

- Import the exported certificate and trust it.

- In Keychain Access, mark the certificate as trusted.

- Visit https://console.elkeid.org:8072/ again.

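Elkeid Console Host Security Protection Platform User Manual

Version

Community Edition v1.9.1

Security Overview

The security overview gives a quick picture of the overall security posture, including coverage, alert trends, and operational status (how security alerts and security risks are being handled).

Asset Overview

Shows information about the hosts and container clusters currently covered.

Intrusion Alerts

Shows the number of unhandled alerts and how it has changed over the last 7 days.

Vulnerability Risks

Shows the number of unhandled vulnerabilities and how it has changed over the last 7 days.

Baseline Risks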

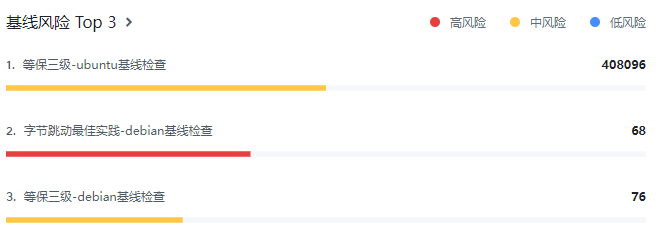

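Shows the counts of the top 3 baseline risks.

Host Risk Distribution

Shows the proportion of hosts that have pending alerts, highly exploitable vulnerabilities, and baseline risks.

Agent Overview

Shows Agent online status and resource usage.

Backend Service Status

Shows the load of backend services.

Asset Center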

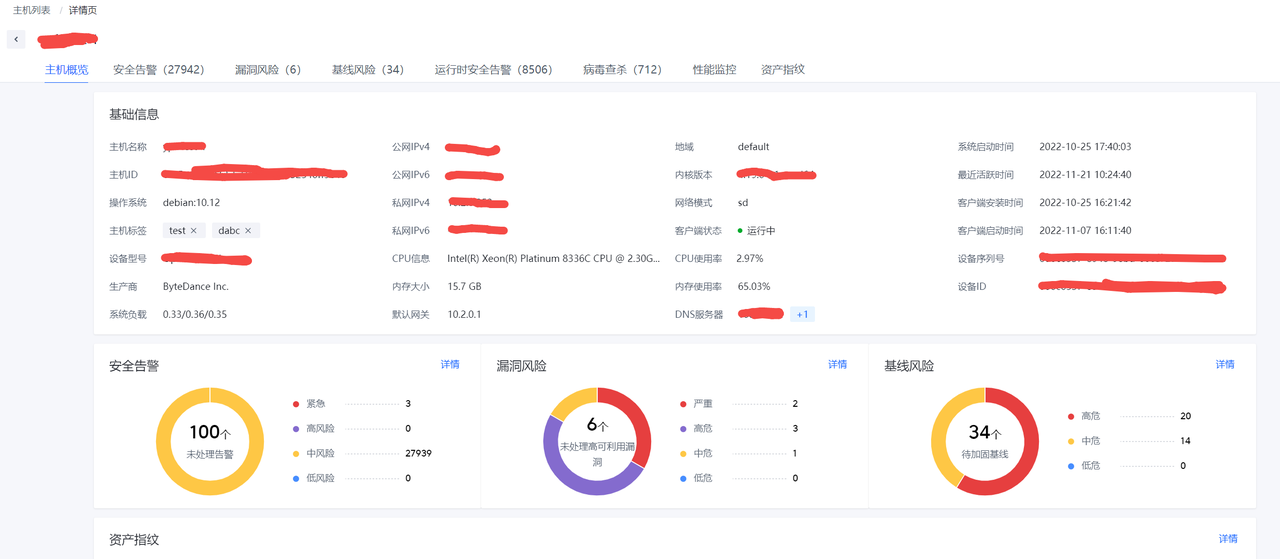

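Operators can use the Asset Center to view asset counts, Agent status, and detailed asset information.

Host List

Shows the list of host assets and the risk status of each host.

Click a host name to view that host's asset and risk information.

Click the tabs on the page to view the different kinds of data for the host. The following are currently supported:

- Security alerts

- Vulnerability risks

- Baseline risks

- Runtime security alerts

- Virus scanning

- Performance monitoring

- Asset fingerprints

Asset Fingerprints

Use this feature to view details of each host's open ports, running processes, system users, scheduled tasks, system services, system software, and more.

Click the tabs on the page to view the corresponding asset data. The following asset data is currently supported:

- Containers

- Open ports

- Running processes

- System users

- Scheduled tasks

- System services

- System software

- System integrity verification

- Applications

- Kernel modules

Click the host name in any row to jump to that host's detail page and view its asset fingerprint data.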

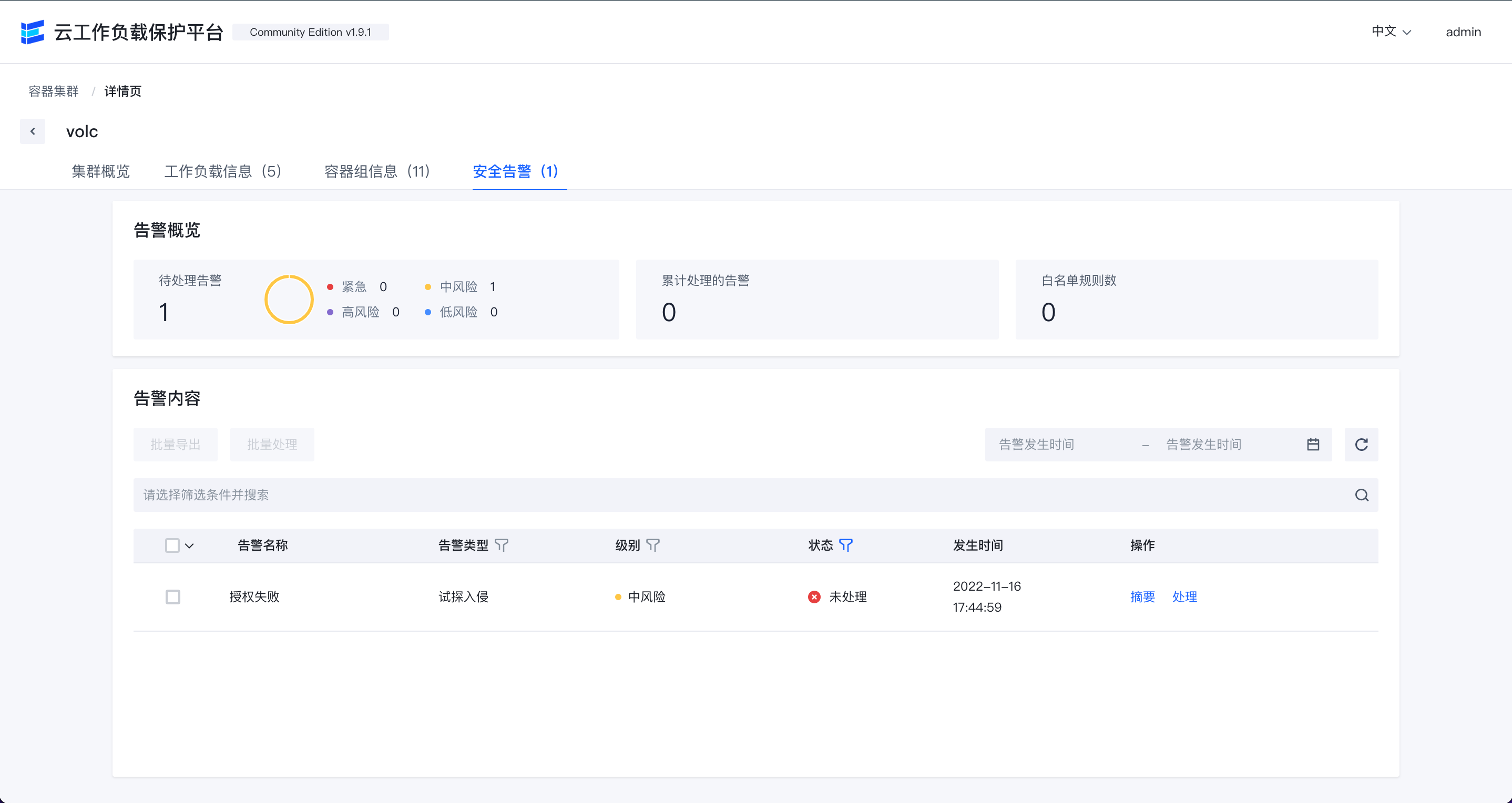

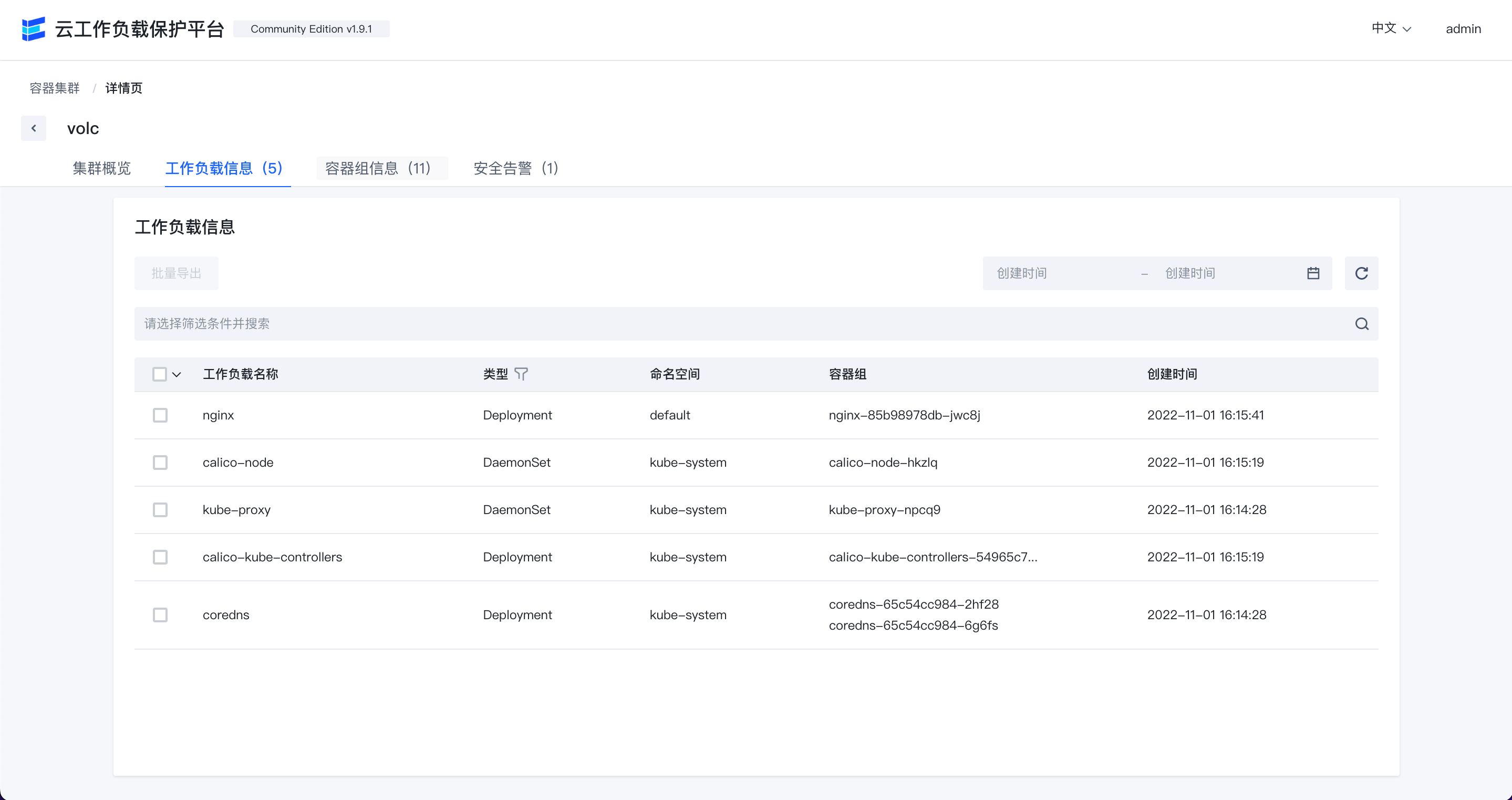

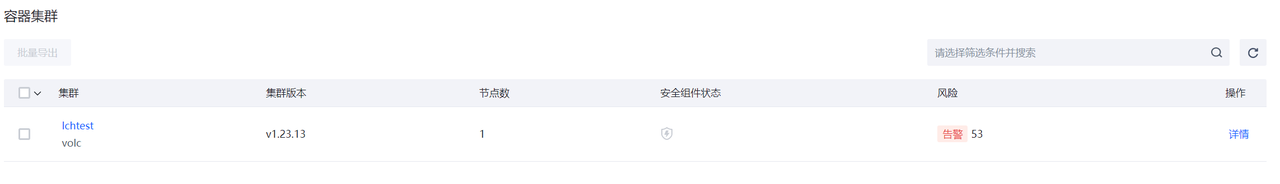

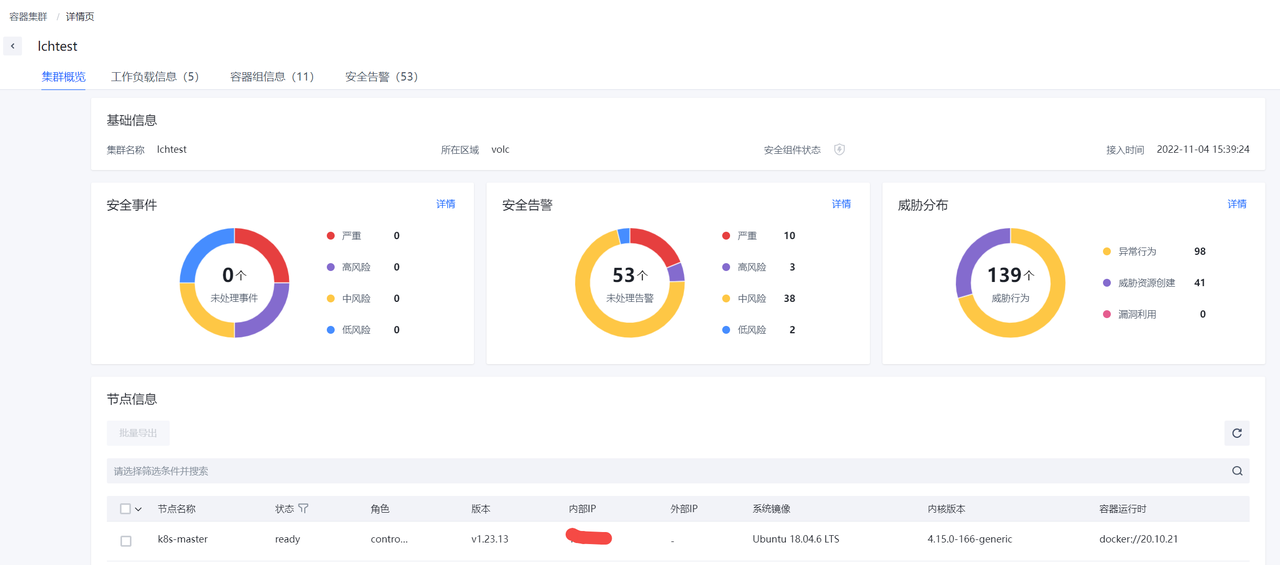

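Container Clusters

Shows information about container clusters that have been onboarded to container cluster protection.

Host and Container Protection

Intrusion Detection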

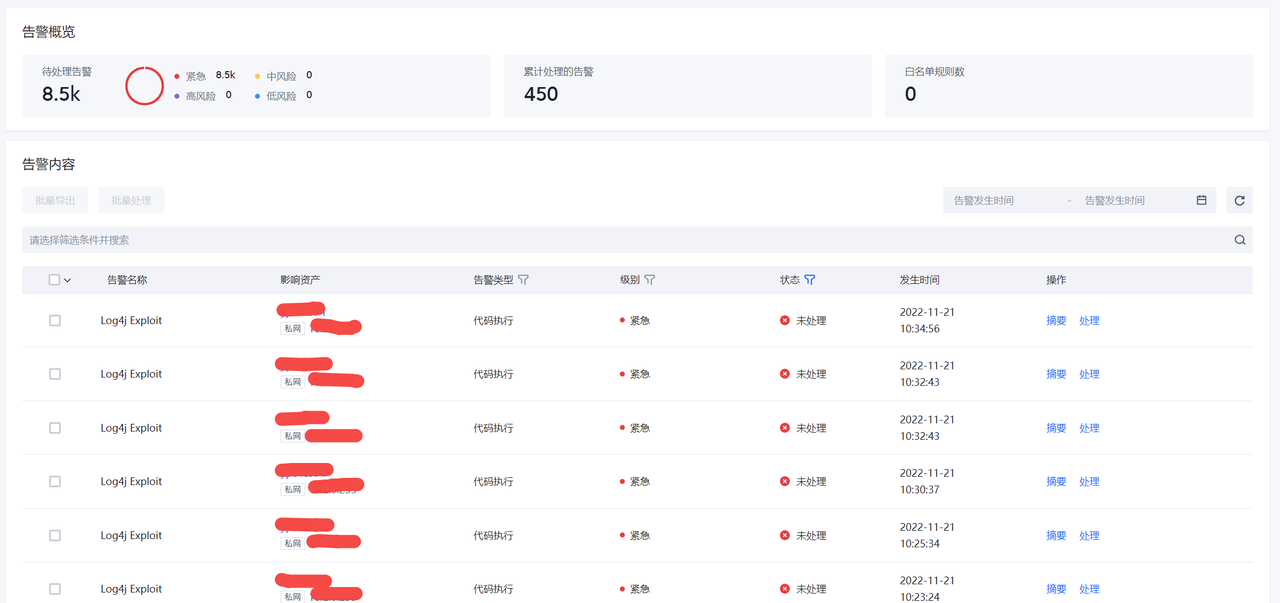

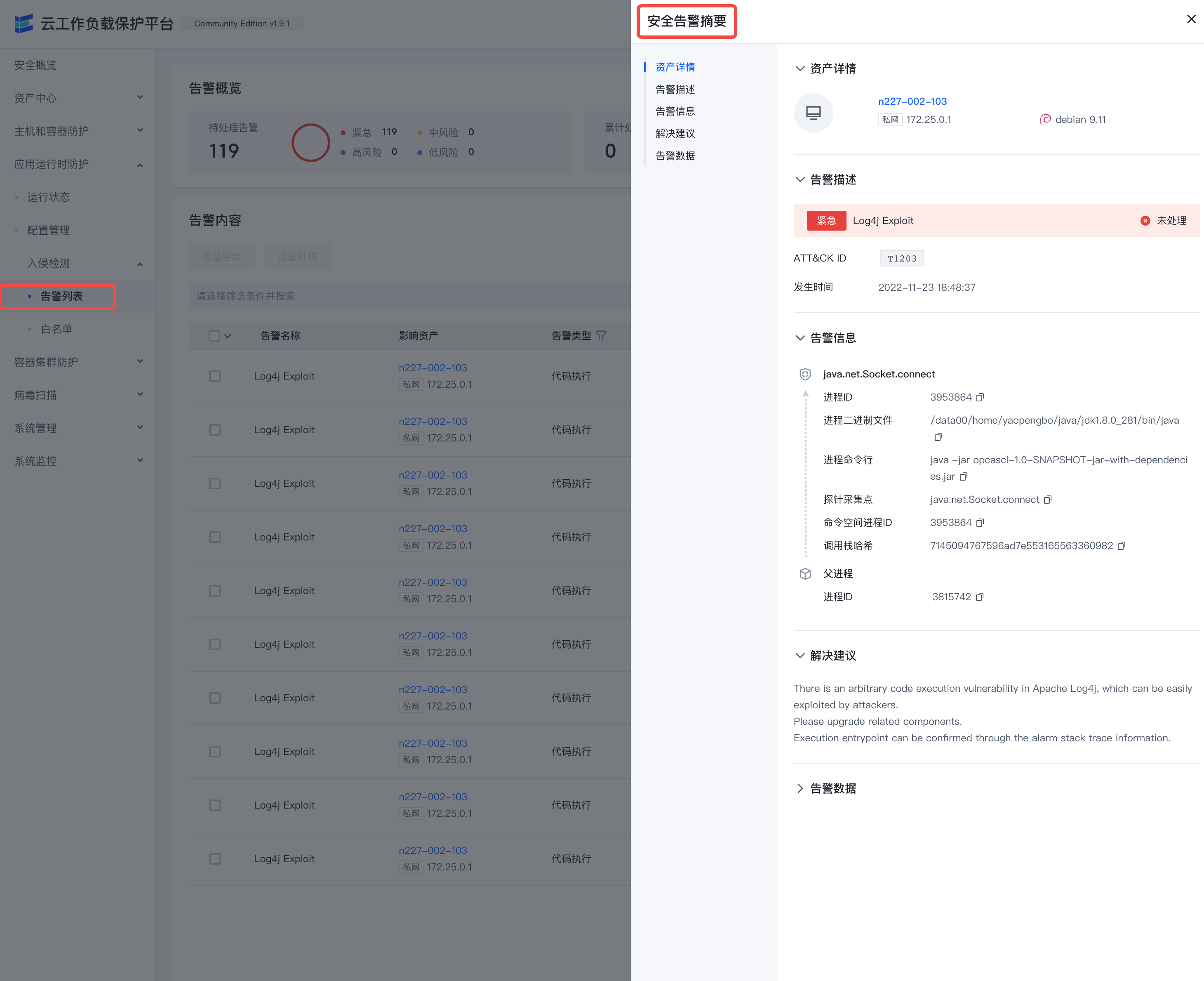

Alert List

The alert list shows the security alerts present in the current environment.

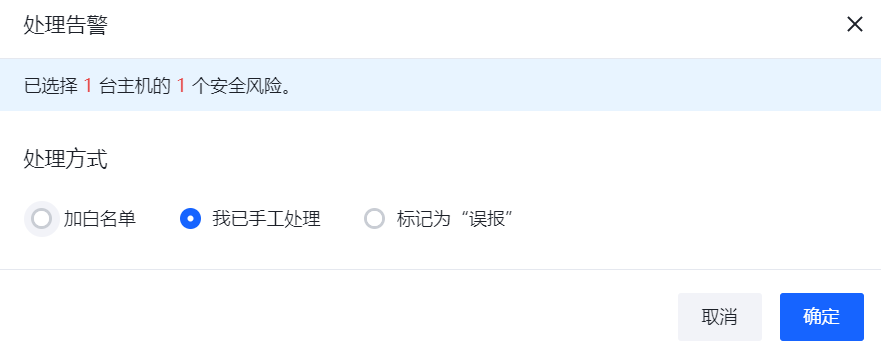

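Click "Summary" to view the key summary information of an alert.

Click "Handle" to process an alert. The supported actions are currently "Whitelist", "Handled manually", and "False positive".

Click "Affected Asset" in a row to jump to the related host's detail page and view the relevant data.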

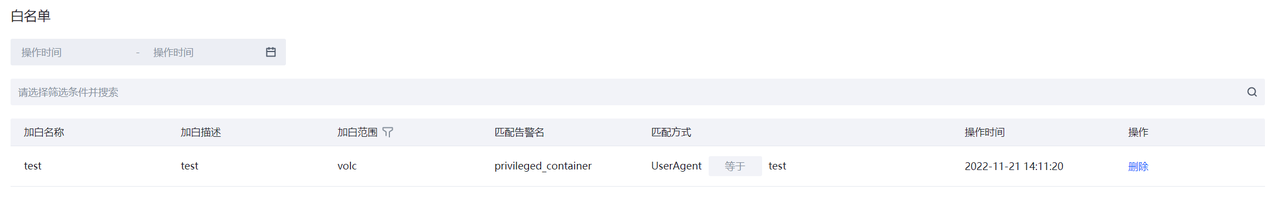

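Whitelist

Whitelist for host and container protection alerts.

Risk Prevention

Vulnerability List

The vulnerability list page shows the security vulnerabilities present in the current environment. By default only high-risk vulnerabilities are shown; click "Check Now" to detect the latest vulnerabilities in the environment.

Click "Details" on the right of a vulnerability entry to view the vulnerability information and the list of affected assets.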

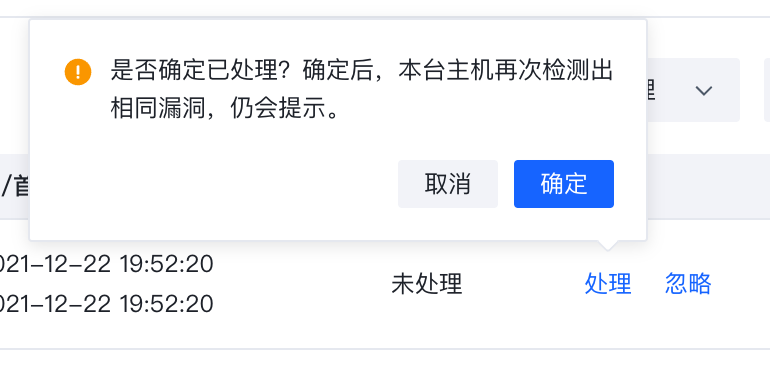

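Vulnerabilities can also be marked as handled or ignored.

Baseline Check

The baseline check page shows the security baseline risks present in the current environment; click "Check Now" to see the latest baseline issues.

Click "Details" on the right of the baseline list to see the corresponding baseline details.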

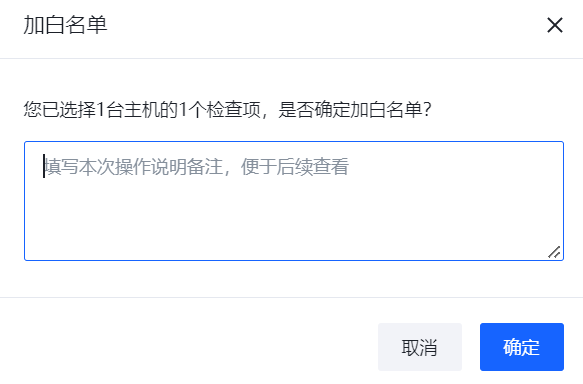

Click "Add to Whitelist" to whitelist an item.

Application Runtime Security Protection

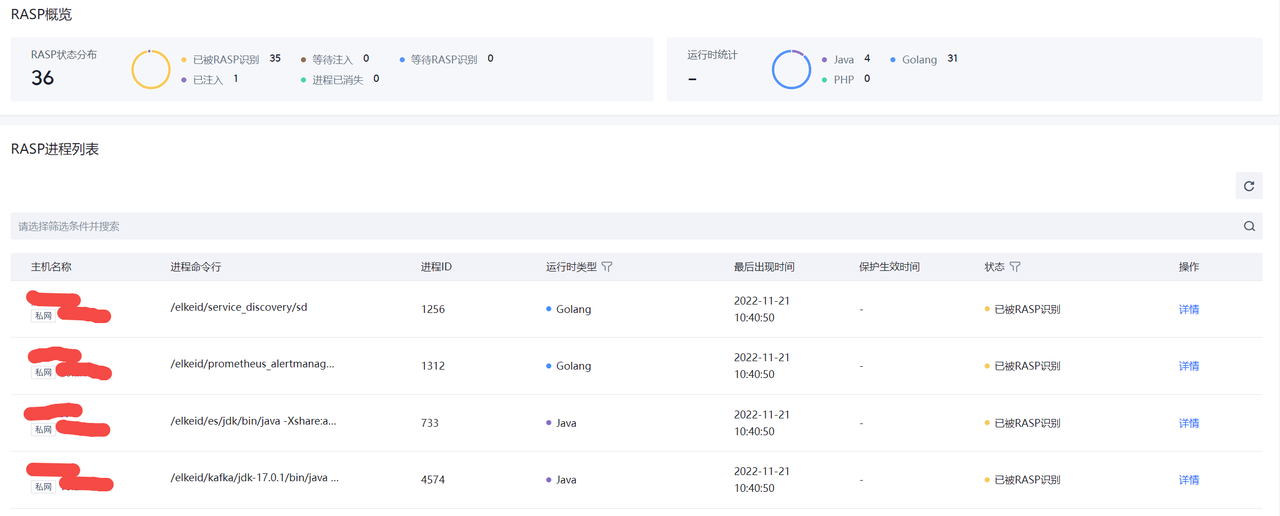

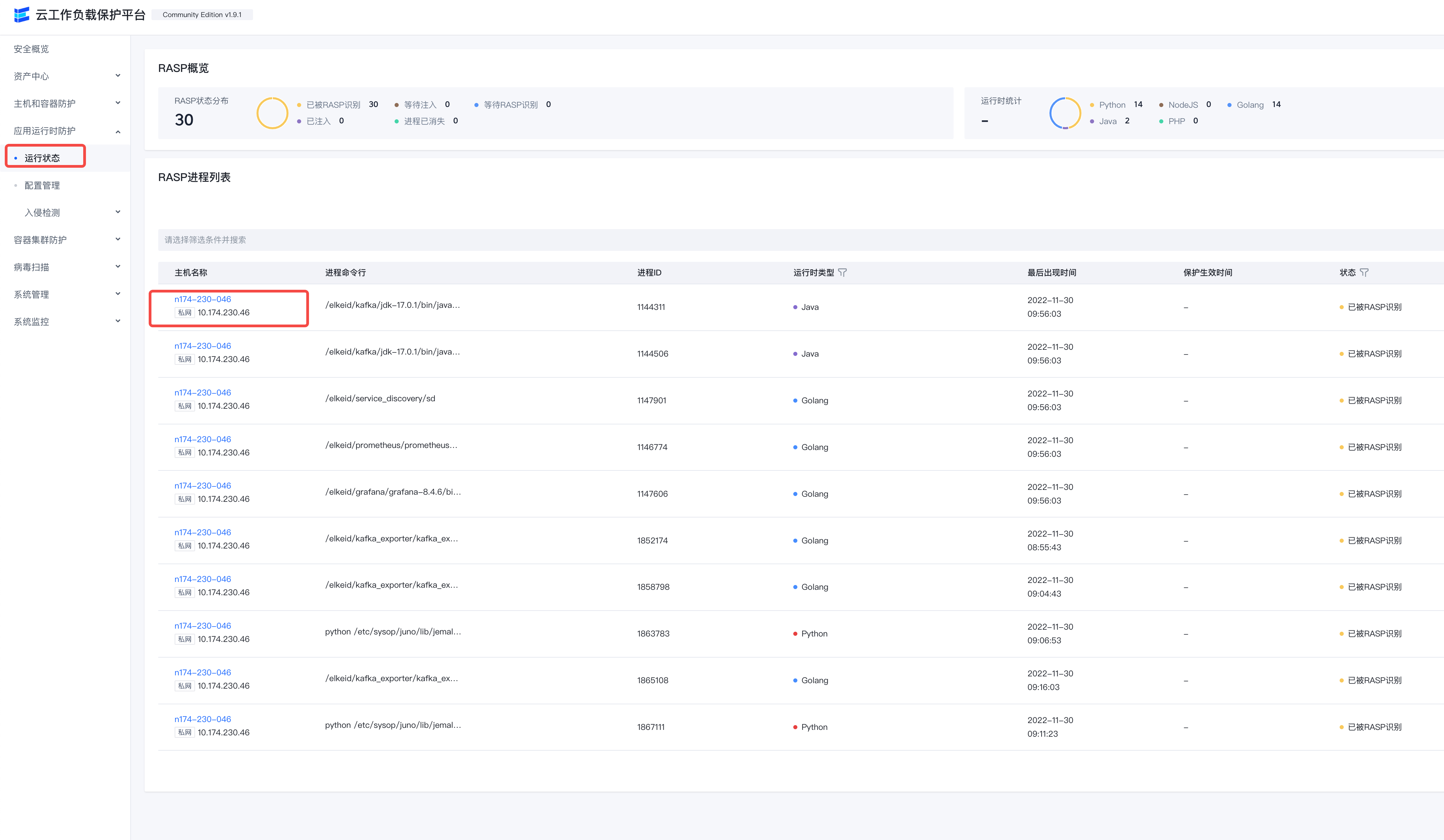

Running Status

Shows the coverage of the runtime security component.

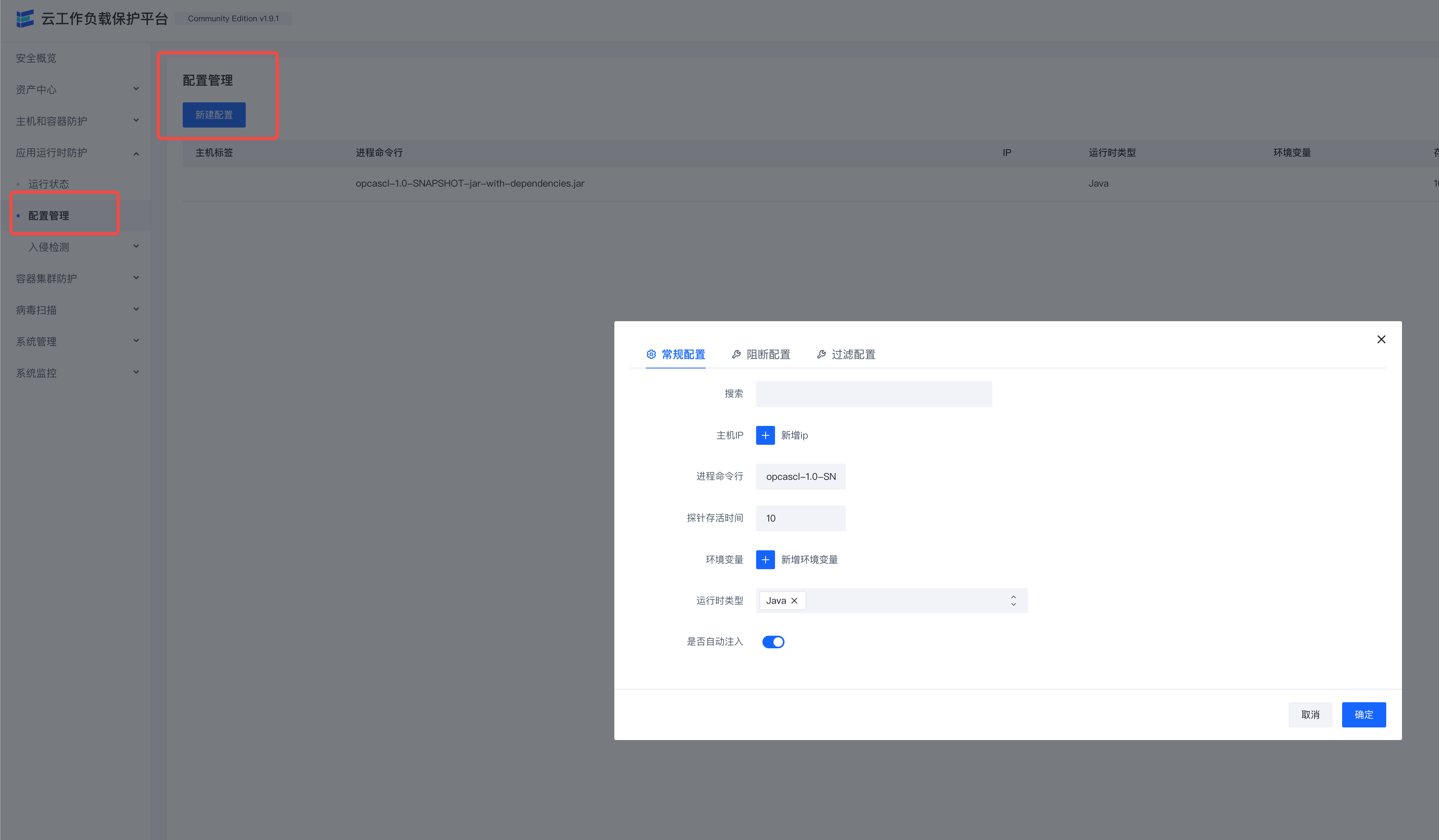

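Configuration Management

Manage the configuration of the runtime security component.

Intrusion Detection

Alert List

Shows the security alerts found by runtime security detection.

Whitelist

Shows the whitelist rules that filter runtime security detection alerts.

Container Cluster Protection

Intrusion Detection

Alert List

Shows the security alerts found by container cluster protection.

Whitelist

Shows the whitelist rules that filter container cluster protection alerts.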

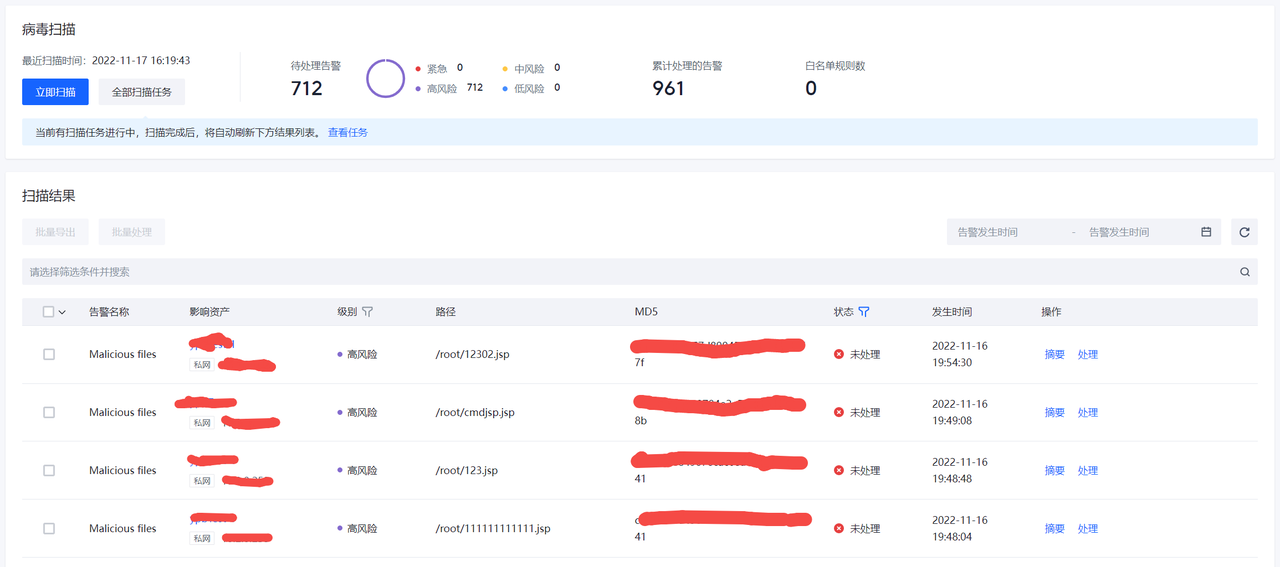

Virus Scan

Virus Scan

Shows information about detected virus files.

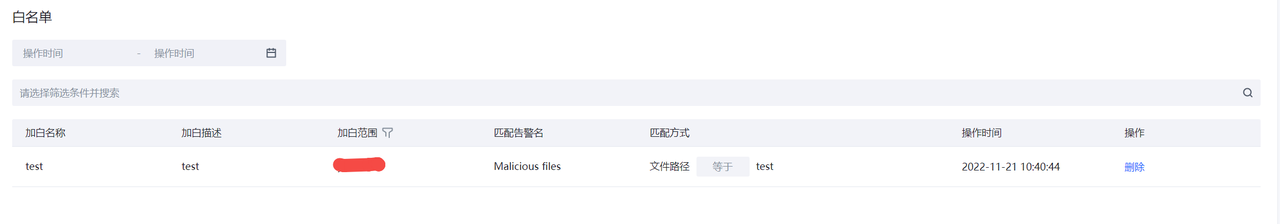

Whitelist

Whitelist used to filter virus detection results.

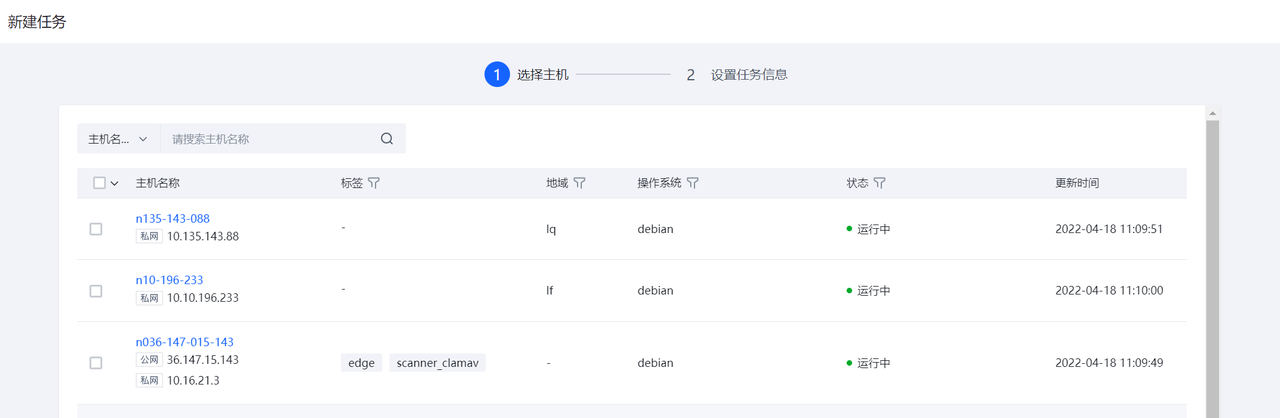

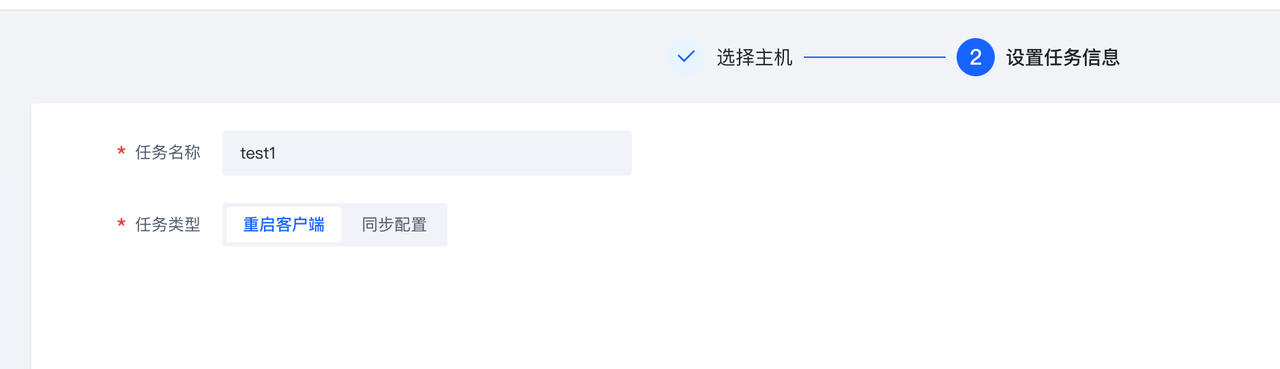

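System Management

Task Management

Used mainly to manage tasks such as restarting clients and syncing configuration.

Click "New Task" to configure a task.

Except for "Restart Client" tasks, all task types require the Agent and plugins to be configured first on the "Component Management" and "Component Policy" pages.

Component Management

Manages upgrade configuration for the Agent and its plugins.

Click "New Component", choose whether the component is the Agent or a plugin, and configure it accordingly.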

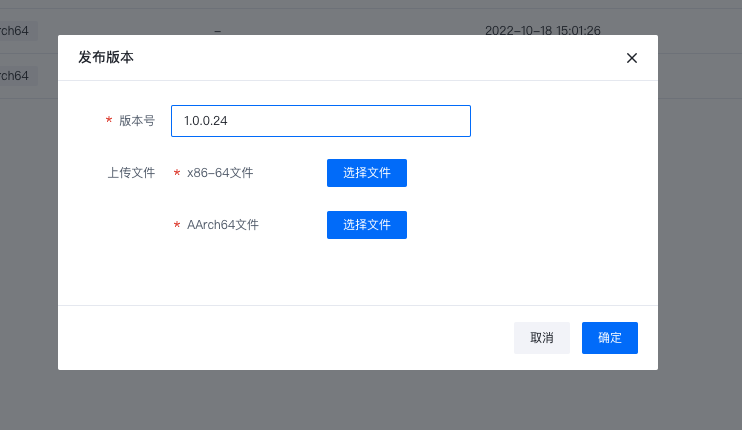

Click "Release Version" and upload the component files for each architecture and distribution to create a version instance.

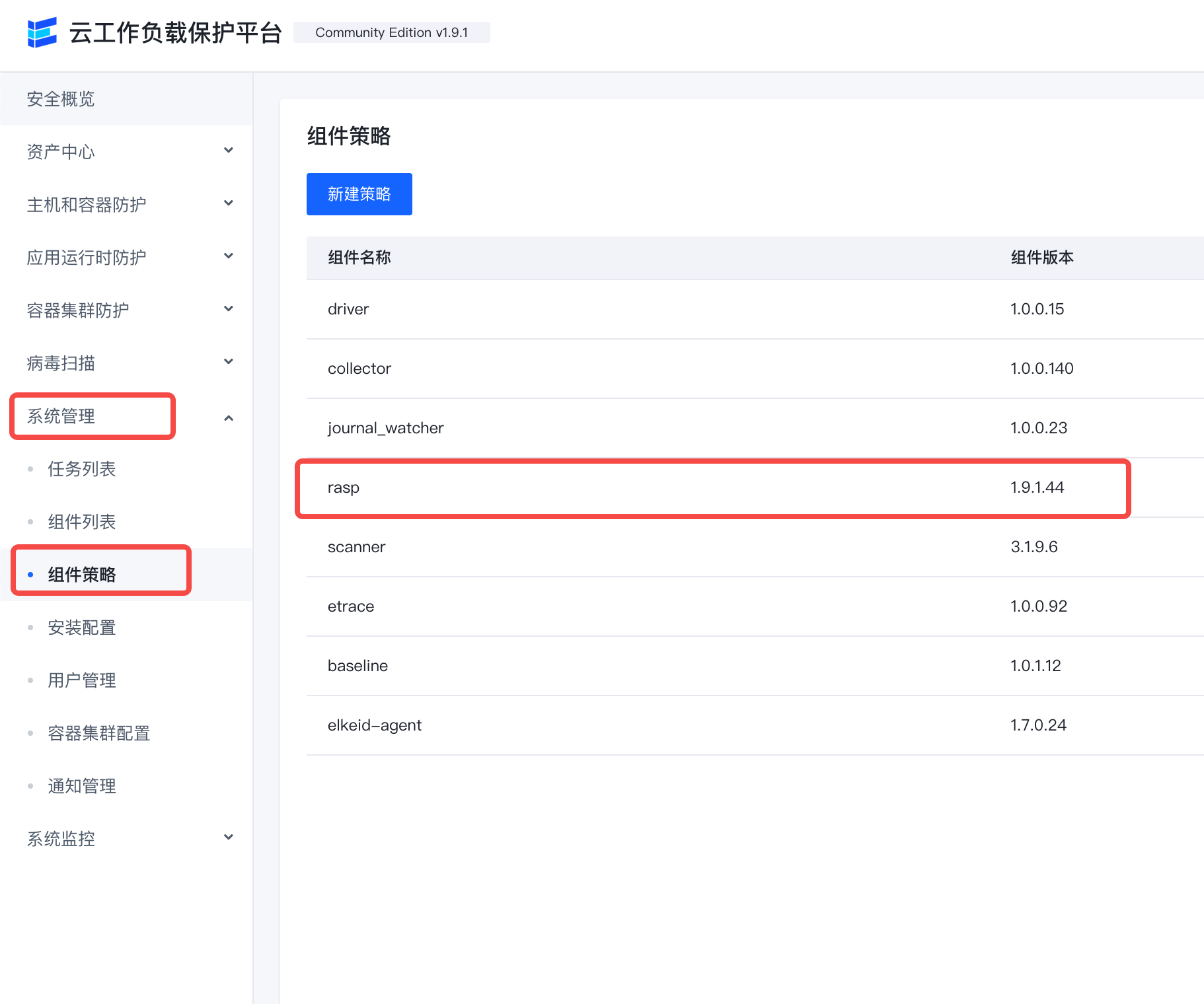

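Component Policy

Component policies control which component versions actually take effect on Agents, which is how updates, uninstalls, and similar operations are performed.

Click "New Policy", select a component name and a matching version, and the policy is added to the policy group.

Use blocking rules to keep certain hosts from loading (activating) the corresponding component.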

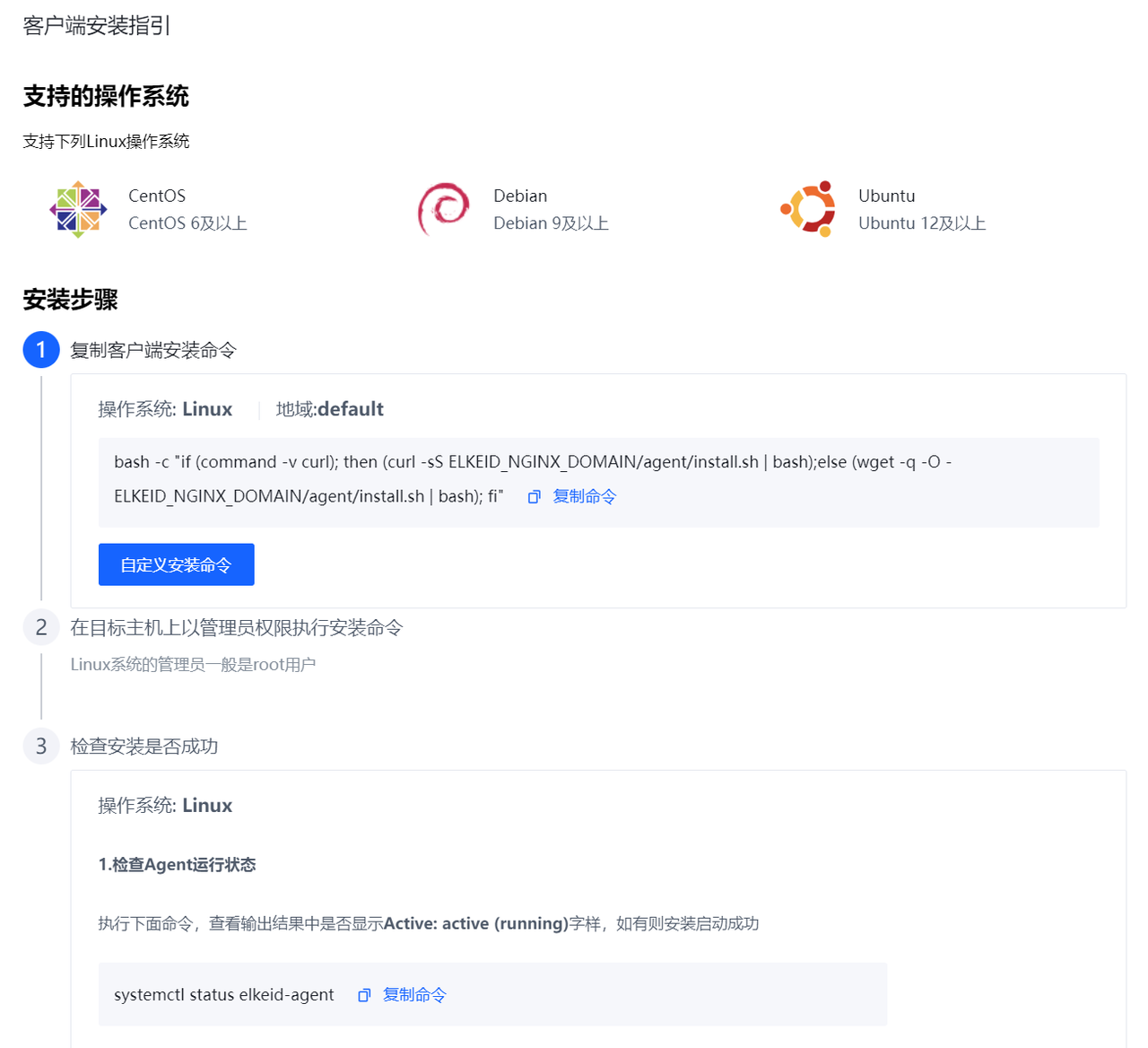

Installation Configuration

Use the deployment help to deploy and uninstall the Agent.

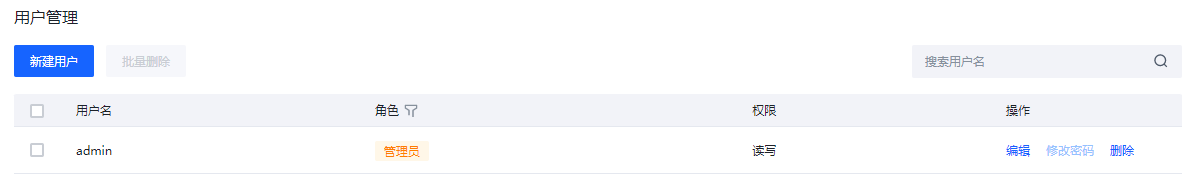

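User Management

Manage Elkeid Console users here, for example changing passwords and adding or deleting users.

Click "Add User" to create a new user. When setting a password, note the password strength requirements.

Container Cluster Configuration

Configure the onboarding of container cluster protection.

Notification Management

Configure alert notifications and expiration notifications. Feishu, DingTalk, and others are supported.

System Monitoring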

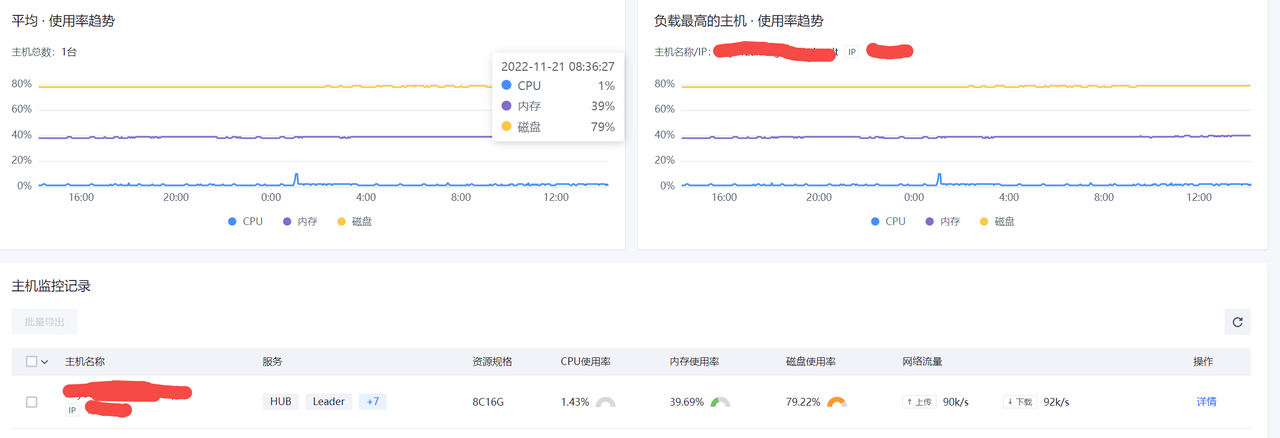

Backend Monitoring

View CPU, memory, disk, and network traffic usage of the hosts running backend services.

Backend Services

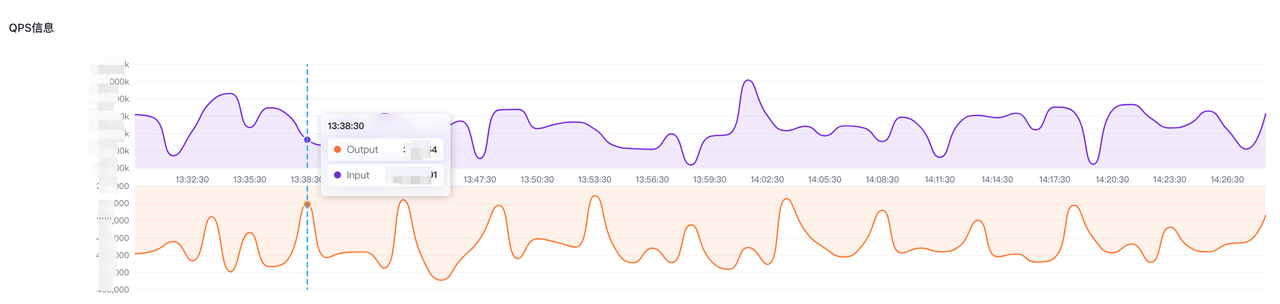

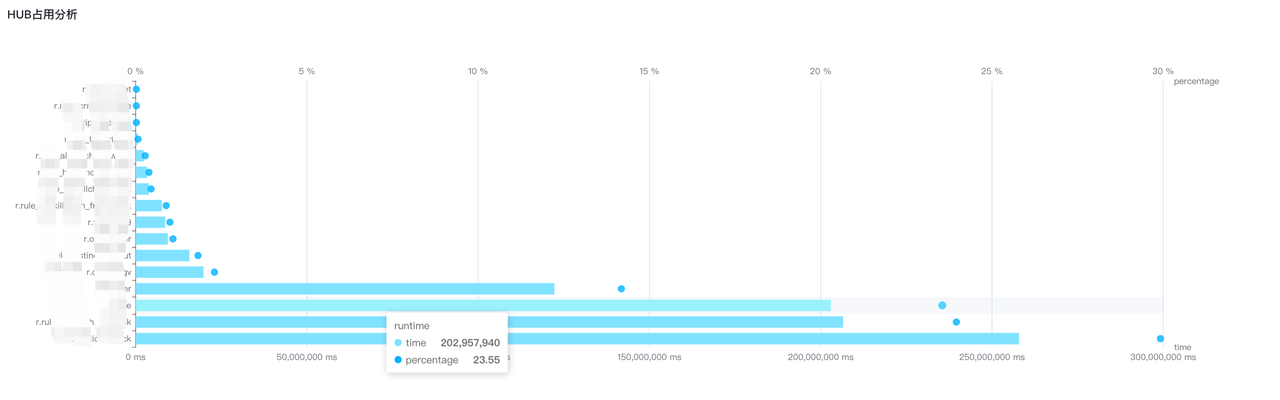

View QPS, CPU, and memory usage of each backend service module.

Service Alerts

Shows service anomaly alerts detected in the last 1 hour or 24 hours.

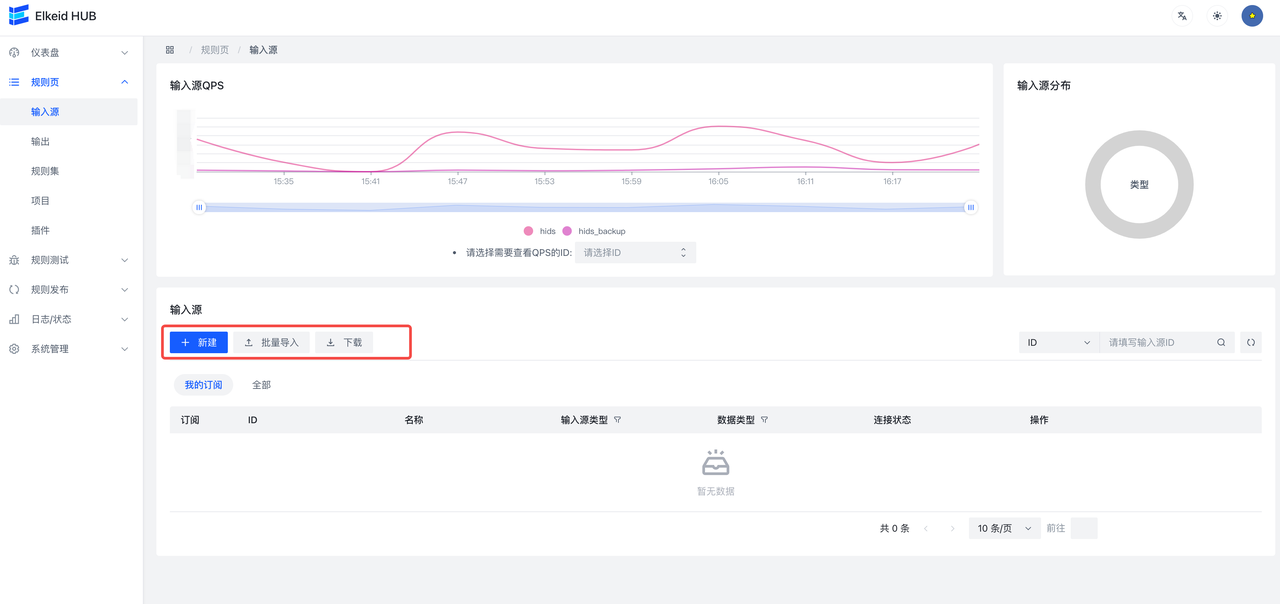

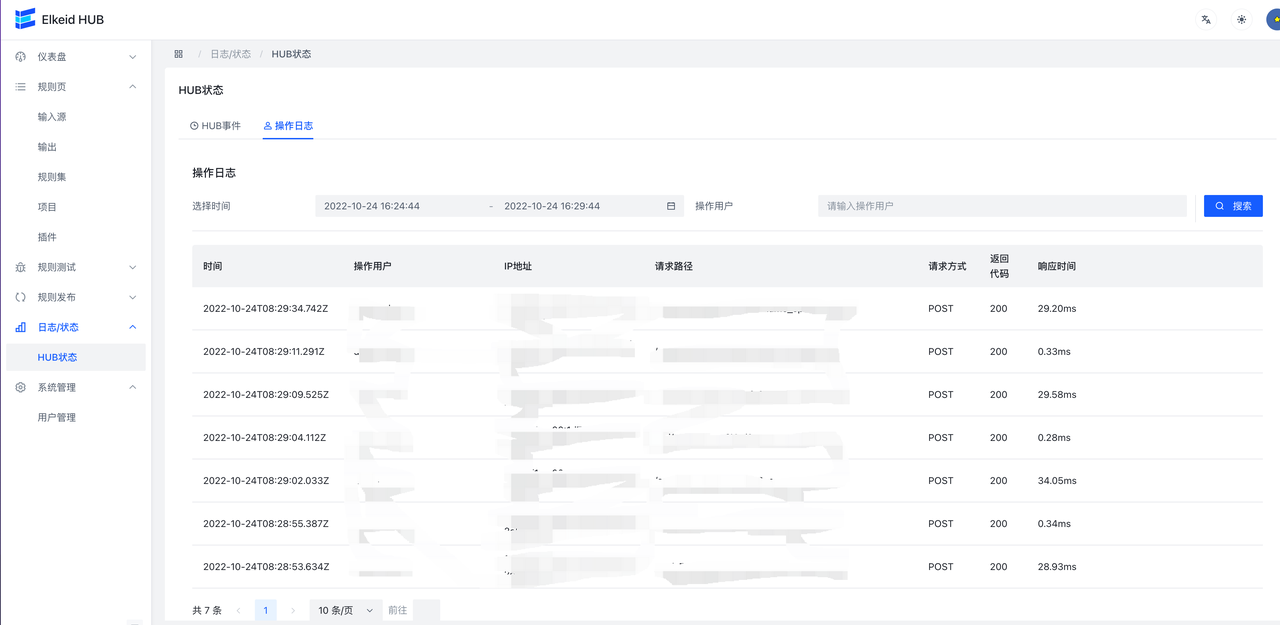

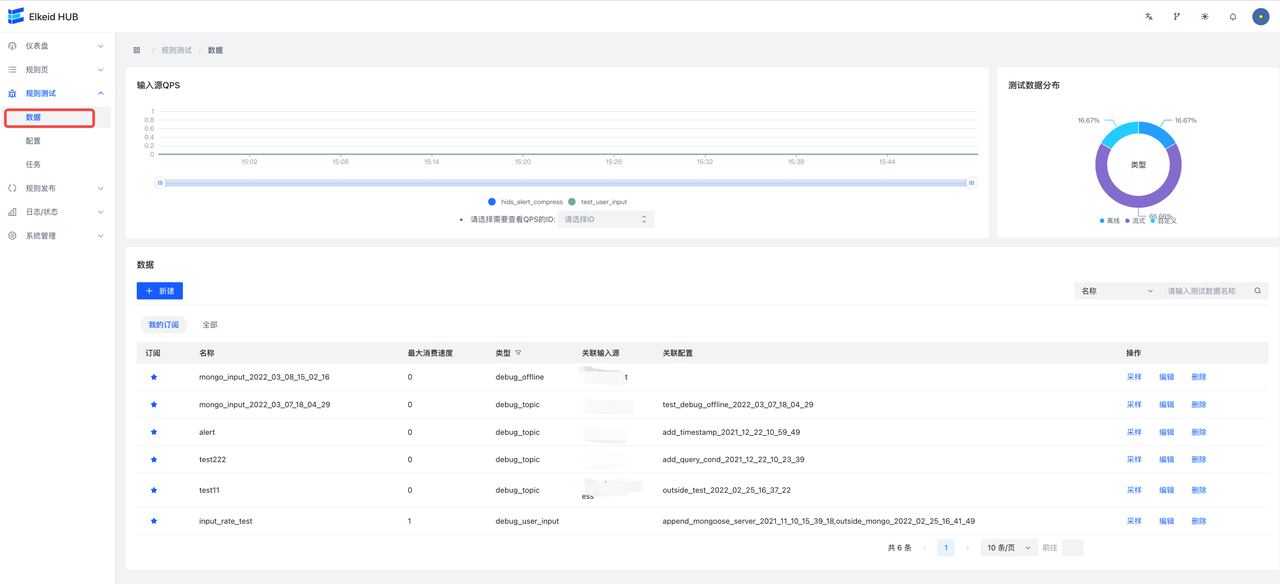

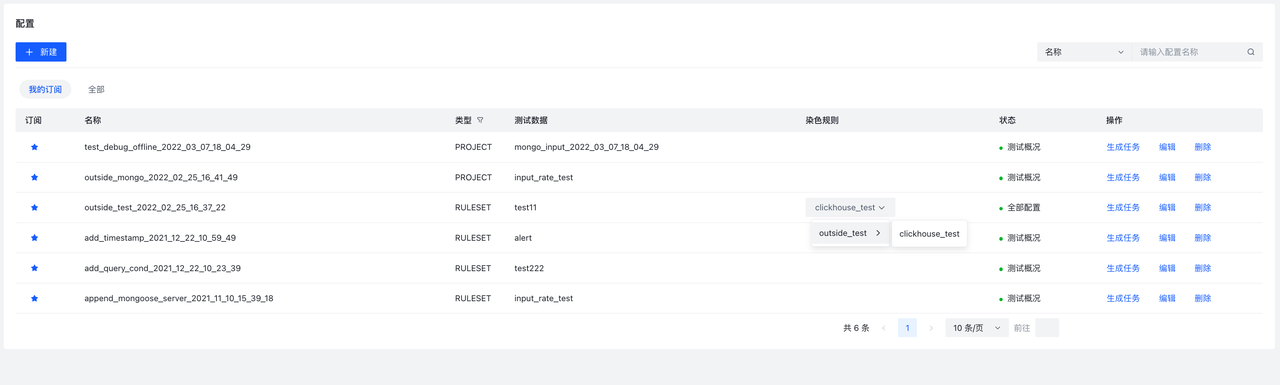

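Elkeid HUB Community Edition Quick Start Tutorial

Prerequisites

Before starting this tutorial, check that:

- HUB has been deployed correctly with elkeidup, following the deployment documentation.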

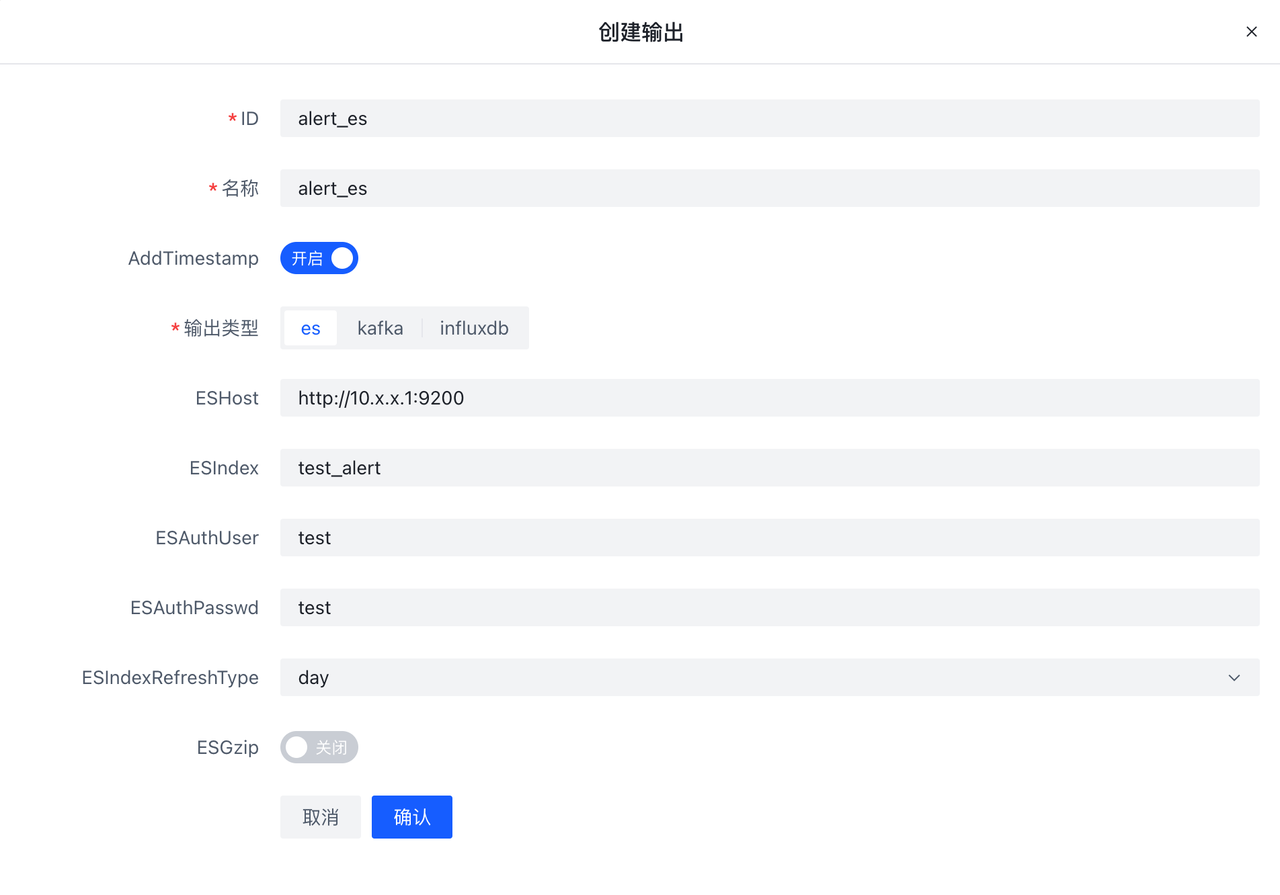

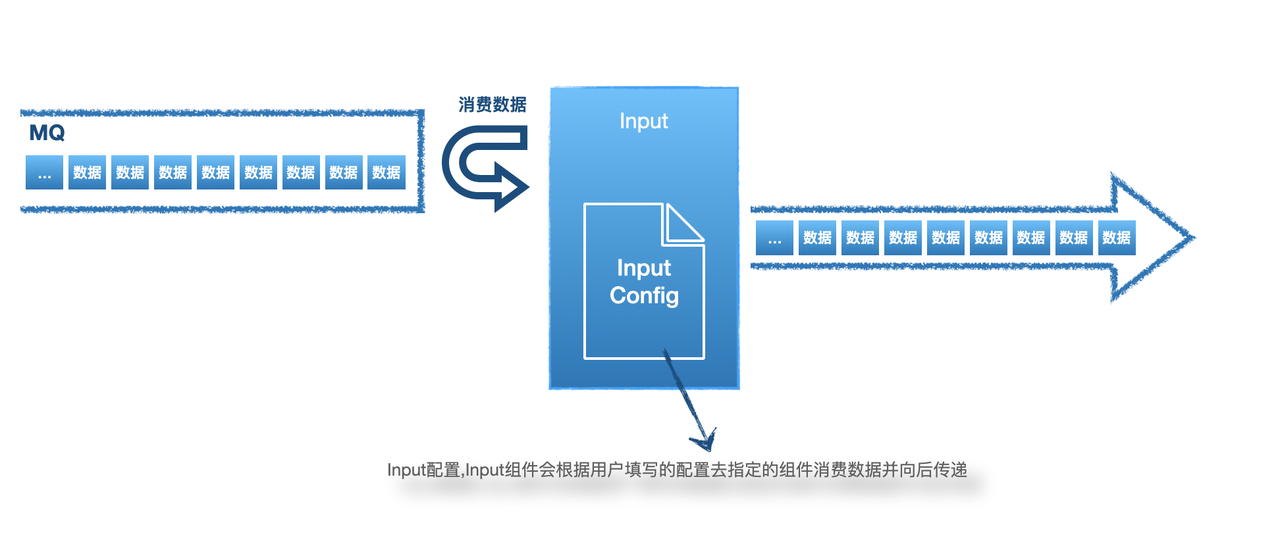

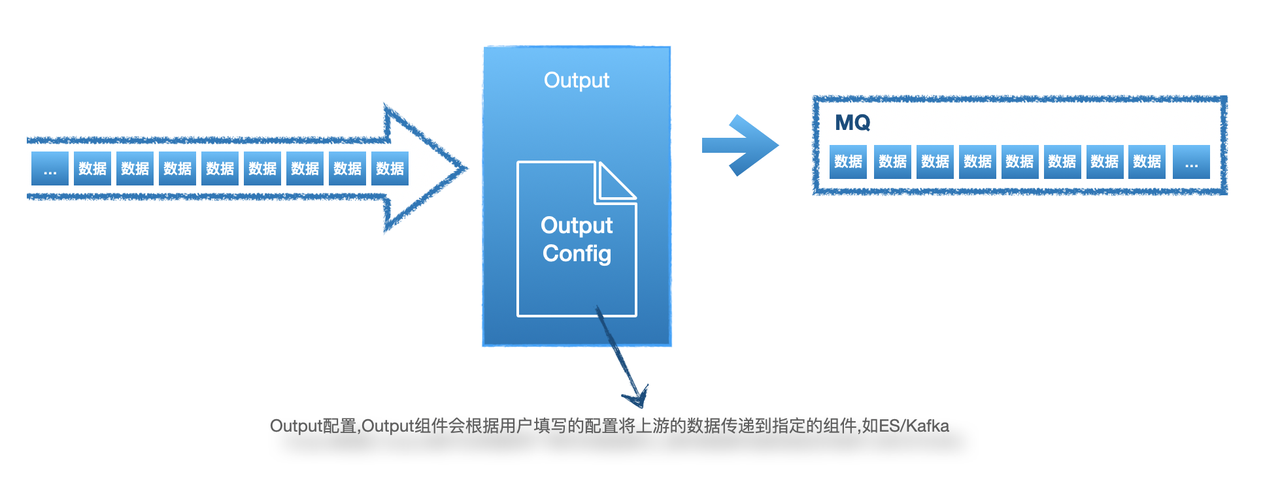

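- At least one usable data input source (Input) and one data storage output (Output) are available. In the HIDS scenario, the input source is the Kafka specified in the AgentCenter configuration file, and the output can be ES or Kafka. This tutorial uses ES as the example.

Note: the Community Edition ships with preconfigured input sources (hids, k8s) that are visible in the front end for convenient testing.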

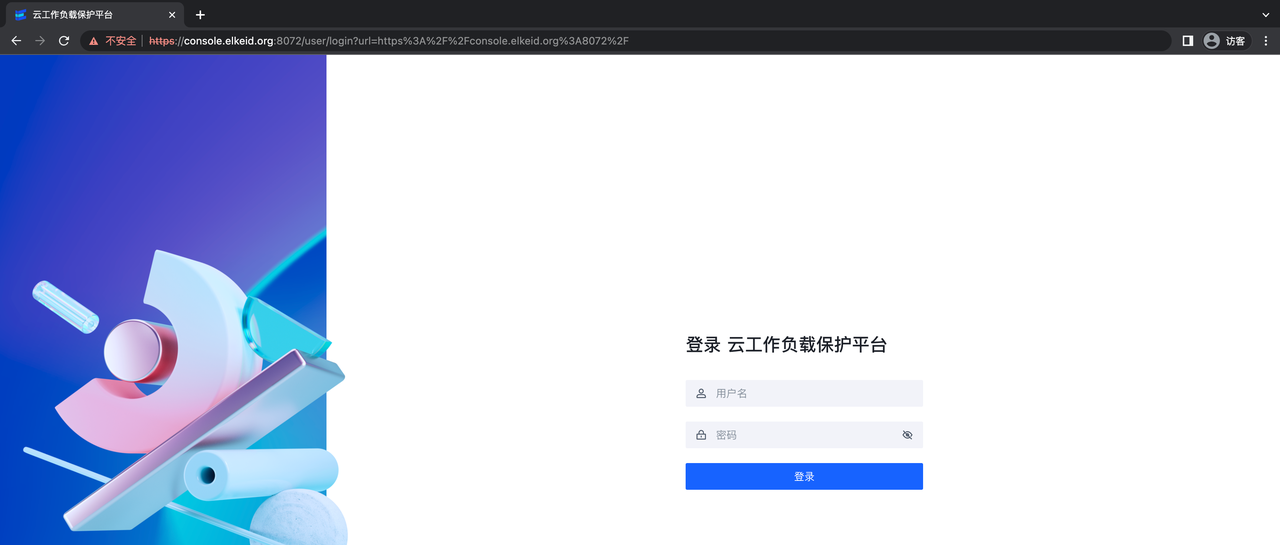

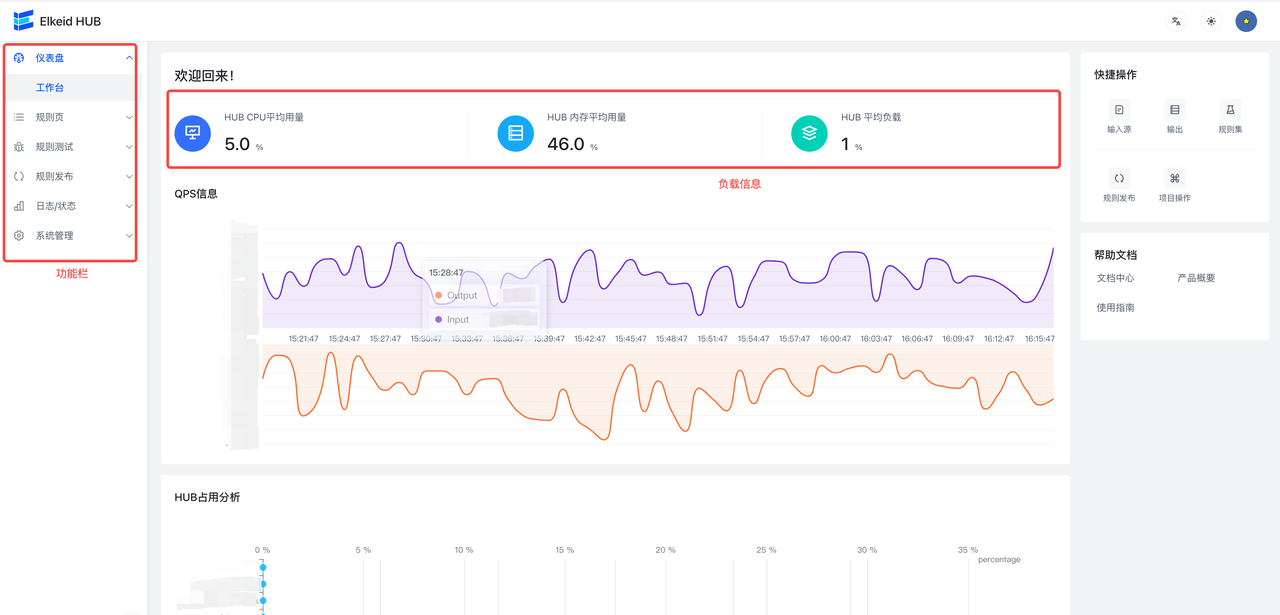

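Step 1. Access and log in to the front end

Access the front end using the IP of the machine where it is deployed. Log in with the username and password created by the elkeidup deployment.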

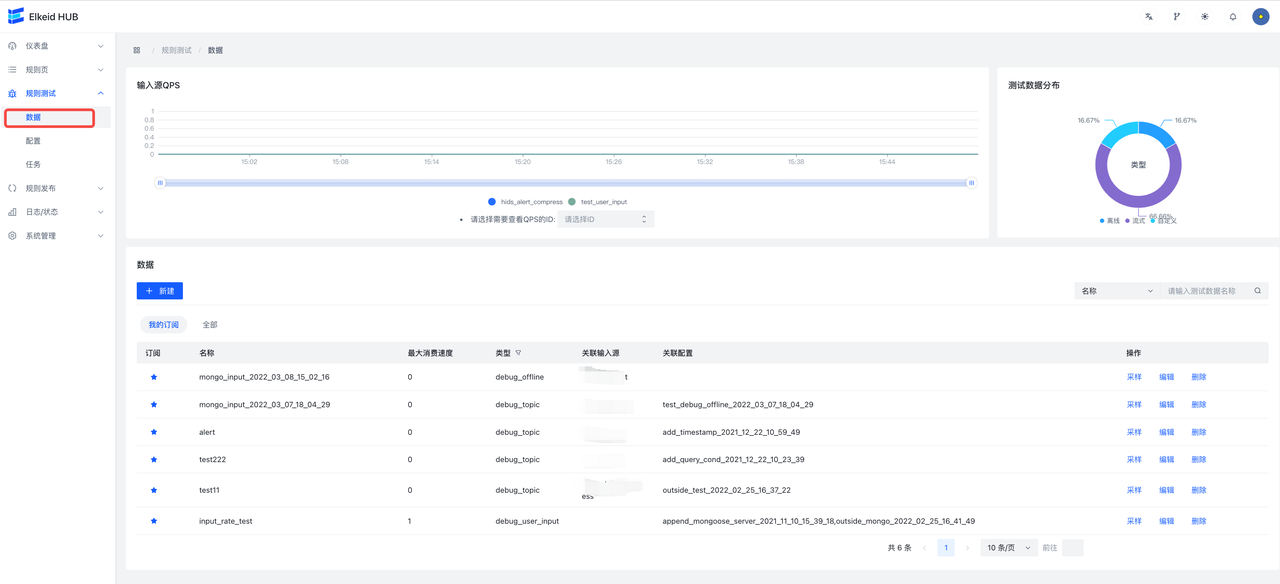

Step 2. Write policies

Basic concepts

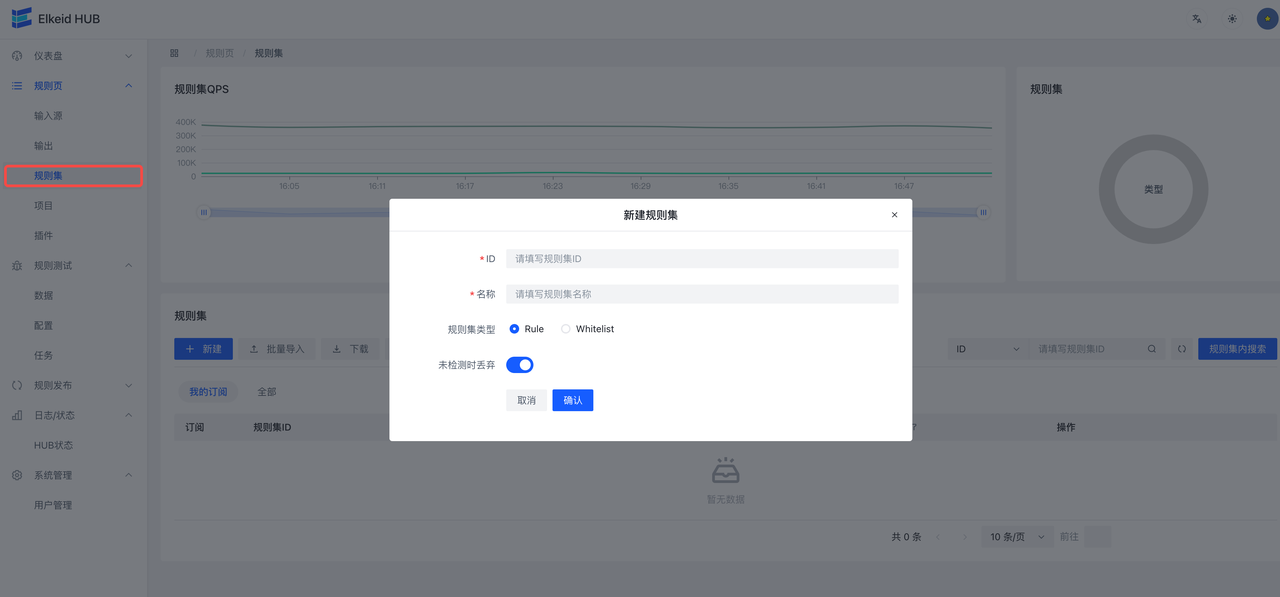

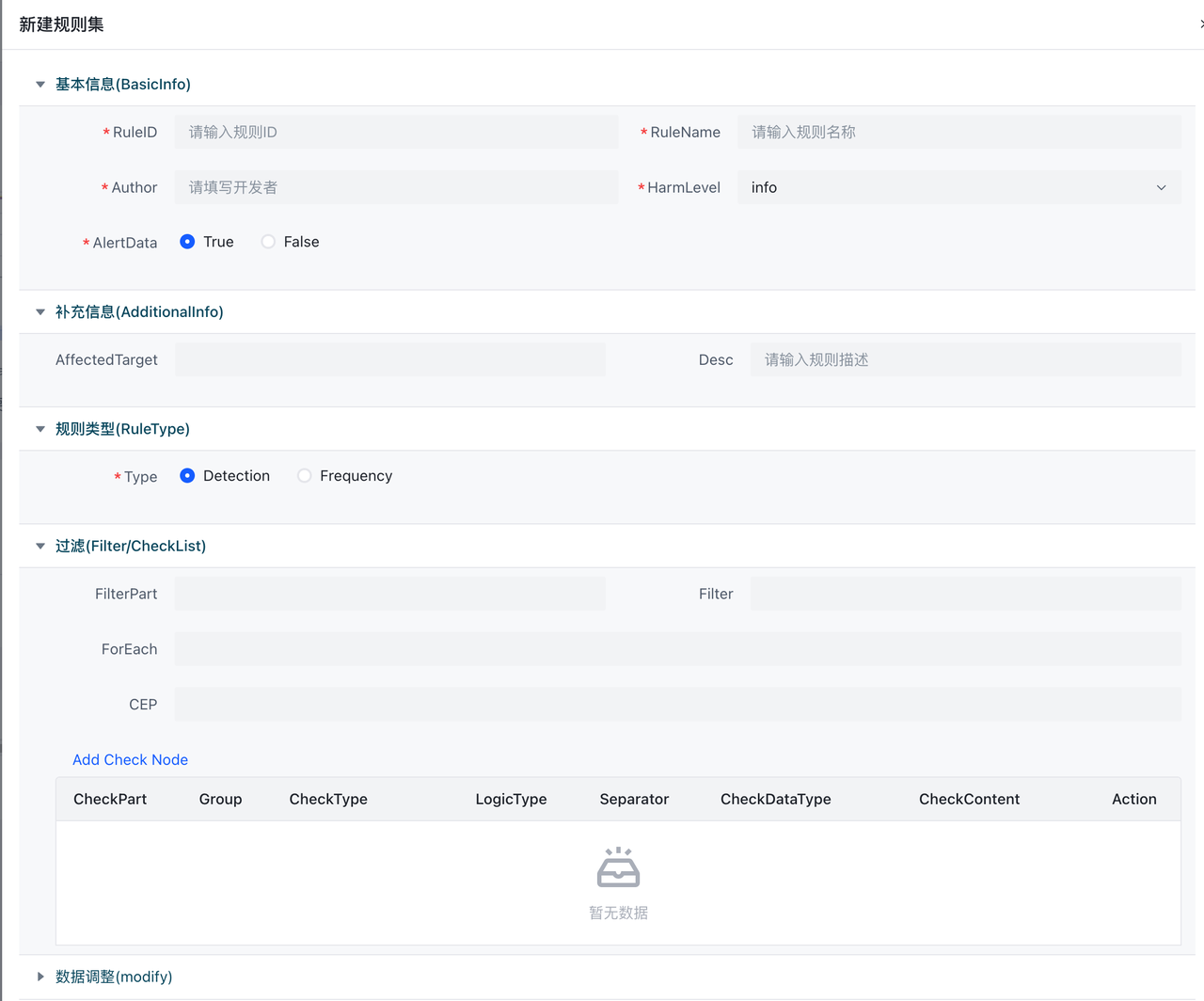

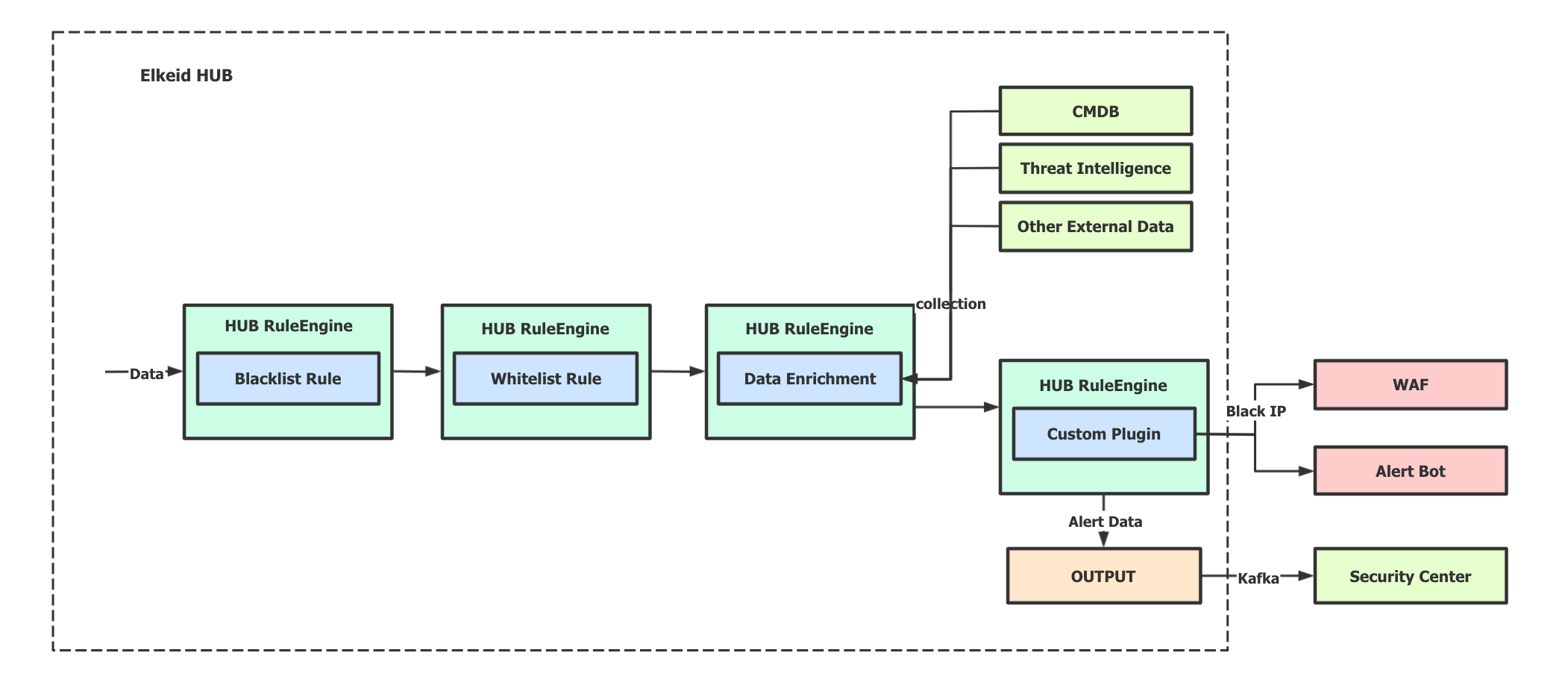

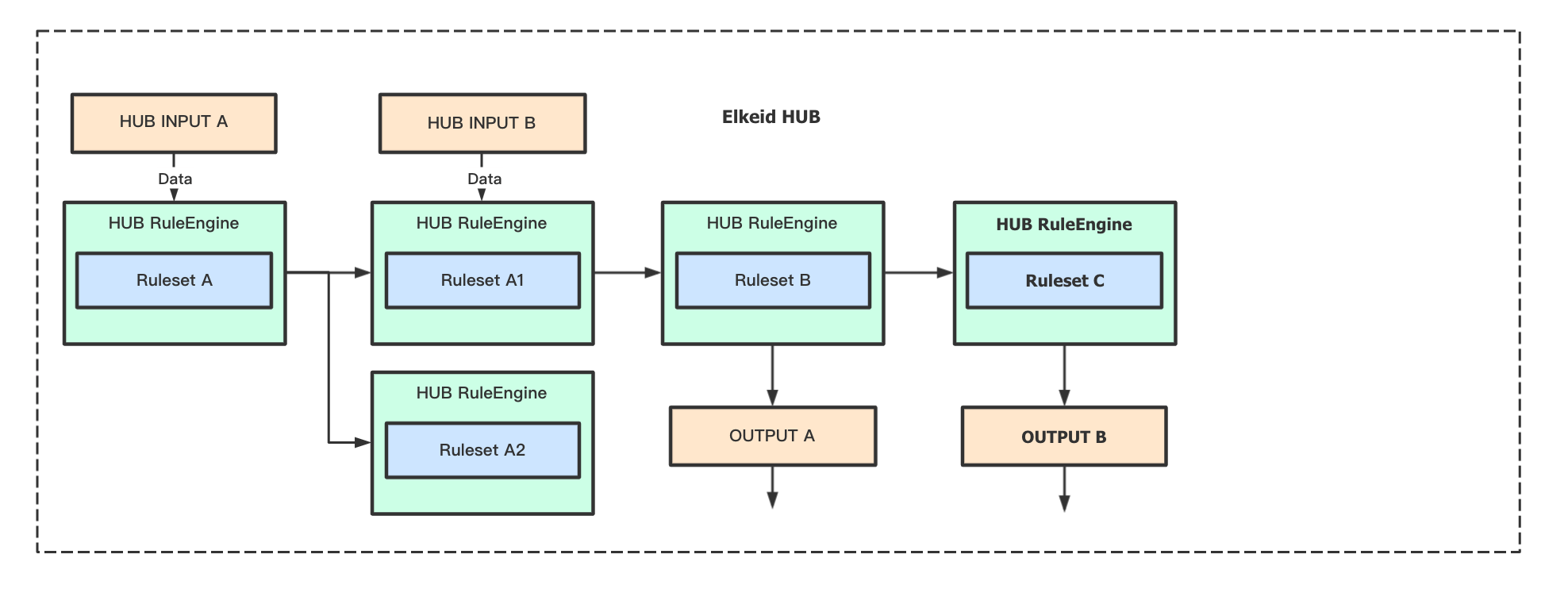

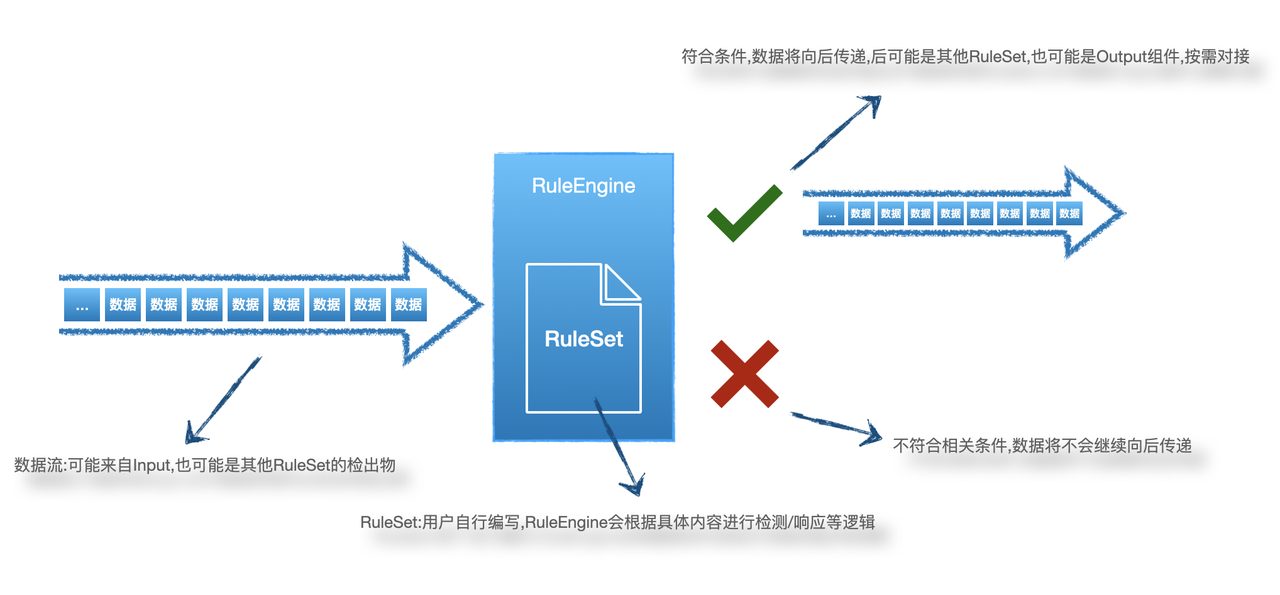

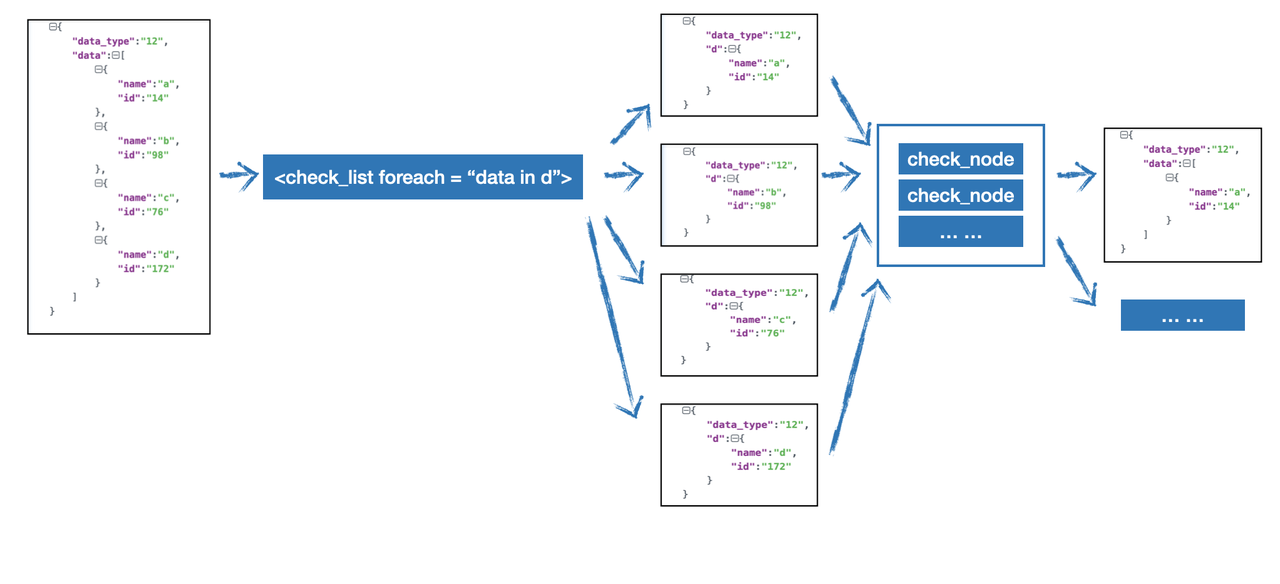

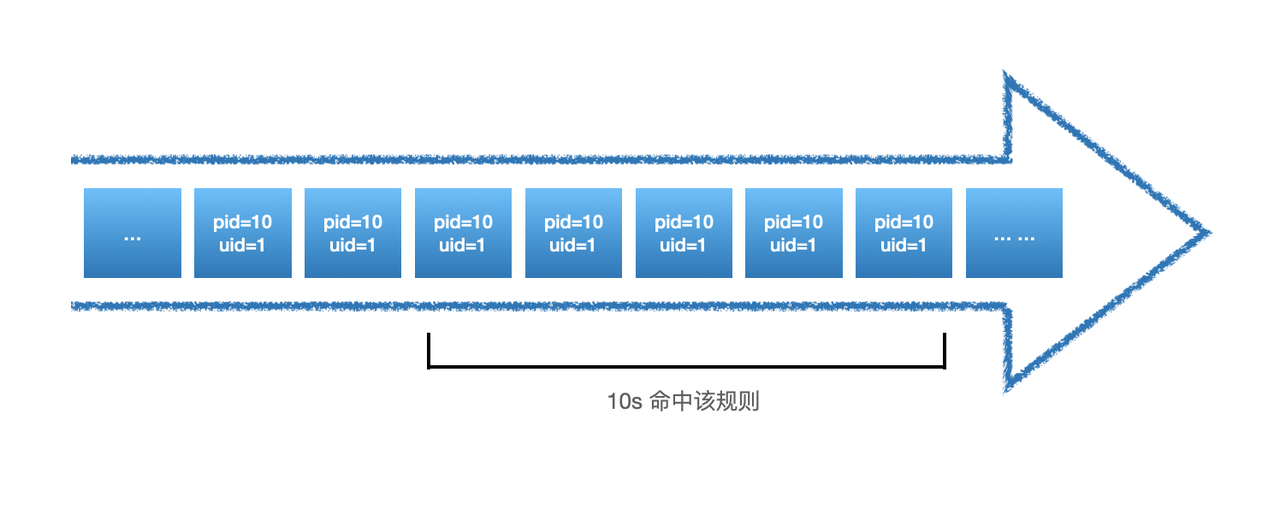

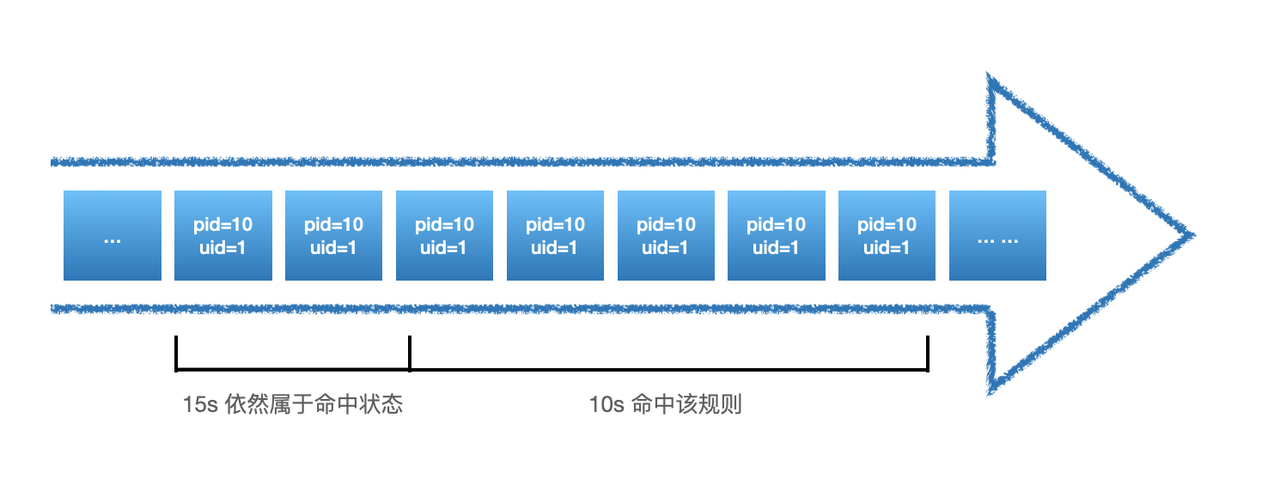

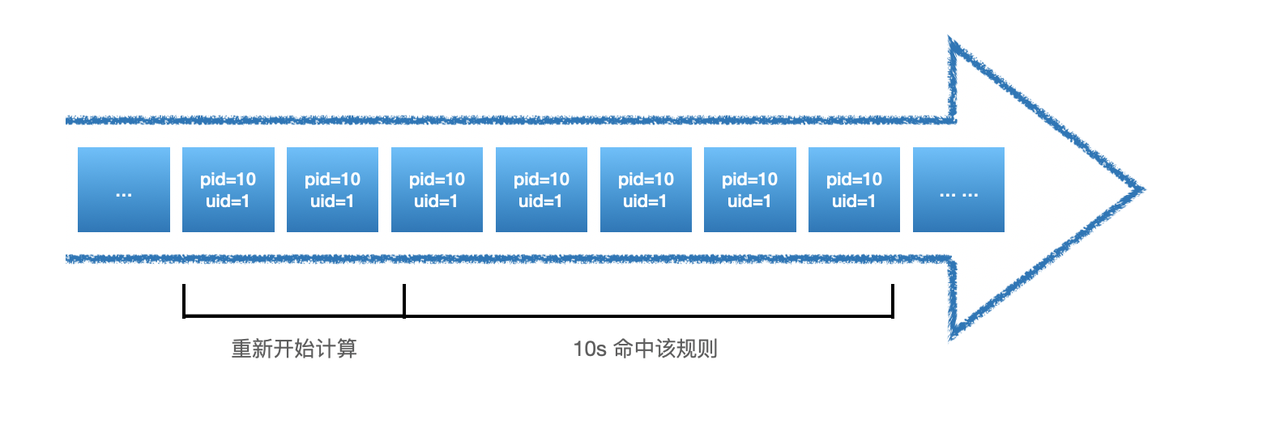

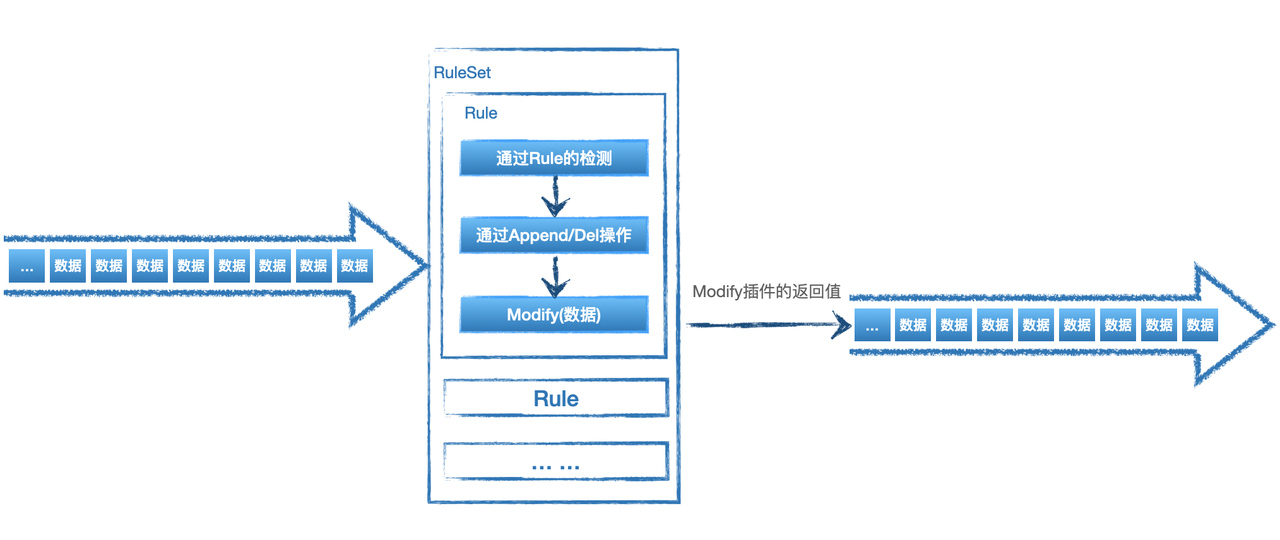

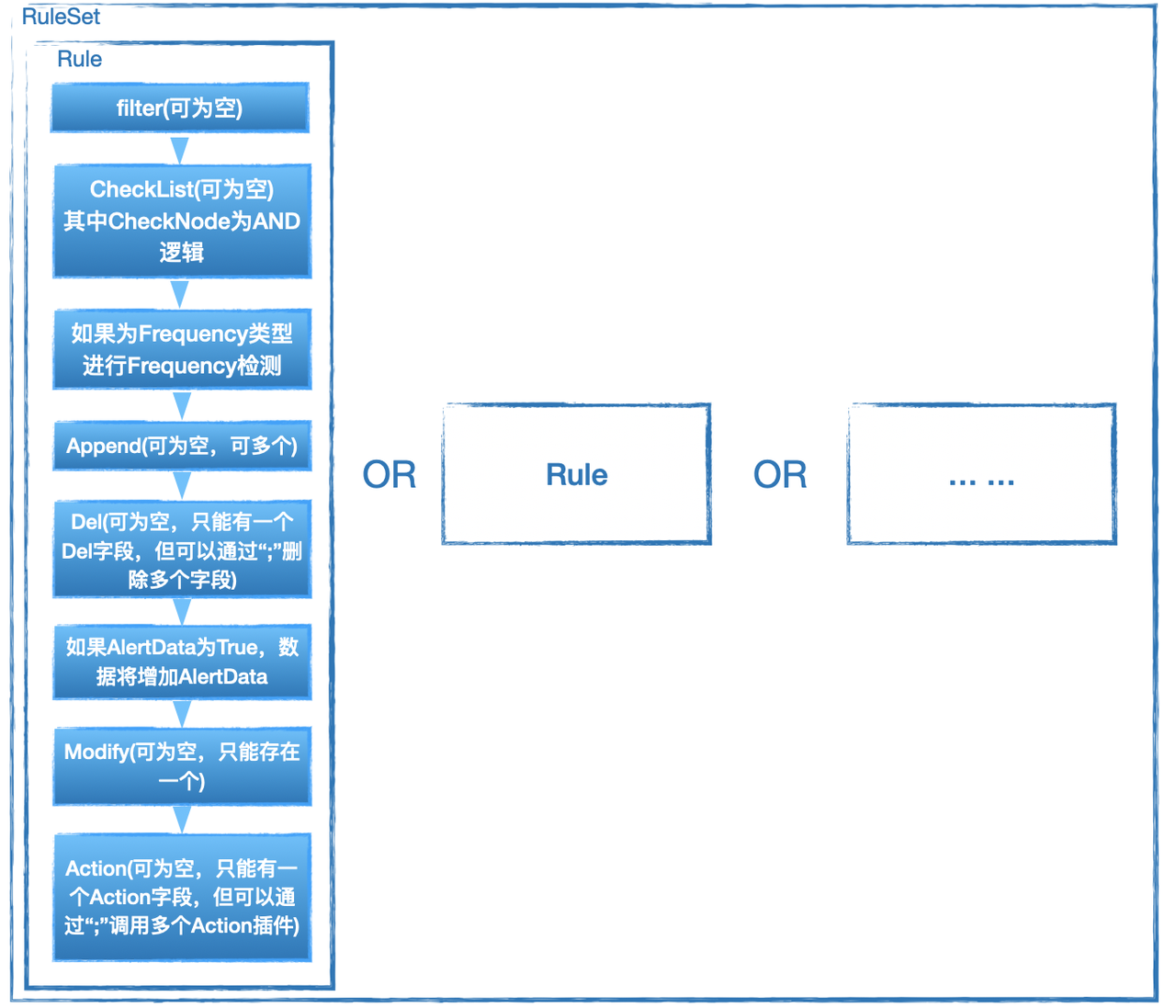

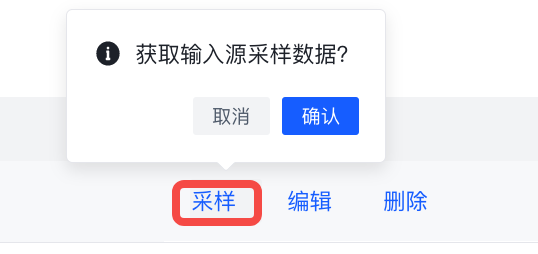

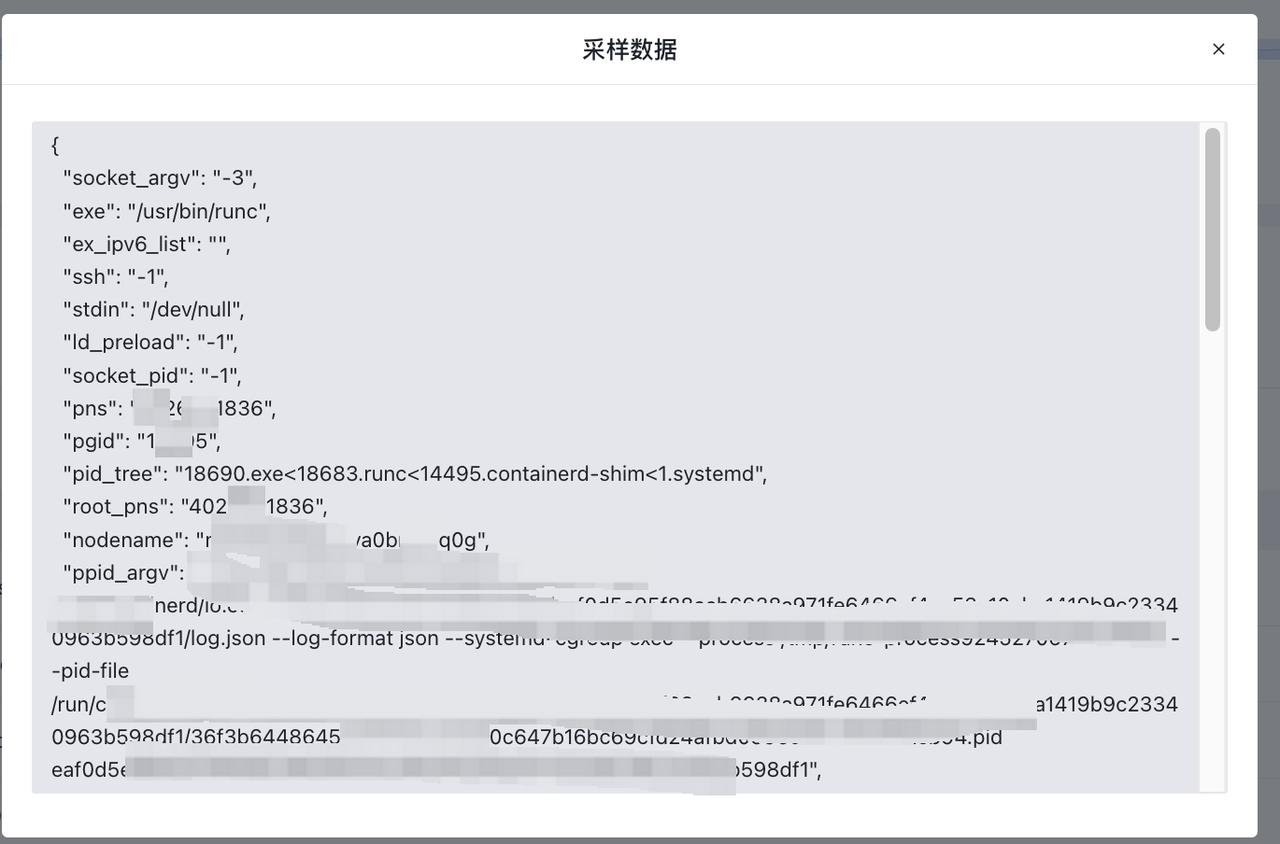

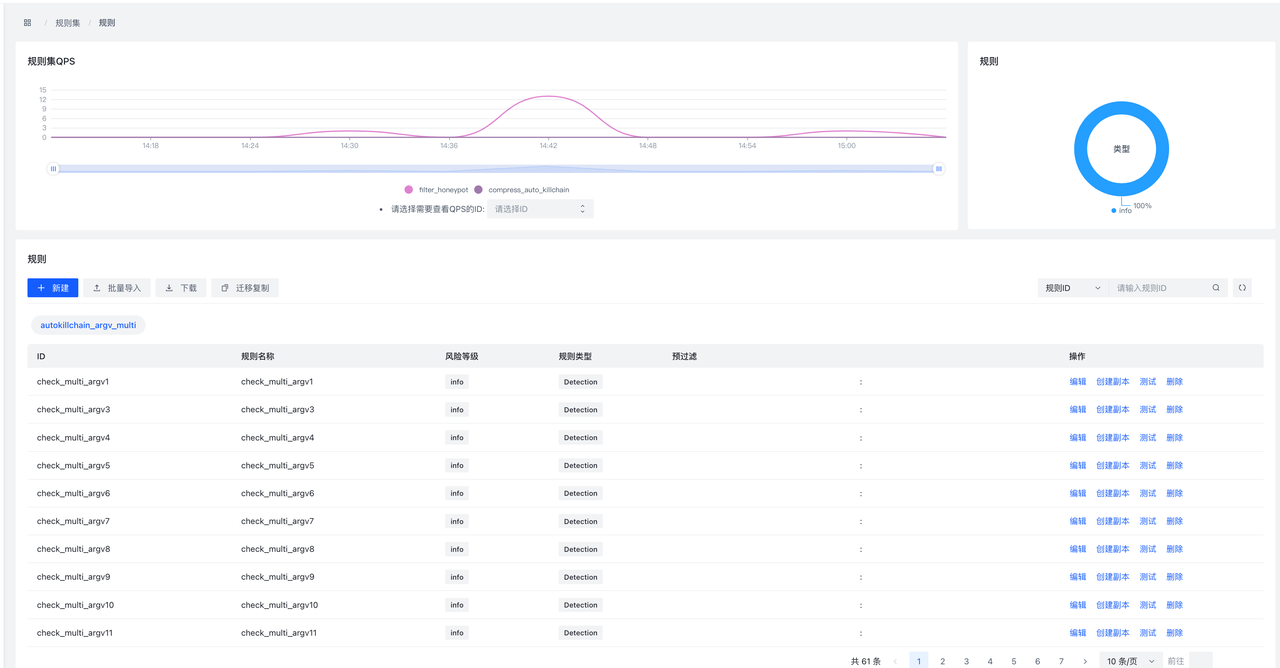

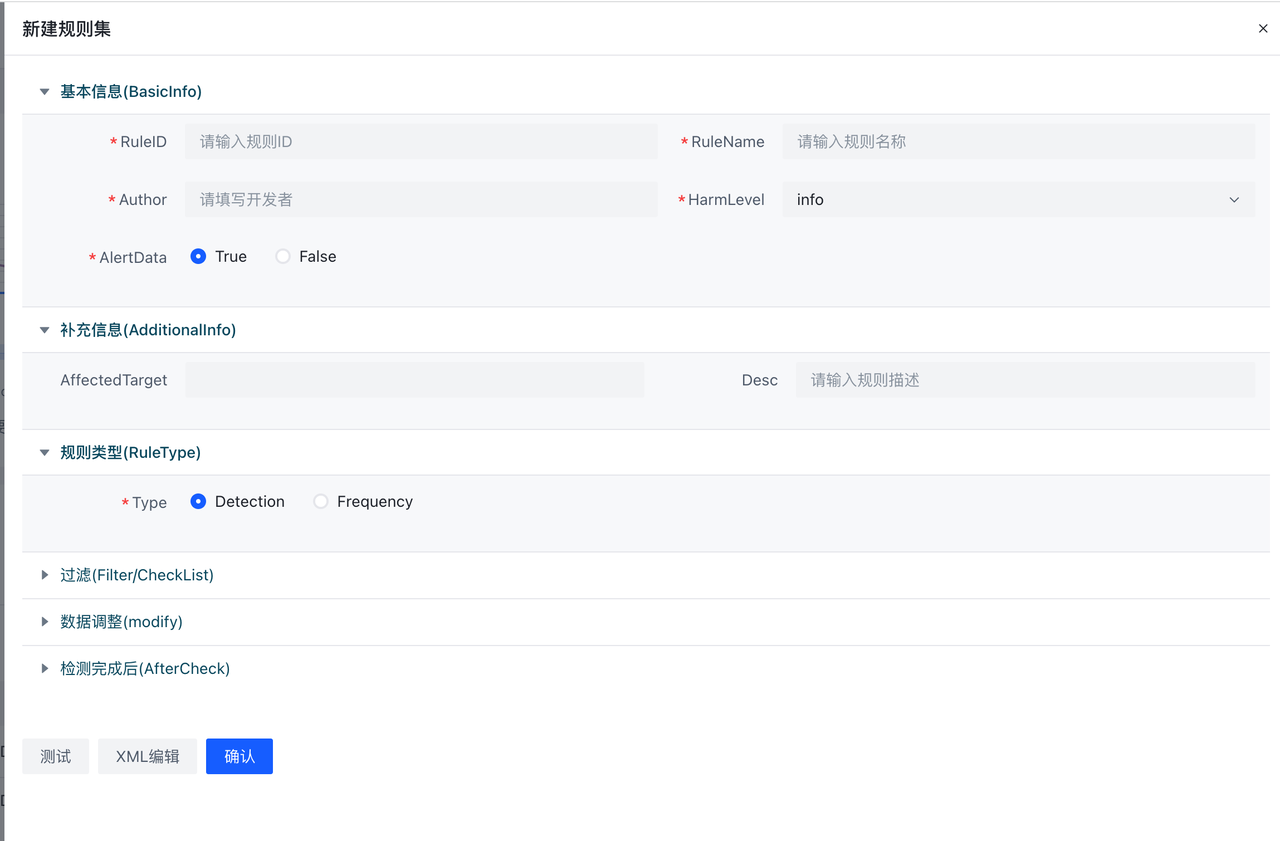

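RuleSet is the core of HUB's detection and response. It inspects input data according to business needs and, together with plugins, can push alerts directly or process messages further. If you have additional business requirements, you may therefore need to write some rules yourself.

A RuleSet is a set of rules described in XML. There are two RuleSet types: rule and whitelist. With rule, data that is detected continues downstream; with whitelist, data that is detected does not continue downstream. Continuing downstream is equivalent to a detection, so whitelist is generally used for whitelisting.

A RuleSet can contain one or more rules, combined with OR; a single record can hit several rules at the same time.

Writing rules in the front end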

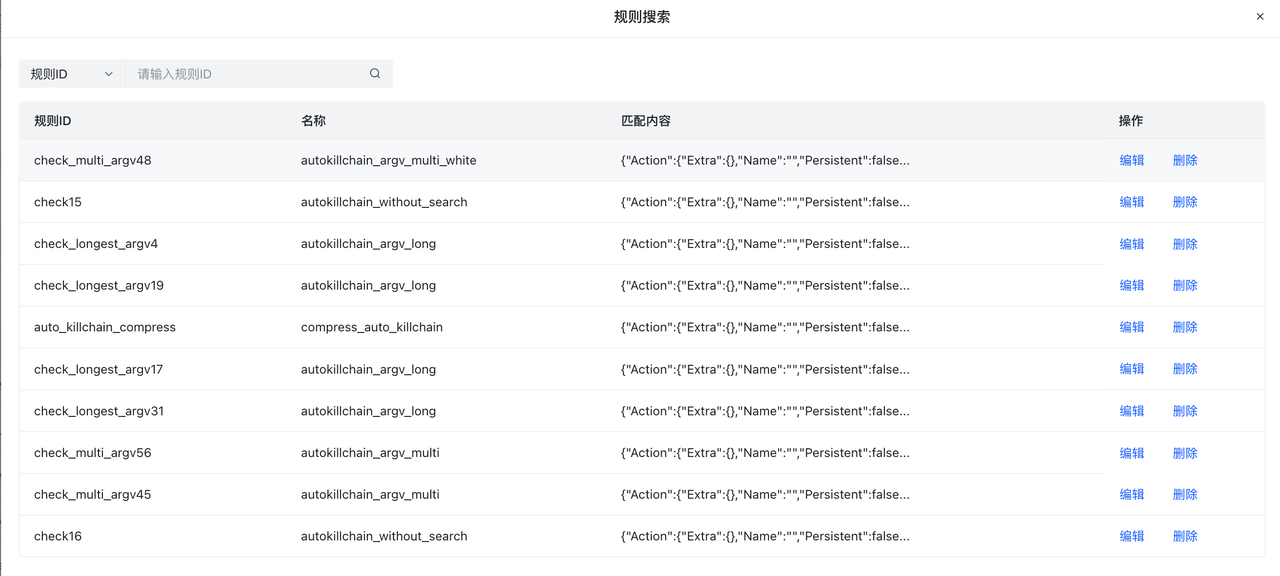

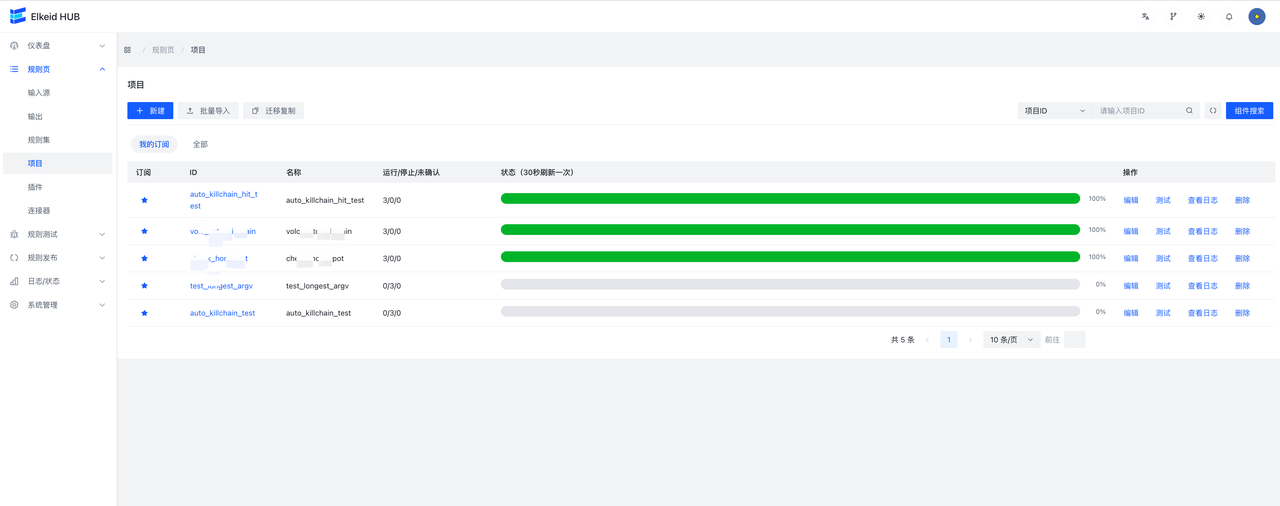

Go to Rules -> RuleSets to see your favorited RuleSets and all RuleSets.

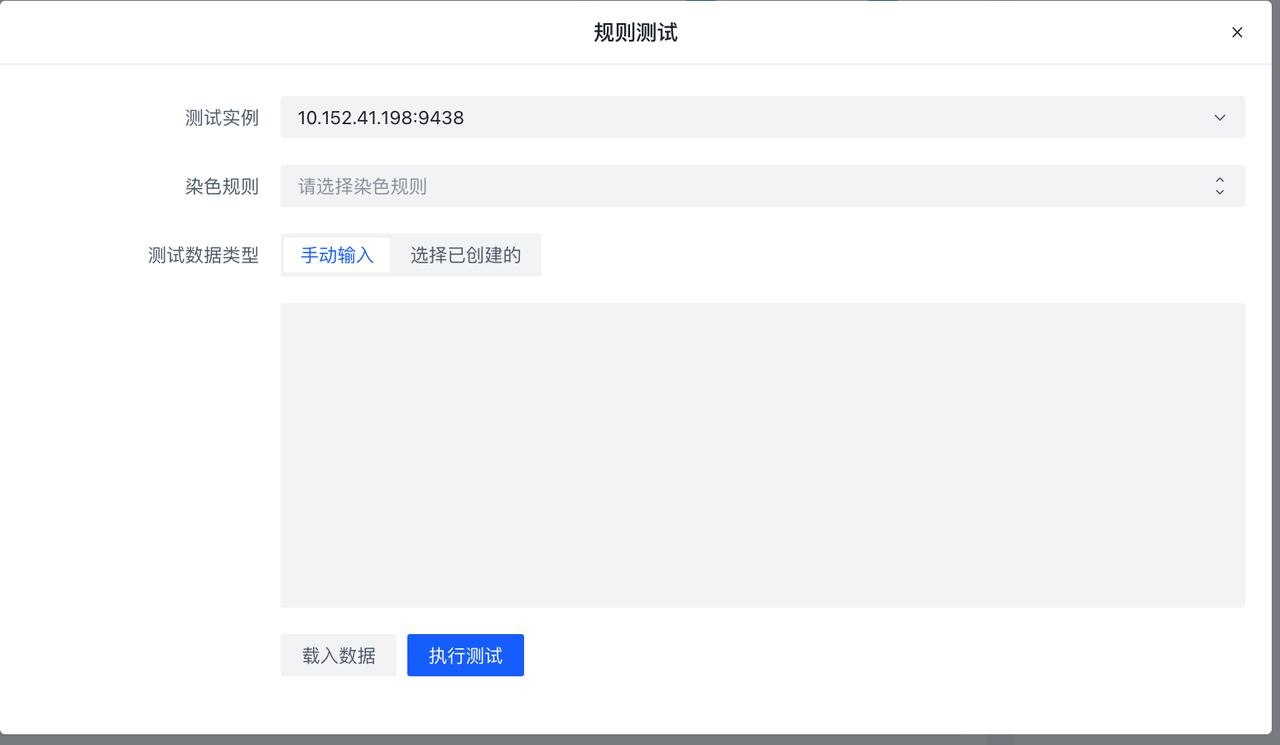

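When Type is Rule, an "undetected discard" field appears. It controls whether data that is not detected is discarded. The default is True: undetected data is discarded and not passed downstream. Set it to True here. After creating the RuleSet, click the Rules button on the new entry to open its details, then click New in the RuleSet details to open the form editor.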
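To make the rule/whitelist semantics and the undetected-discard flag concrete, here is a simplified model in shell. It is not HUB's implementation: real rules check individual fields with CheckNodes, while this sketch (`ruleset_decision` is hypothetical) tests each rule as an extended regex against a whole record string.

```shell
#!/usr/bin/env bash
# Simplified model of RuleSet semantics (not HUB code).
# Usage: ruleset_decision TYPE UNDETECTED_DISCARD RECORD RULE_REGEX...
# Rules are OR-ed: the first regex that matches counts as a hit.
#   type=rule:      hit -> pass (a detection); miss -> drop if undetected_discard=true,
#                   otherwise pass unchanged.
#   type=whitelist: hit -> drop; miss -> pass.
ruleset_decision() {
  local type="$1" undetected_discard="$2" record="$3"; shift 3
  local hit=0 rule
  for rule in "$@"; do
    if printf '%s\n' "$record" | grep -Eq -- "$rule"; then hit=1; break; fi
  done
  case "$type" in
    rule)
      if [ "$hit" -eq 1 ]; then echo pass
      elif [ "$undetected_discard" = "true" ]; then echo drop
      else echo pass; fi ;;
    whitelist)
      if [ "$hit" -eq 1 ]; then echo drop; else echo pass; fi ;;
    *) echo "unknown type: $type" >&2; return 1 ;;
  esac
}
```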

HUB ships with dozens of rules enabled by default; you can review them as a reference when writing your own policies.

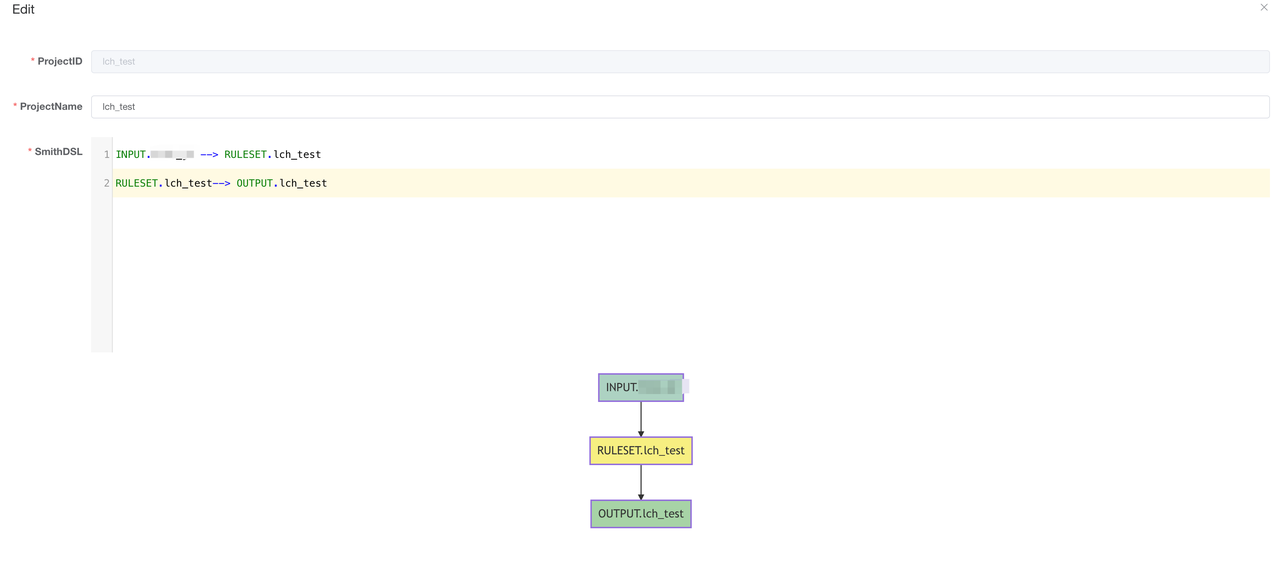

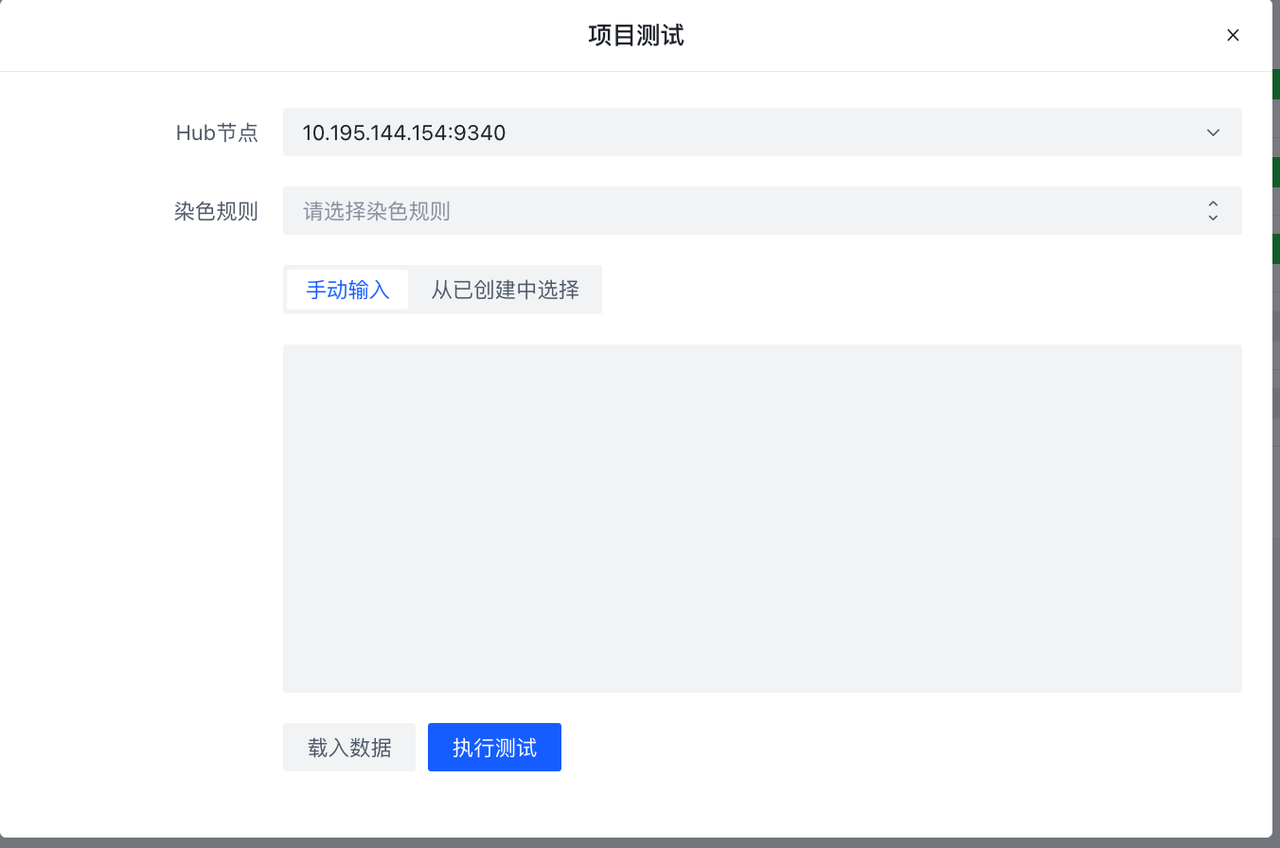

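You can also write rules by following the Elkeid HUB Community Edition User Guide. Once the rules are written, create a project on the Projects page to combine the rules with inputs and outputs.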

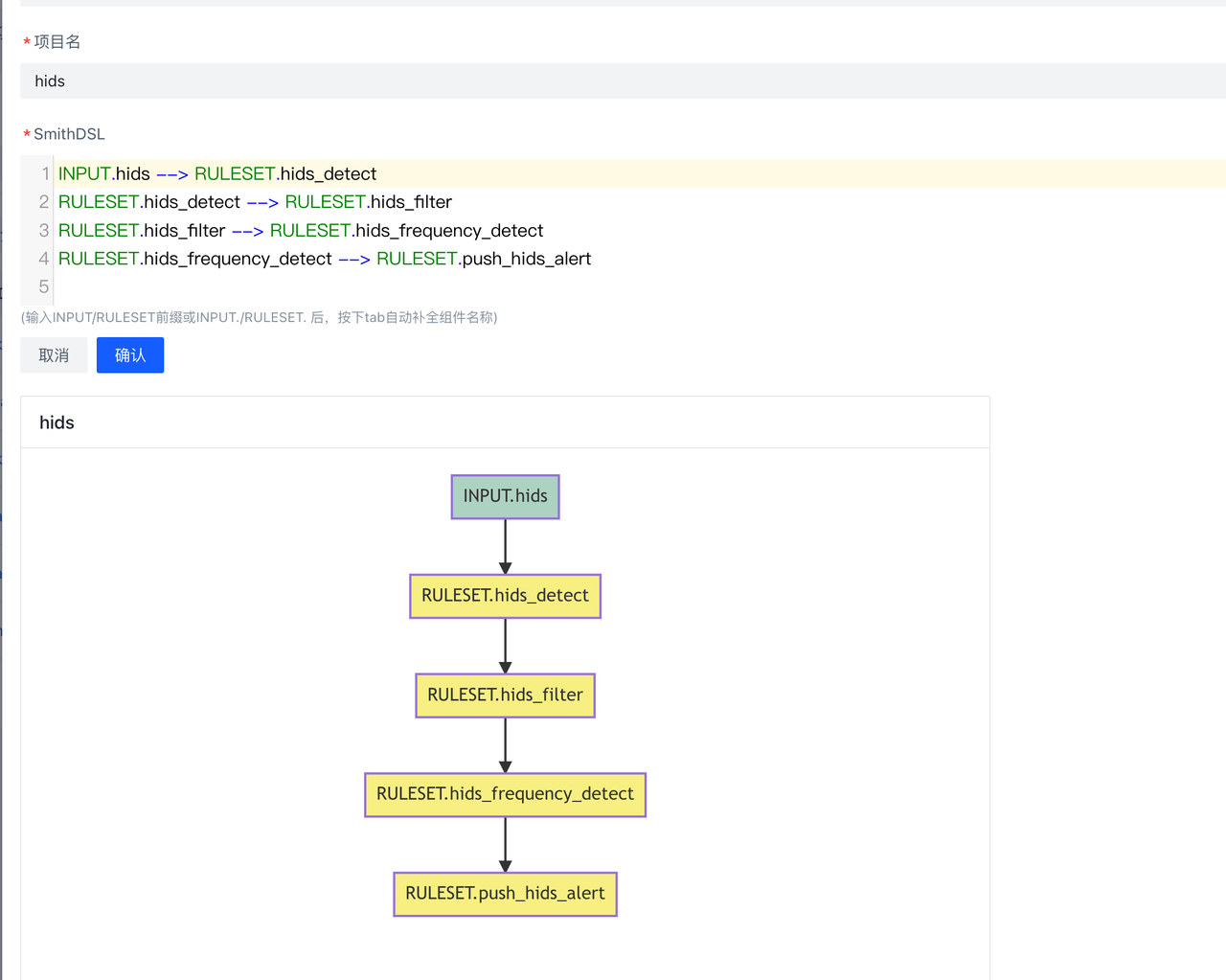

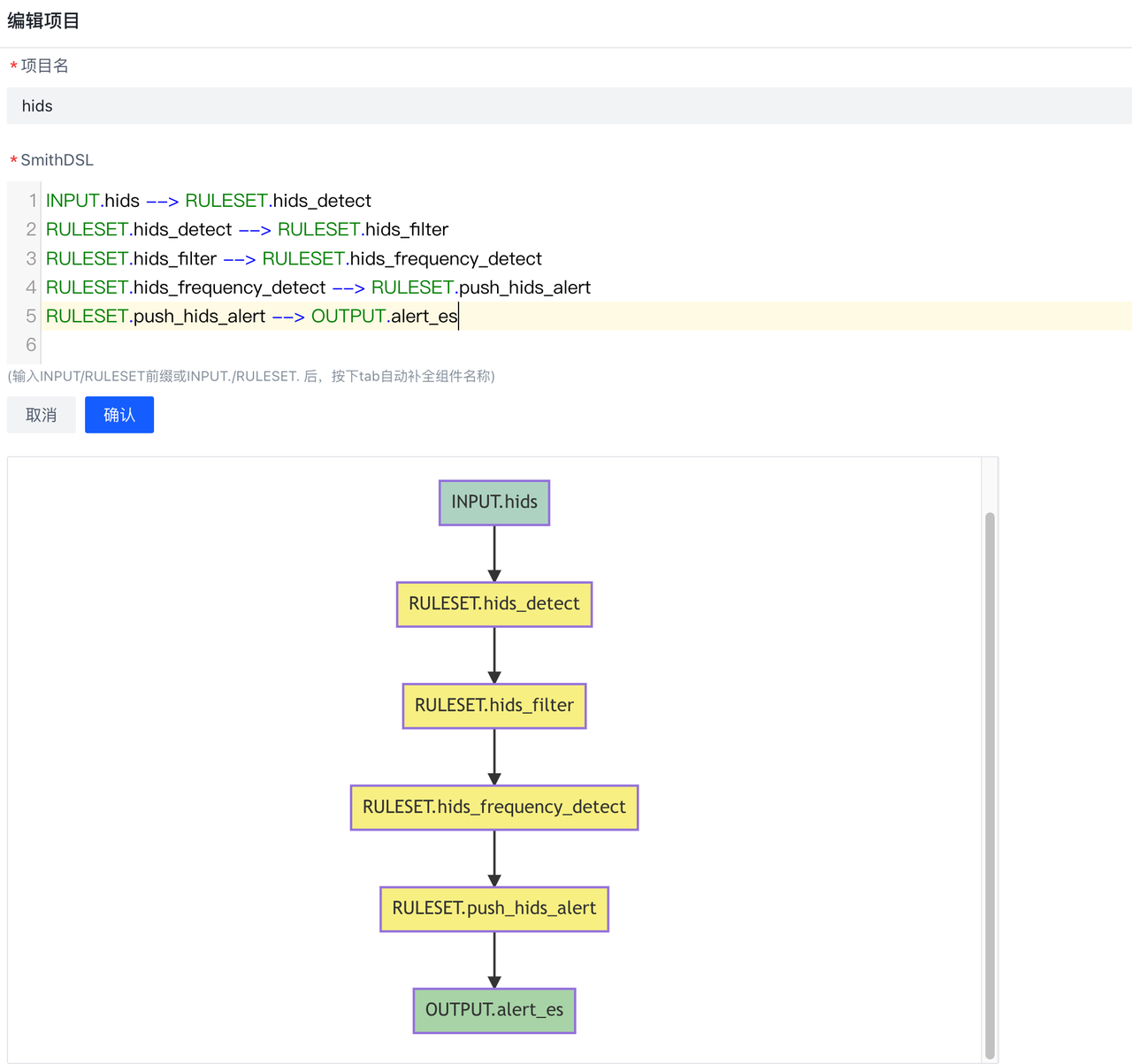

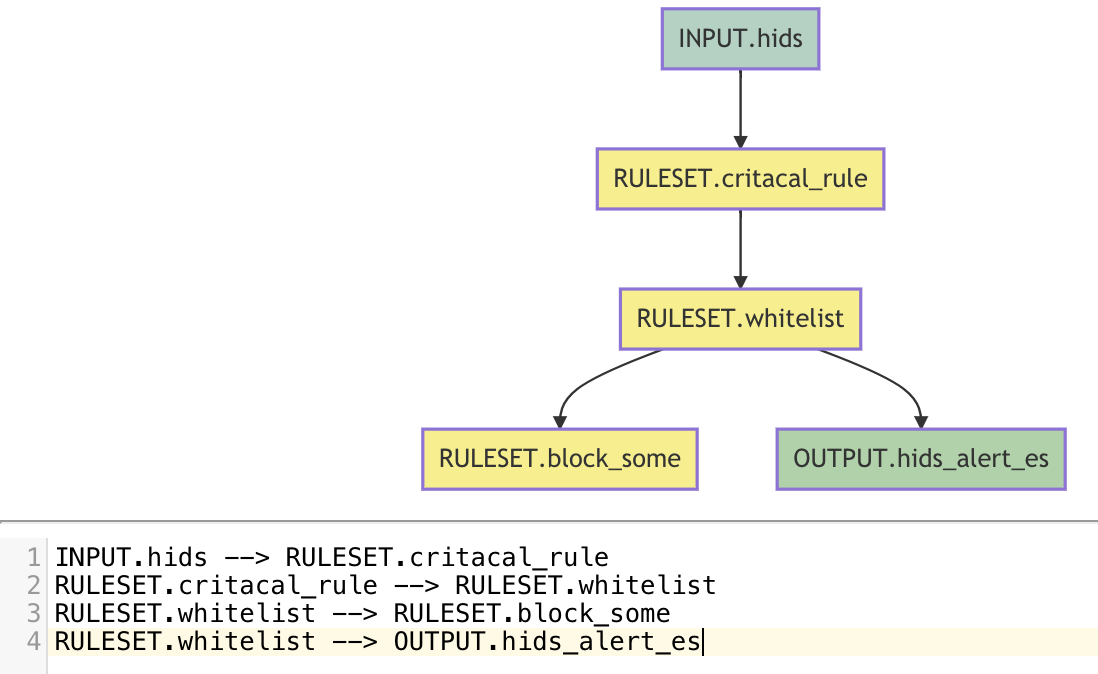

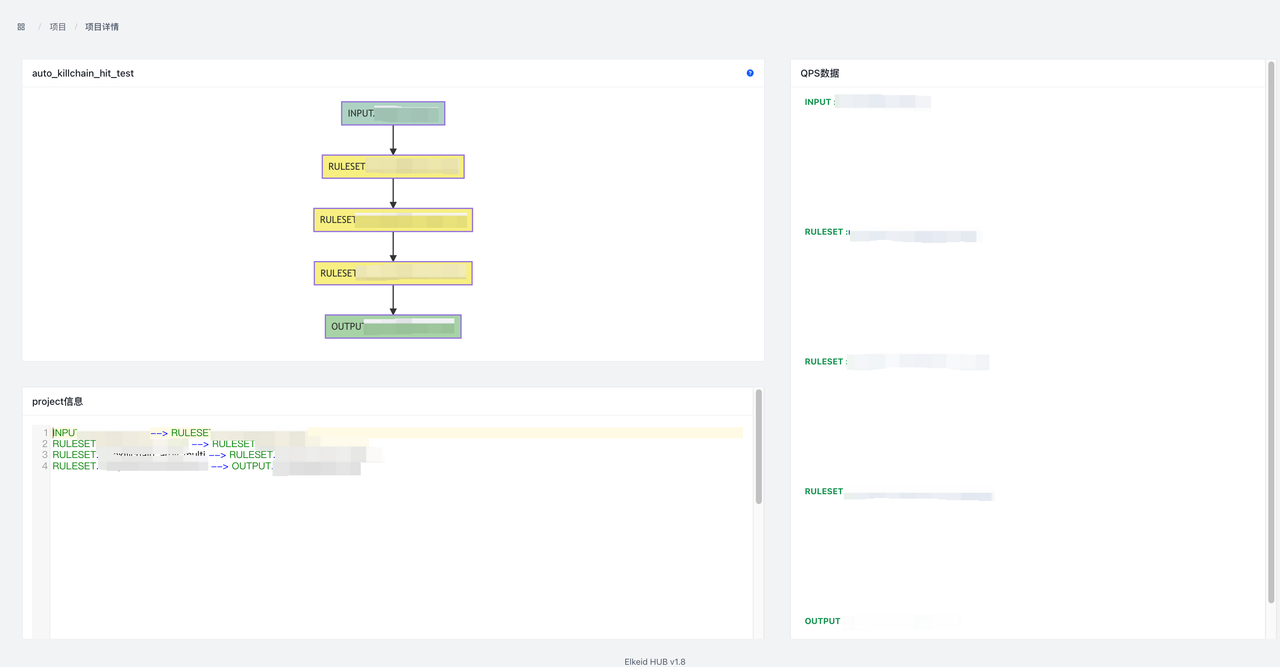

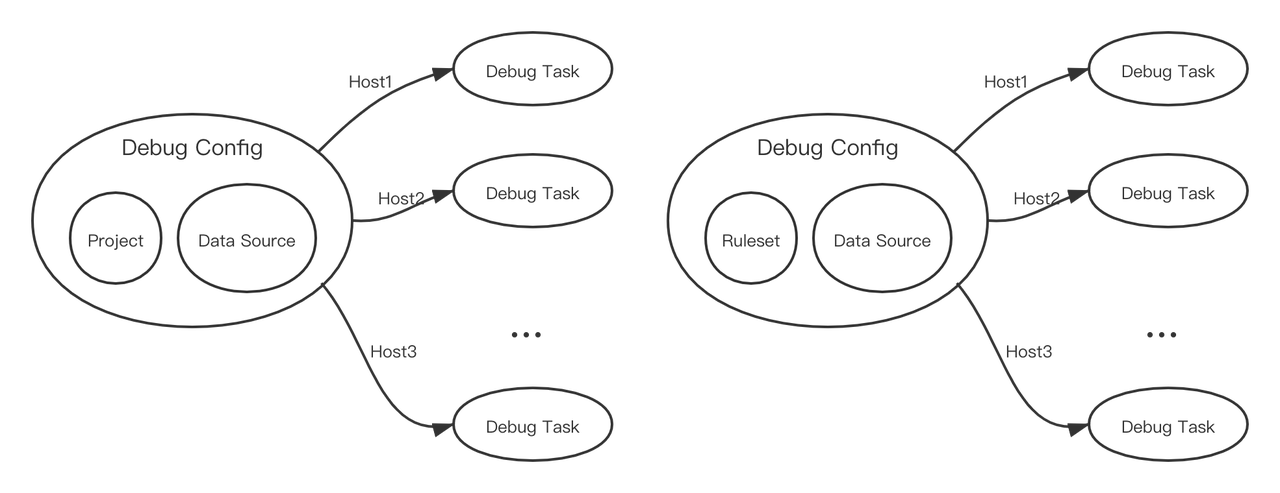

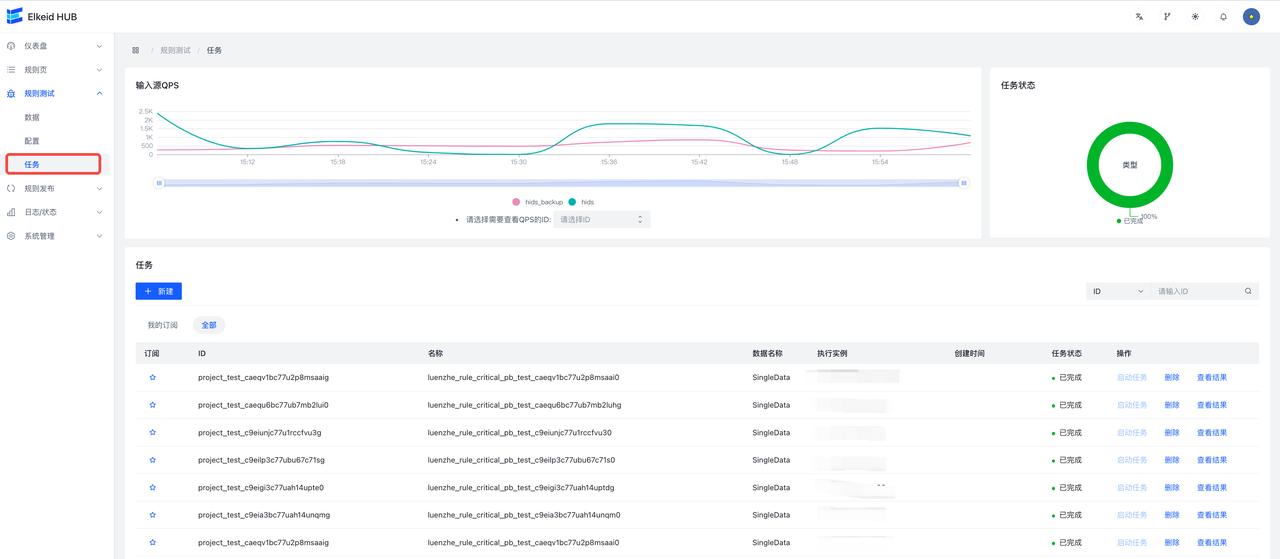

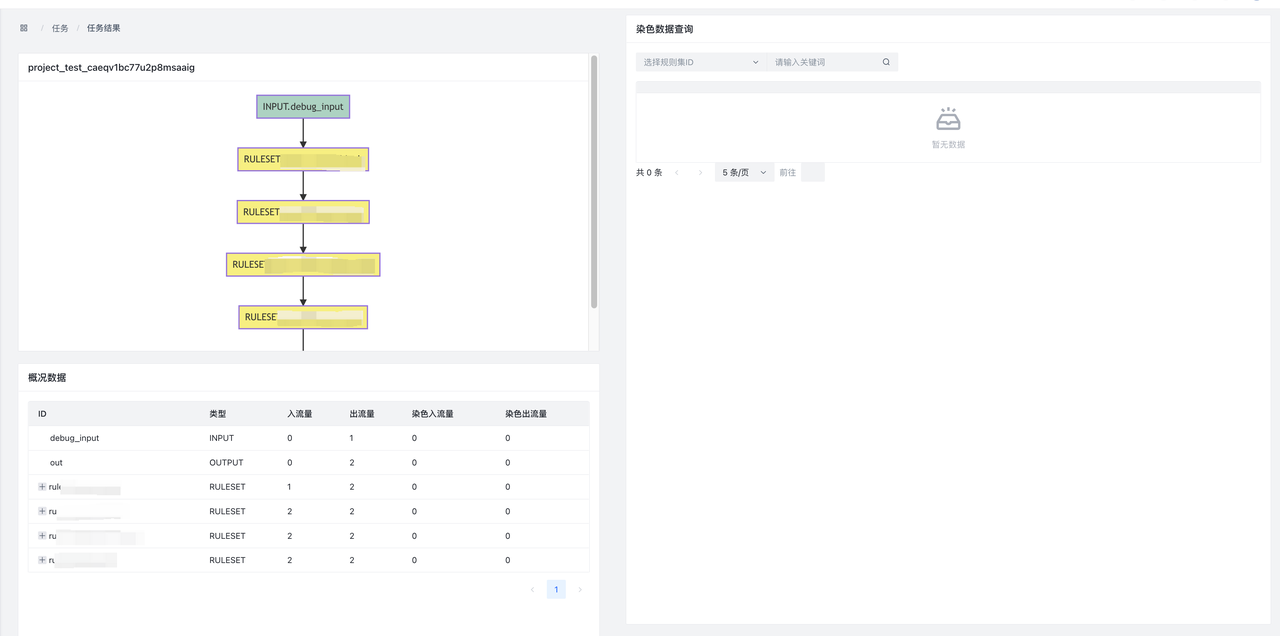

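The figure below shows how HIDS alerts are processed: data passes through rules such as RULESET.hids_detect and RULESET.hids_filter in the order defined by the DSL, and RULESET.push_hids_alert finally pushes it to the CWPP console.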

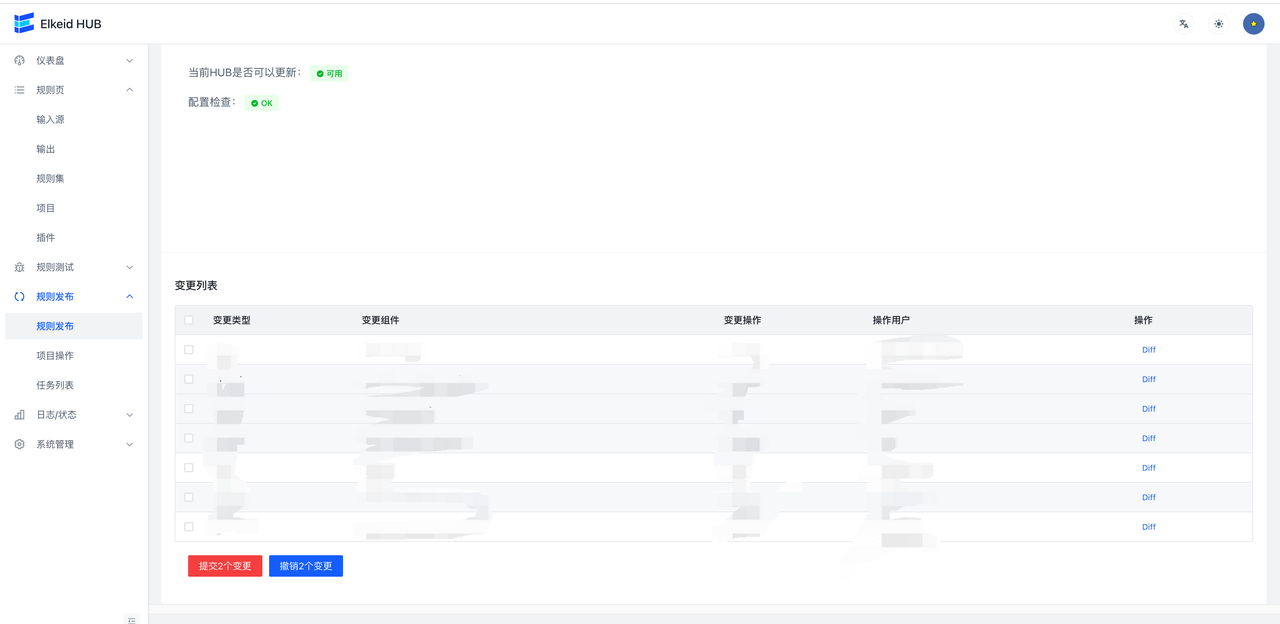

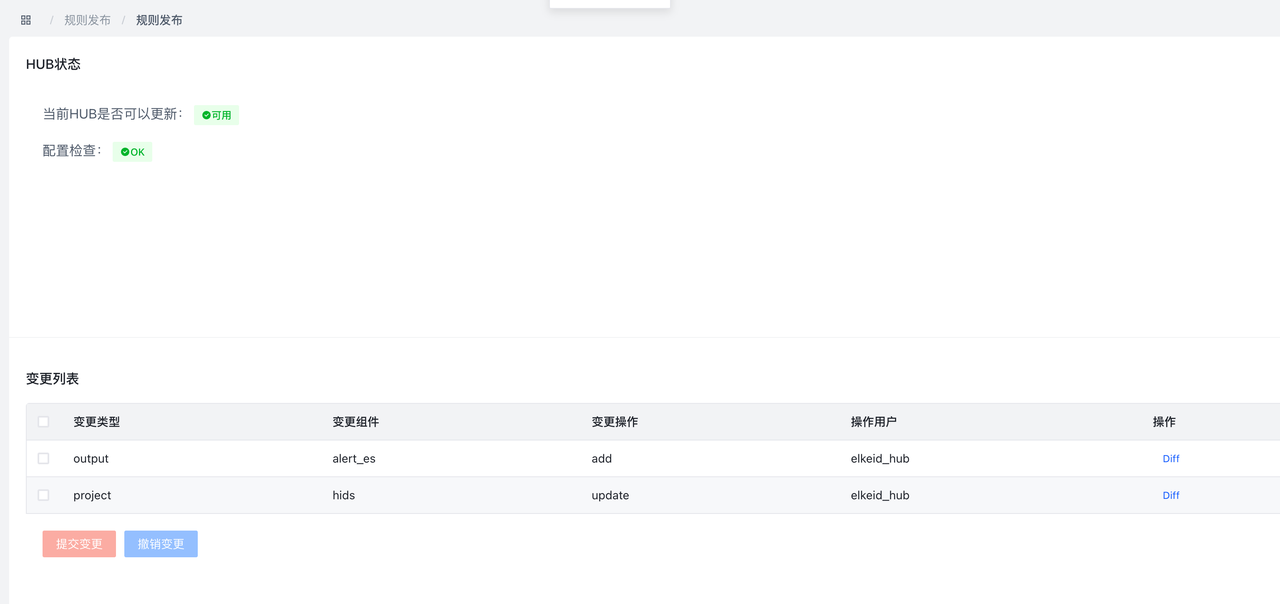

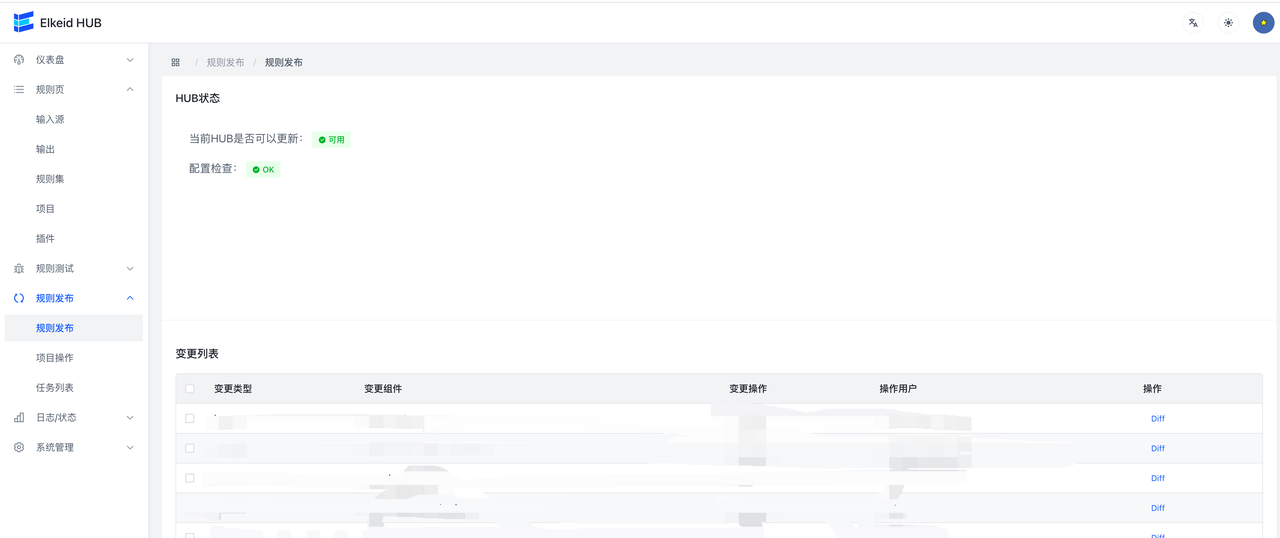

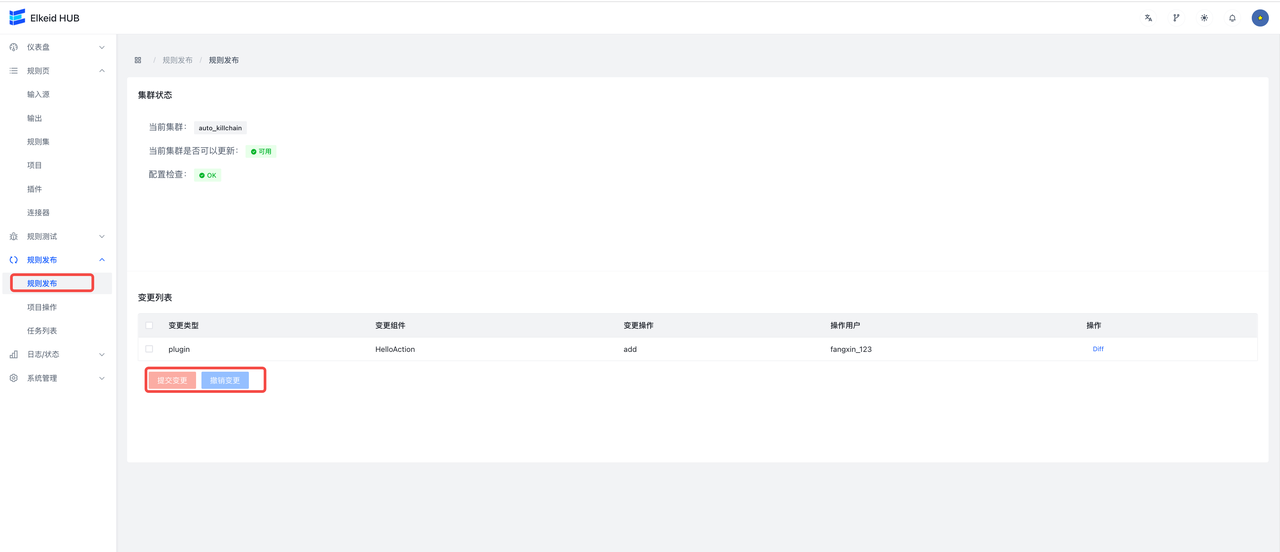

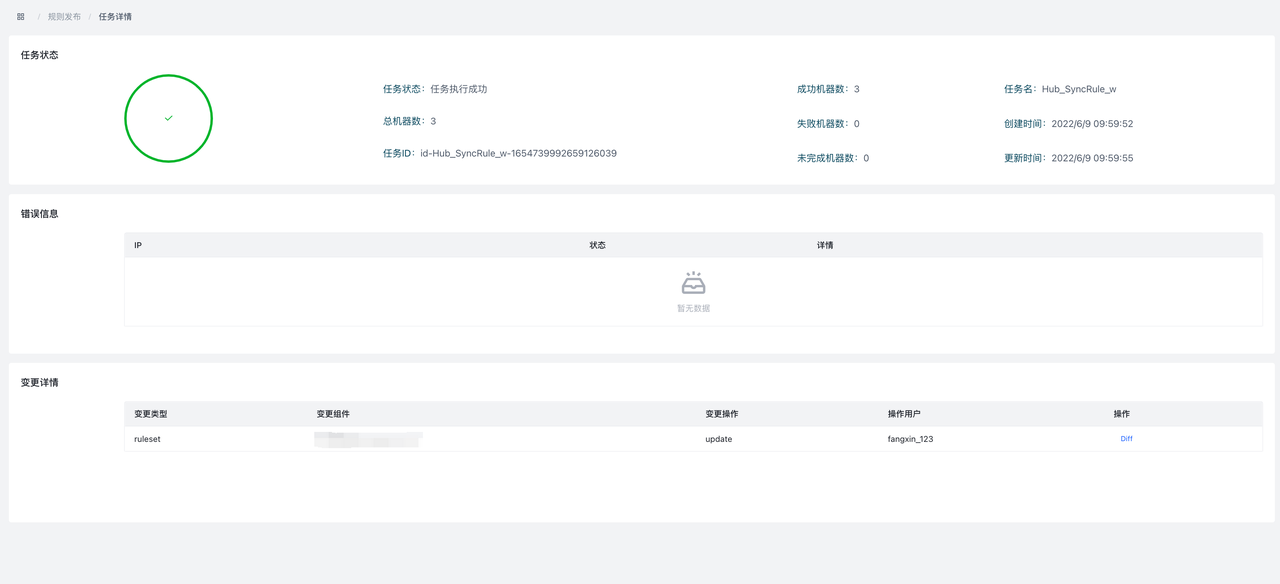

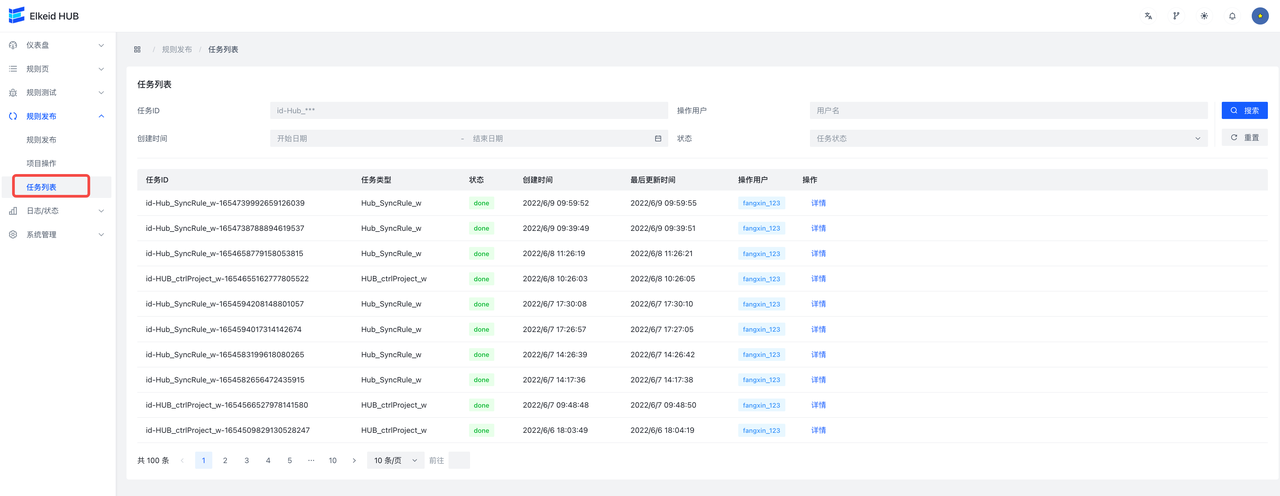

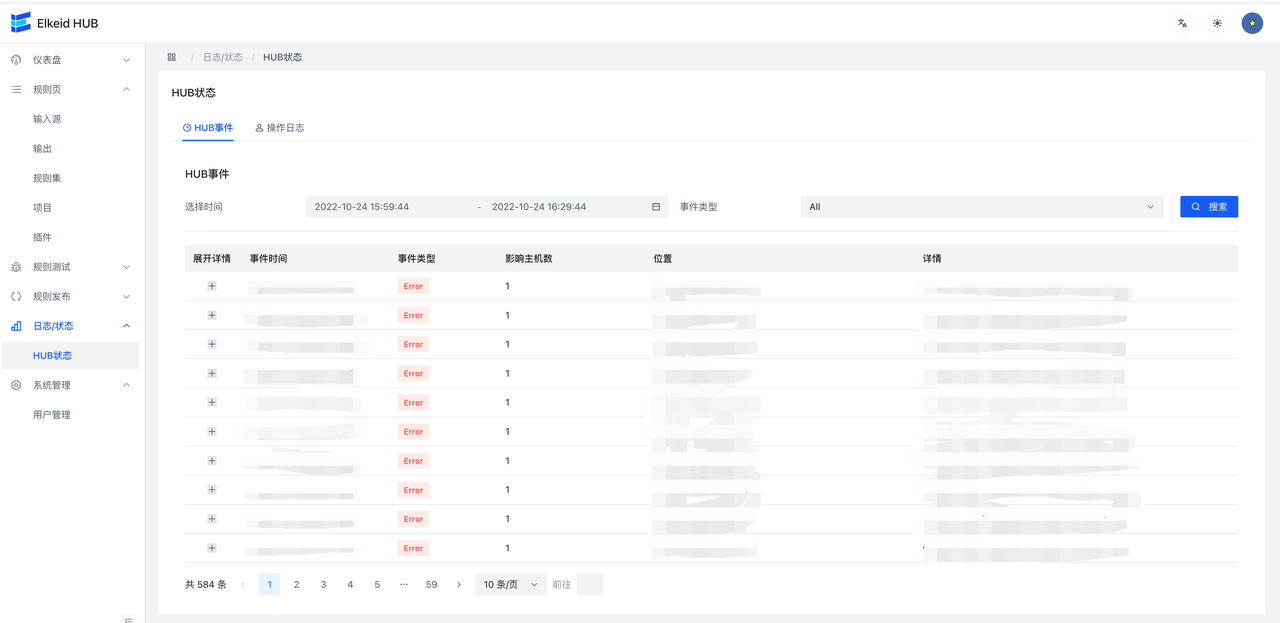

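After completing the above steps, go to the rule publishing page, which lists all the changes you just made; each entry corresponds to one component change. Click Diff to view the details. Once everything is correct, click Submit to push the changes to the HUB cluster.

After submission, you are redirected to the task details page, which shows the progress of the task.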

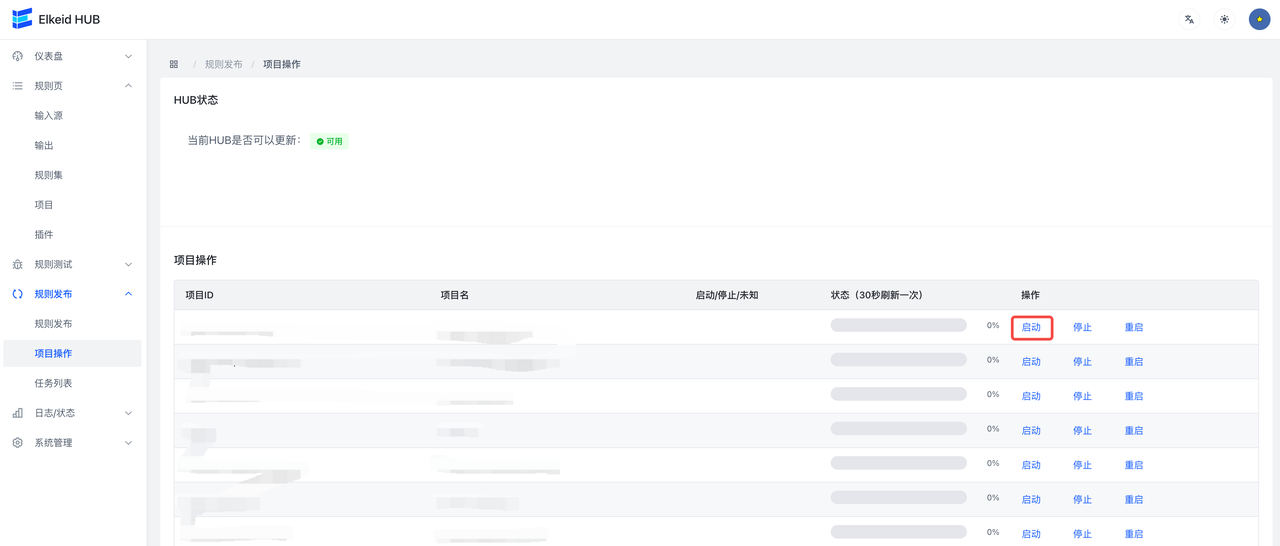

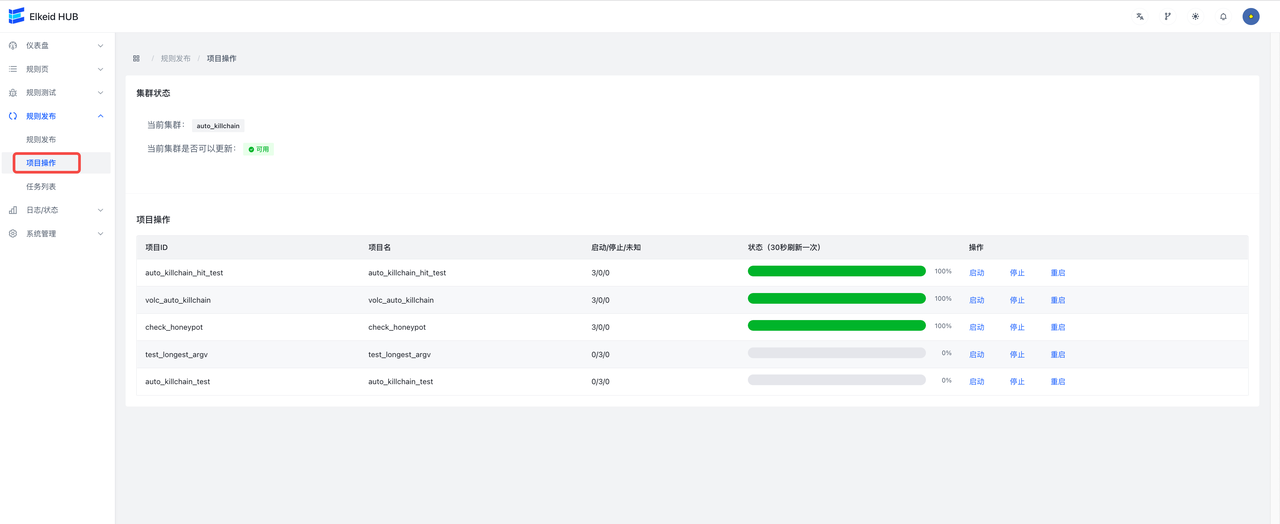

配置下发完成后,需要启动刚才新建的两个项目,进入规则发布->项目操作页面,分别启动全部已有的 项目。

进阶操作

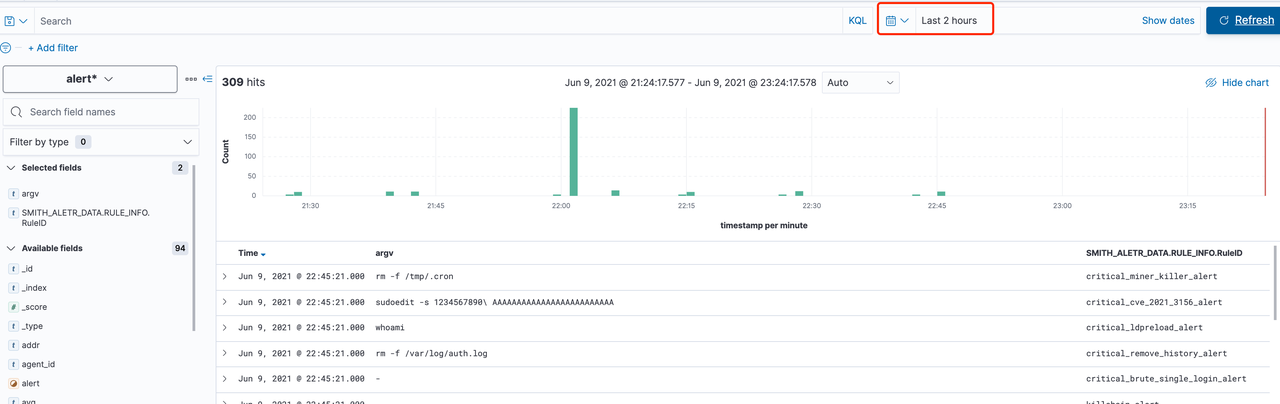

配置ES Index查看报警

此步骤适用于OutputType使用ES的用户,Kafka用户可以自行配置。

| 建议先使用反弹shell等恶意行为触发一下告警流,让至少一条数据先打入ES,从而在Kibana上可以配置Index Pattern。 |

|---|

- 在输出页配置es类型的输出,可开启AddTimestamp,方便在kibana页面配置相关索引

- 编辑hids项目,加入刚编些好的es输出

- 提交变更

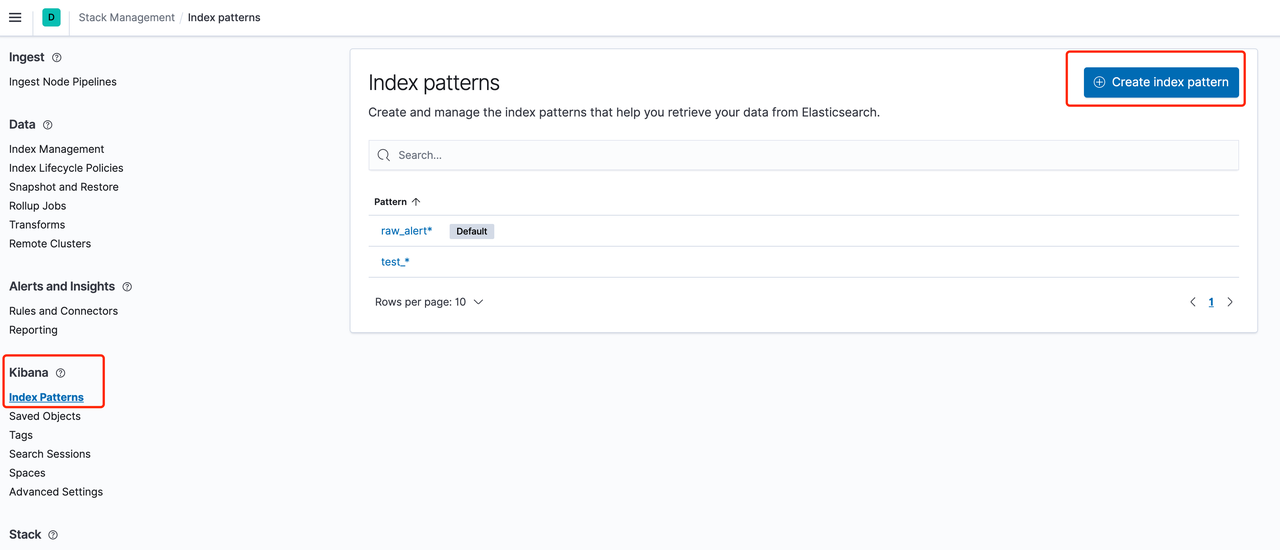

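- Open Stack Management, select Kibana Index Patterns, and click Create index pattern.

- Enter the ES output index name you configured earlier, with an asterisk * as a suffix. The recommended defaults are alert or raw_alert.

- If the index already contains data (that is, the alert flow has already been triggered), it should match the alert or raw_alert index.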

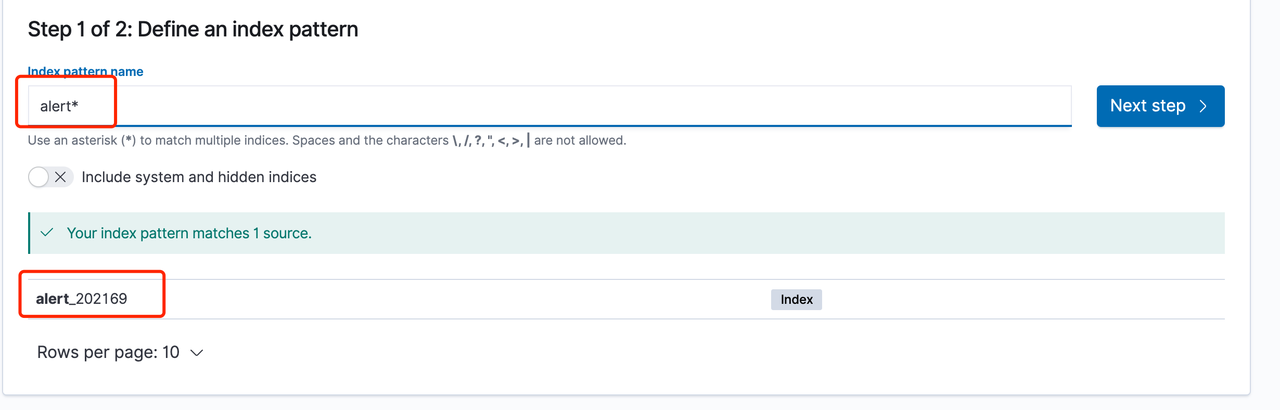

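- Configure the time field.

- If the index already contains data (that is, the alert flow has already been triggered), the timestamp field should be matched; otherwise no drop-down list appears here.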

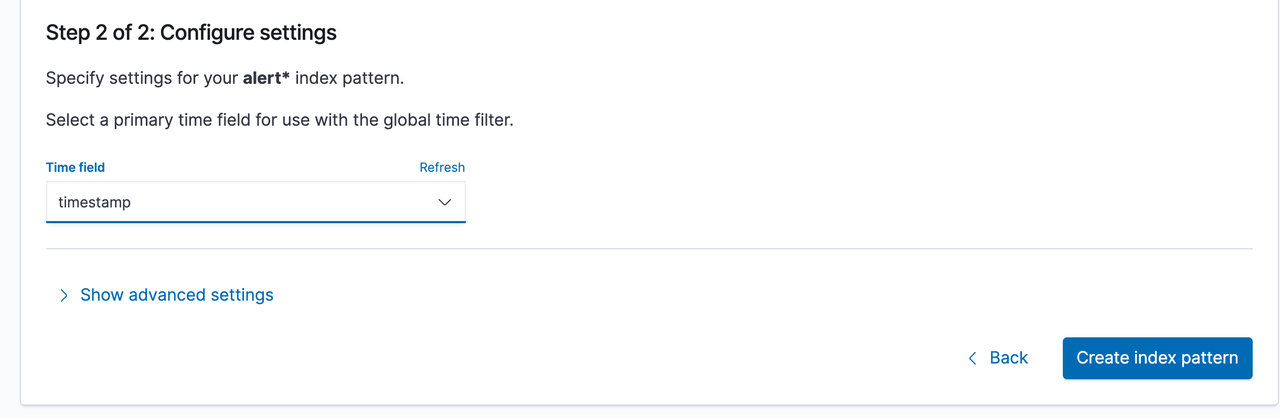

- Browse the data.

- Open Discover, select the alert* index pattern you just created, and adjust the time range on the right to see the alerts.

Example

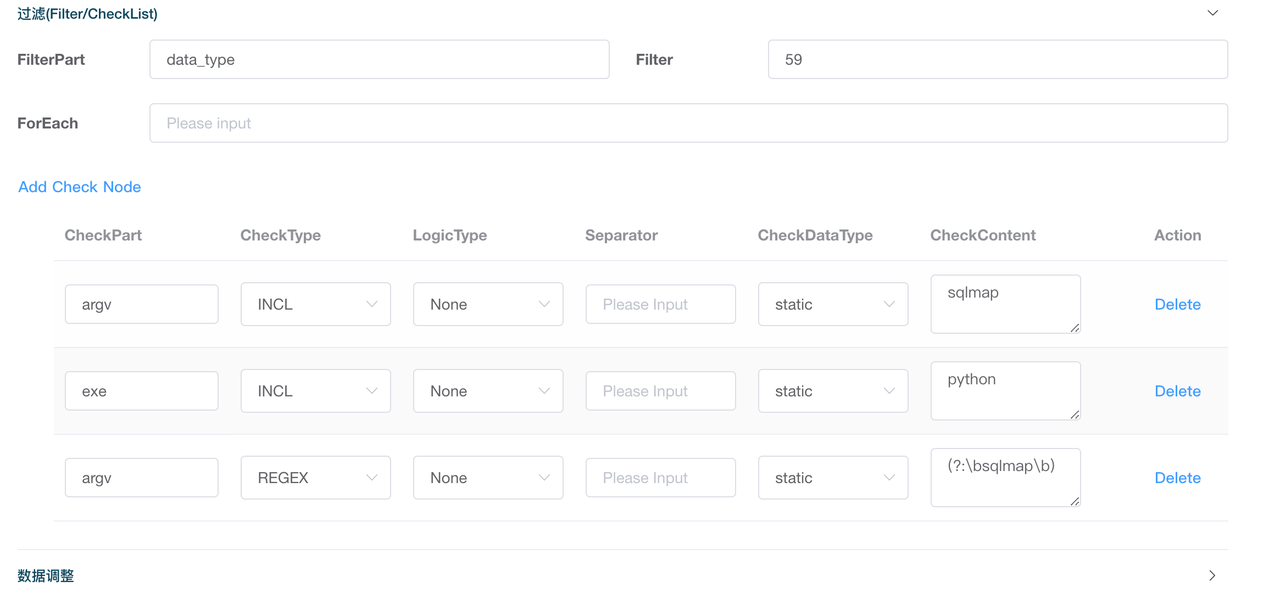

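Writing a sqlmap detection rule

In this tutorial, we write a rule in the front end that detects execution of the sqlmap command.

In this scenario we only care about Execve data, so we add a filter on data_type 59; the rule therefore only processes data whose data_type is 59. We then add a CheckNode that checks whether the argv field contains 'sqlmap'. The finished rule looks like this:

Three CheckNodes are configured: the first checks whether argv contains sqlmap, the second checks whether the exe field contains python, and the third uses a regular expression to check that sqlmap appears as a separate word. The alert fires only when all three match. When the rule is written, click Save.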
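The same logic can be expressed outside HUB to test it against sample command lines before publishing. This is a sketch, not the HUB rule format: `is_sqlmap_alert` is a hypothetical function that takes the data_type, exe, and argv values described above and applies the filter plus the three CheckNodes with AND.

```shell
#!/usr/bin/env bash
# Sketch of the sqlmap rule's logic (not the HUB XML rule).
# Returns 0 (alert) only if every condition holds.
is_sqlmap_alert() {
  local data_type="$1" exe="$2" argv="$3"
  [ "$data_type" = "59" ] || return 1                 # filter: execve events only
  case "$argv" in *sqlmap*) ;; *) return 1 ;; esac     # CheckNode 1: argv contains sqlmap
  case "$exe" in *python*) ;; *) return 1 ;; esac      # CheckNode 2: exe contains python
  # CheckNode 3: sqlmap appears as a separate word (no letter, digit, or _ on either side)
  printf '%s\n' "$argv" | grep -Eq '(^|[^[:alnum:]_])sqlmap([^[:alnum:]_]|$)'
}
```

Usage: `is_sqlmap_alert 59 /usr/bin/python3 "python3 sqlmap.py -u http://127.0.0.1/?id=1" && echo alert`.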

We create a dedicated Output and Project for this test rule, as shown below:

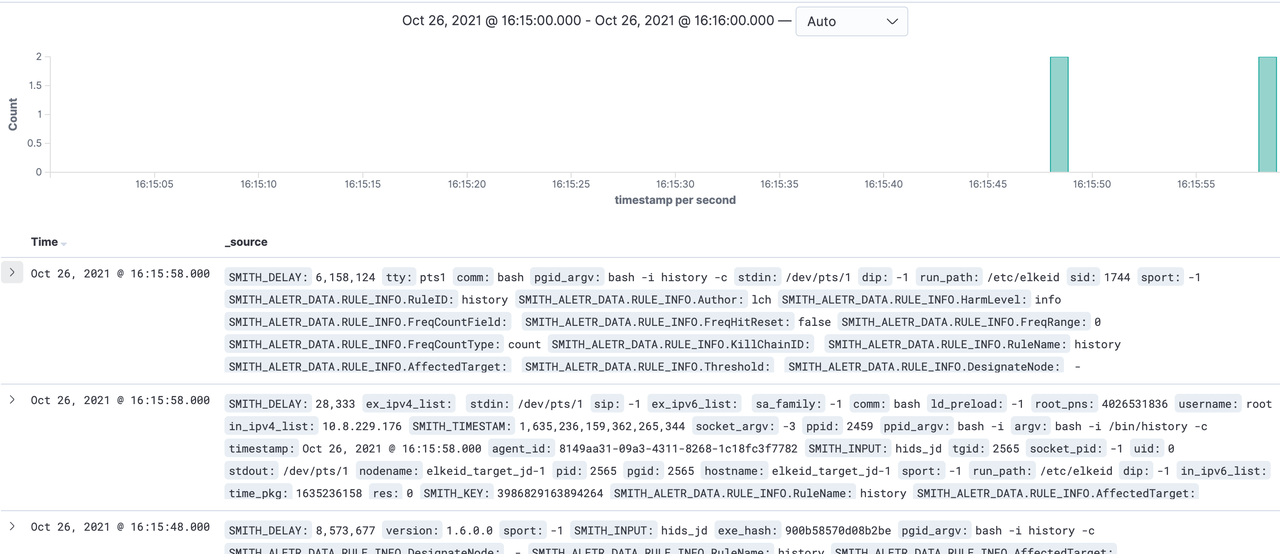

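Run sqlmap commands in the test environment and add the corresponding index pattern in Kibana. After a short wait, the corresponding alert appears.

As shown, an alert has been generated.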

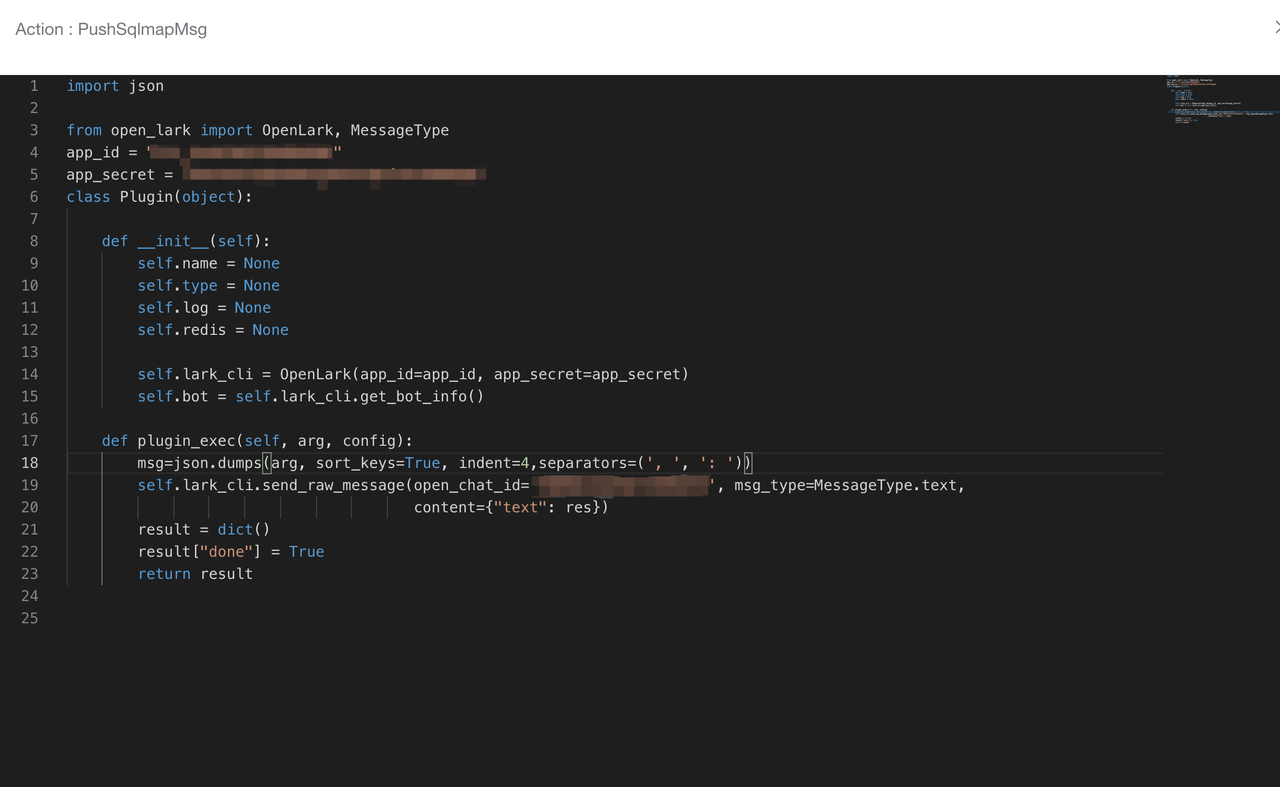

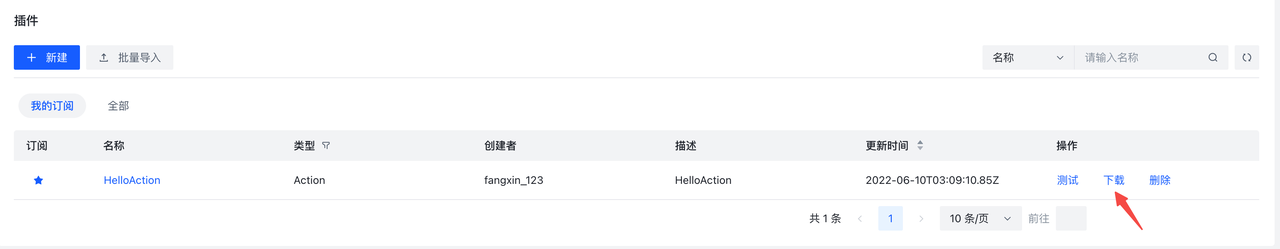

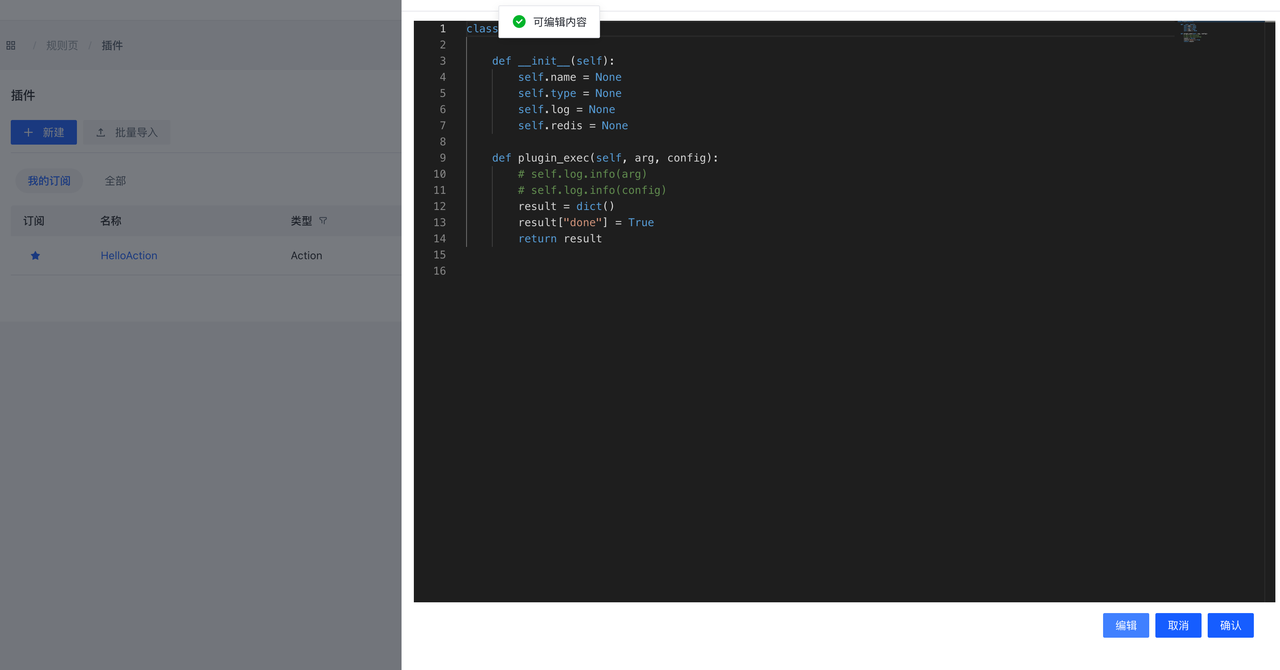

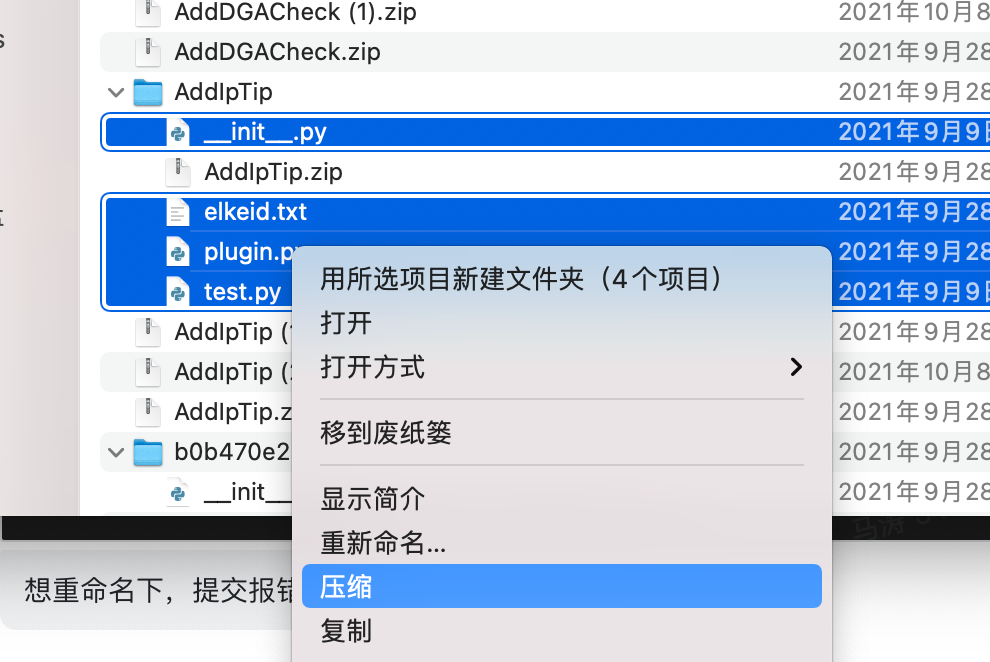

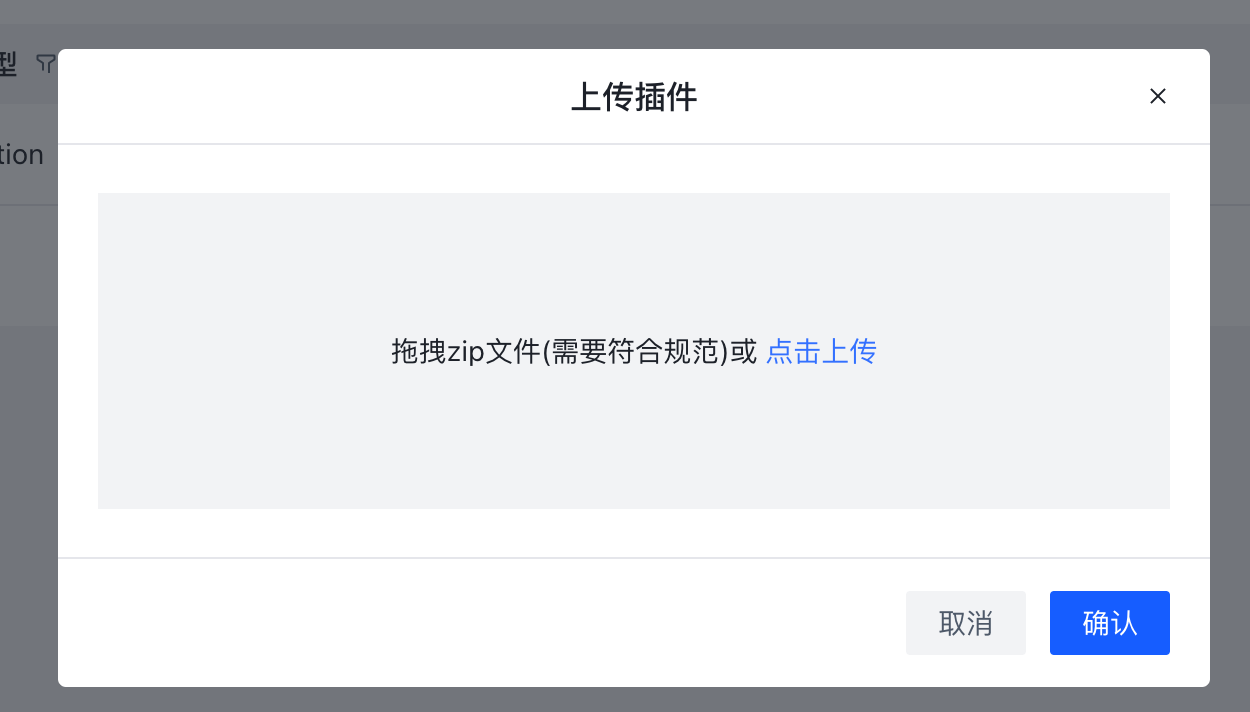

Writing a plugin that pushes alerts to Feishu

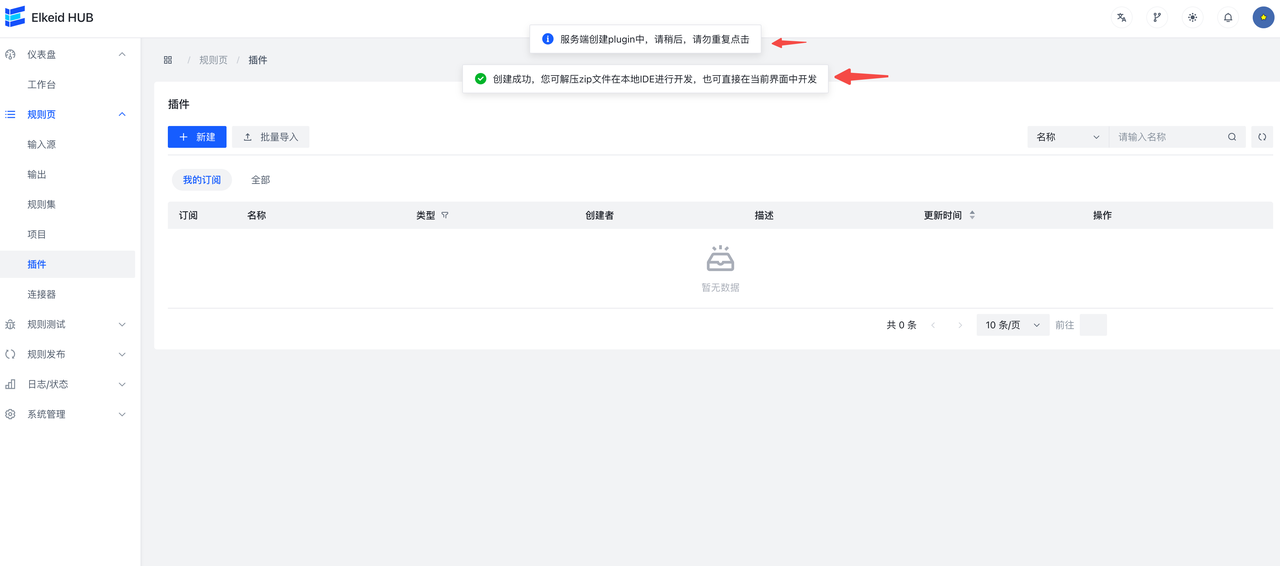

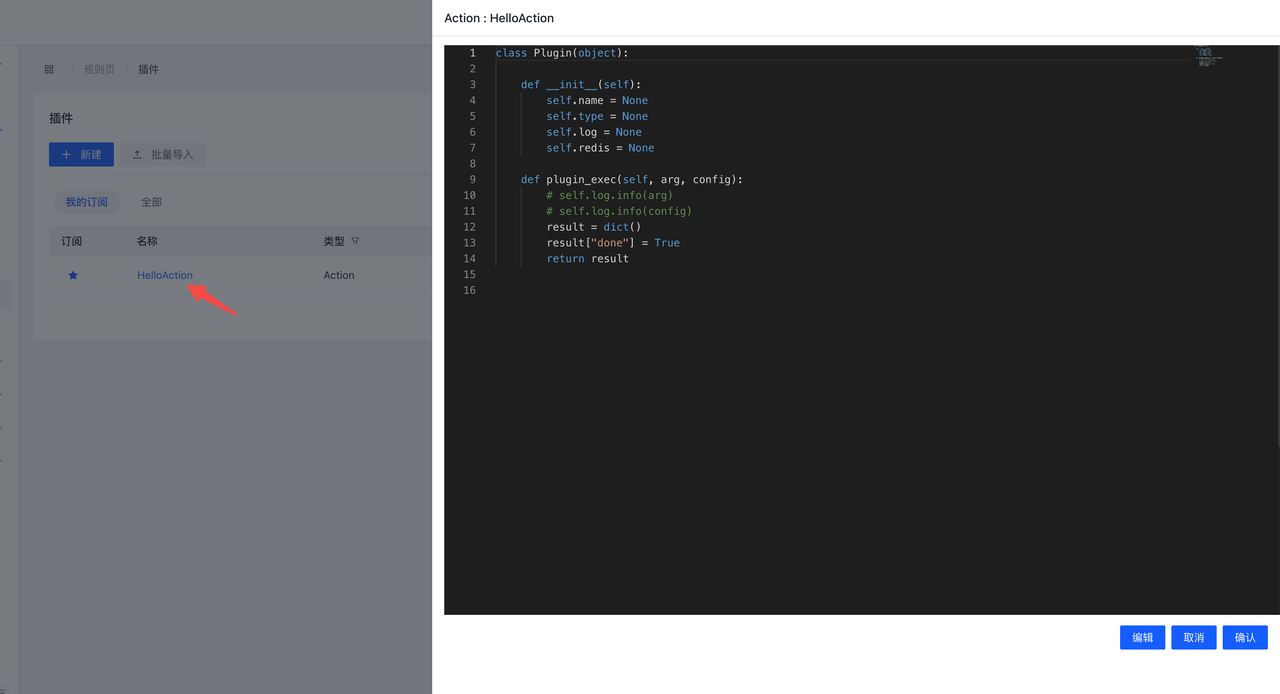

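The rule is written, but how do you get a Feishu notification when the event occurs? RuleSets do not support this directly; it can be implemented by writing a plugin.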

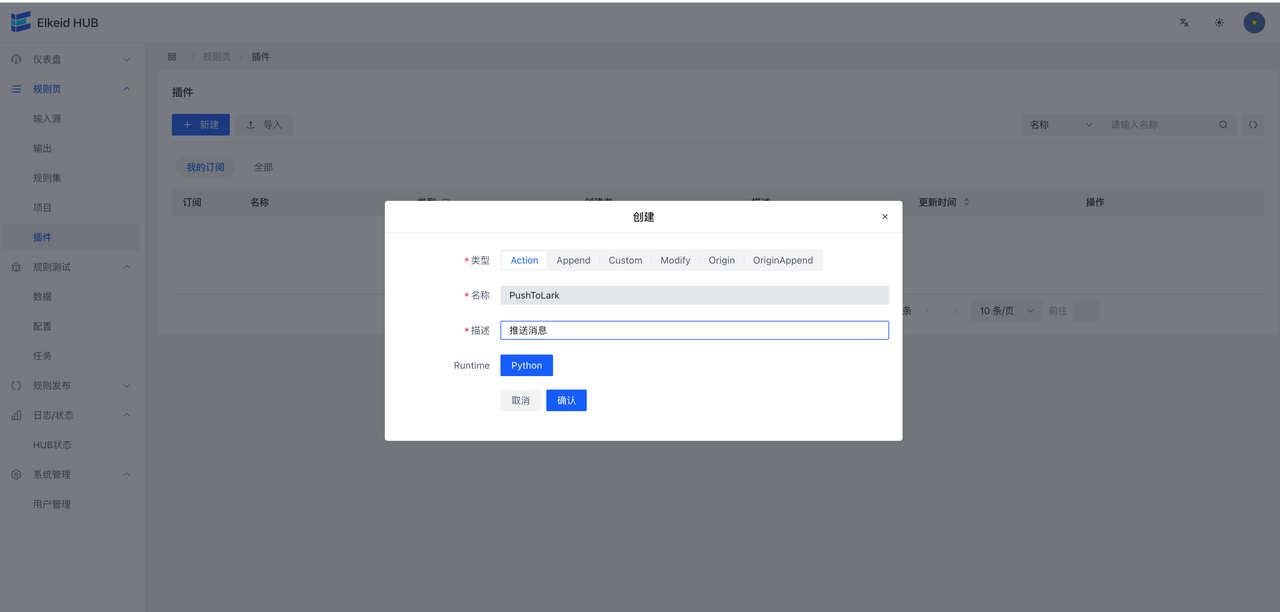

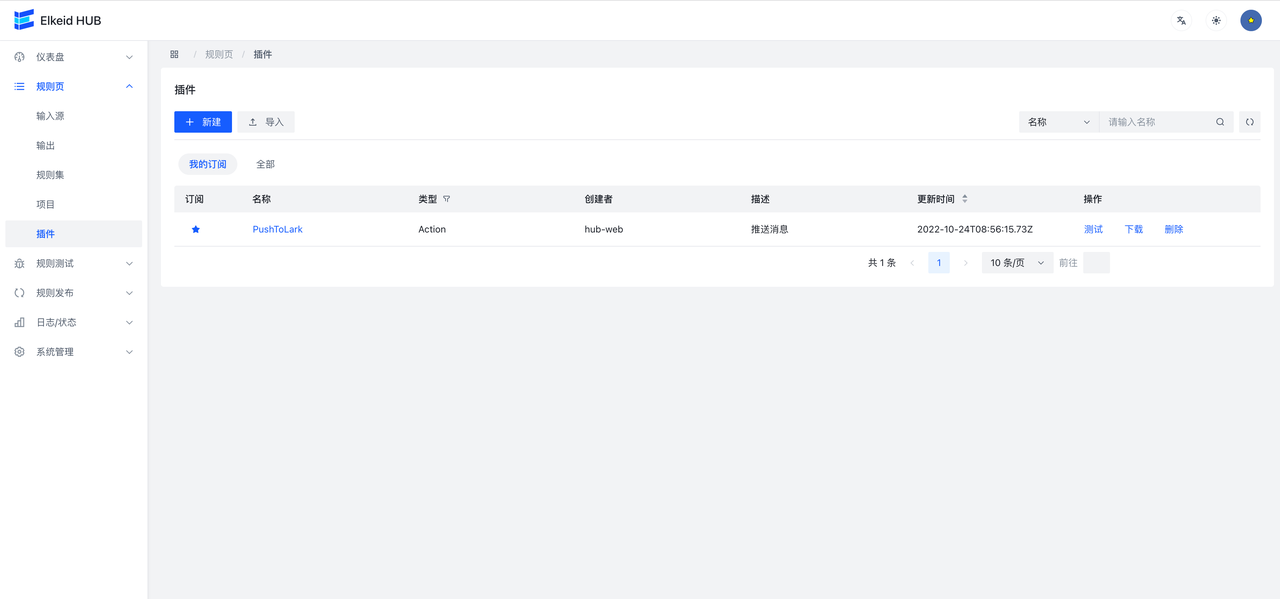

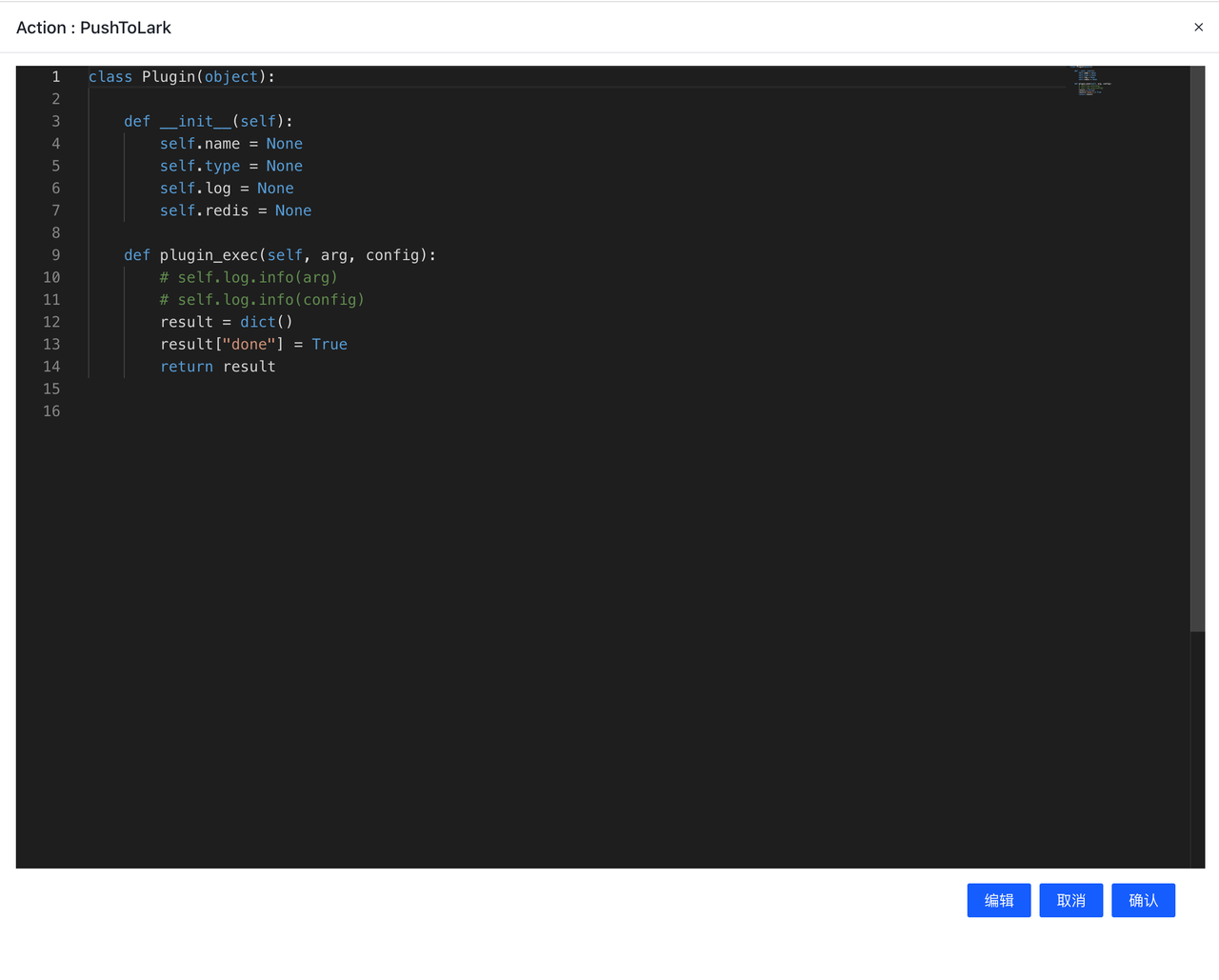

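To create and use a Python plugin:

- Click the Create button.

- Fill in the information as required.

- Click Confirm to finish creating the plugin.

- Edit the plugin.

The plugin is read-only by default; click Edit to modify it.

Click Save when you are done.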

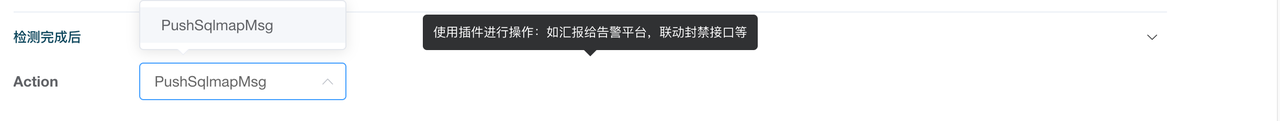

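- Add an action to the Rule.

- Publish the policy on the policy publishing page, the same way as other policy changes.

From then on, whenever the rule's conditions are triggered, the plugin is called to send the alert.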
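The HUB plugin itself is written in Python inside HUB. The sketch below only shows the Feishu side, namely the JSON a Feishu custom bot webhook accepts, so you can test a bot before wiring it into a plugin. It assumes a Feishu custom bot with signature verification disabled. `FEISHU_WEBHOOK`, `feishu_payload`, and `push_feishu` are hypothetical names, and the escaping handles only backslashes, double quotes, and newlines.

```shell
#!/usr/bin/env bash
# Build a Feishu custom-bot text message: {"msg_type":"text","content":{"text":"..."}}
feishu_payload() {
  local text
  # Escape backslashes and double quotes, then join lines with a literal \n.
  text="$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g' \
    | awk 'NR > 1 { printf "%s", "\\n" } { printf "%s", $0 }')"
  printf '{"msg_type":"text","content":{"text":"%s"}}' "$text"
}

# POST the message to the bot webhook URL held in FEISHU_WEBHOOK (hypothetical variable).
push_feishu() {
  curl -sS -X POST -H 'Content-Type: application/json' \
    -d "$(feishu_payload "$1")" "${FEISHU_WEBHOOK:?set FEISHU_WEBHOOK to the bot URL}"
}

# Example: FEISHU_WEBHOOK='https://open.feishu.cn/open-apis/bot/v2/hook/<token>' push_feishu "sqlmap detected"
```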

Elkeid CWPP Application Runtime Protection (RASP) User Guide

This guide covers the following features:

- Operating and maintaining application runtime components through CWPP.

- Controlling RASP to implant probes into target processes to collect runtime behavior.

- Implant configuration.

- Blocking/filtering configuration.

- Viewing CWPP alert events.

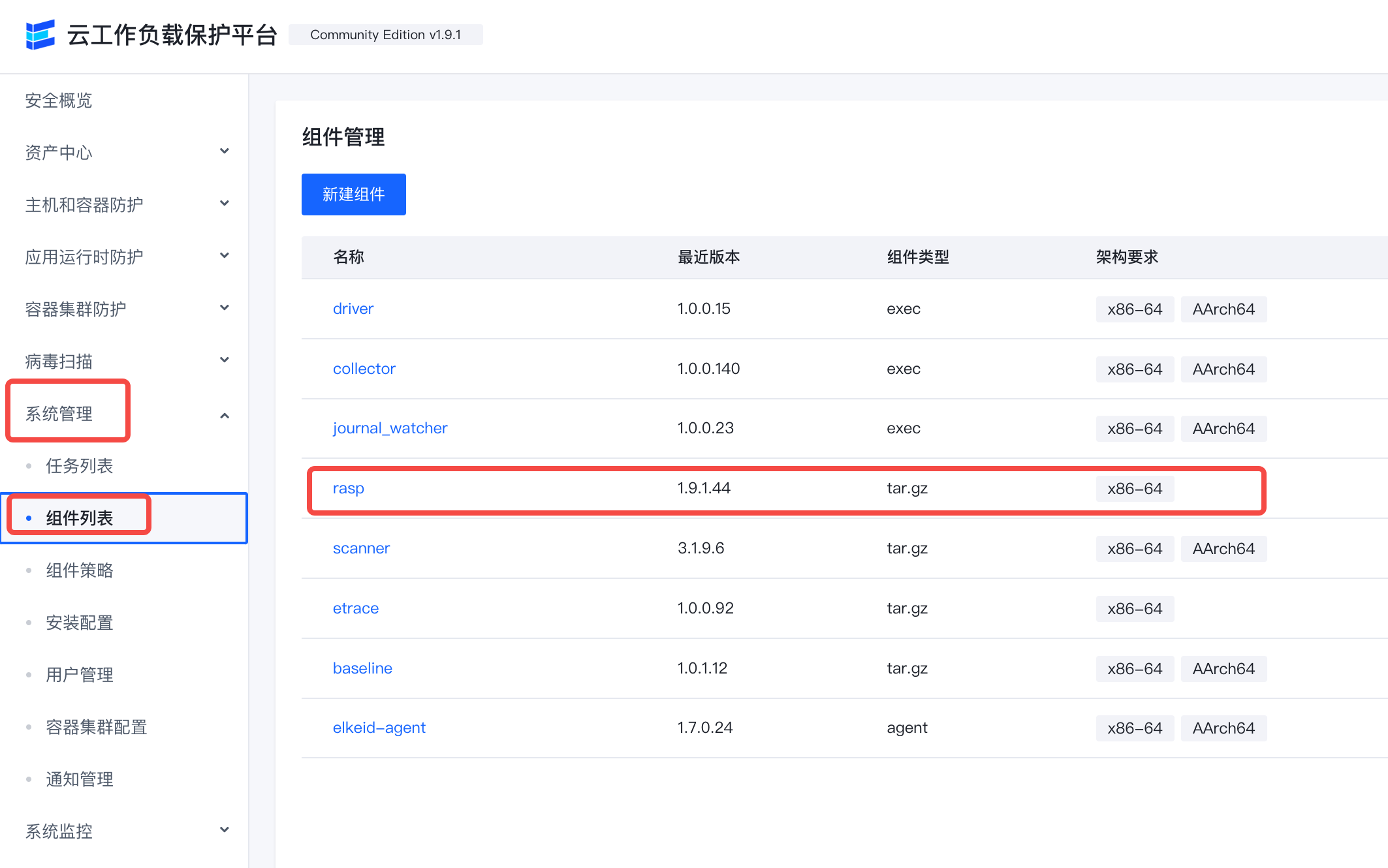

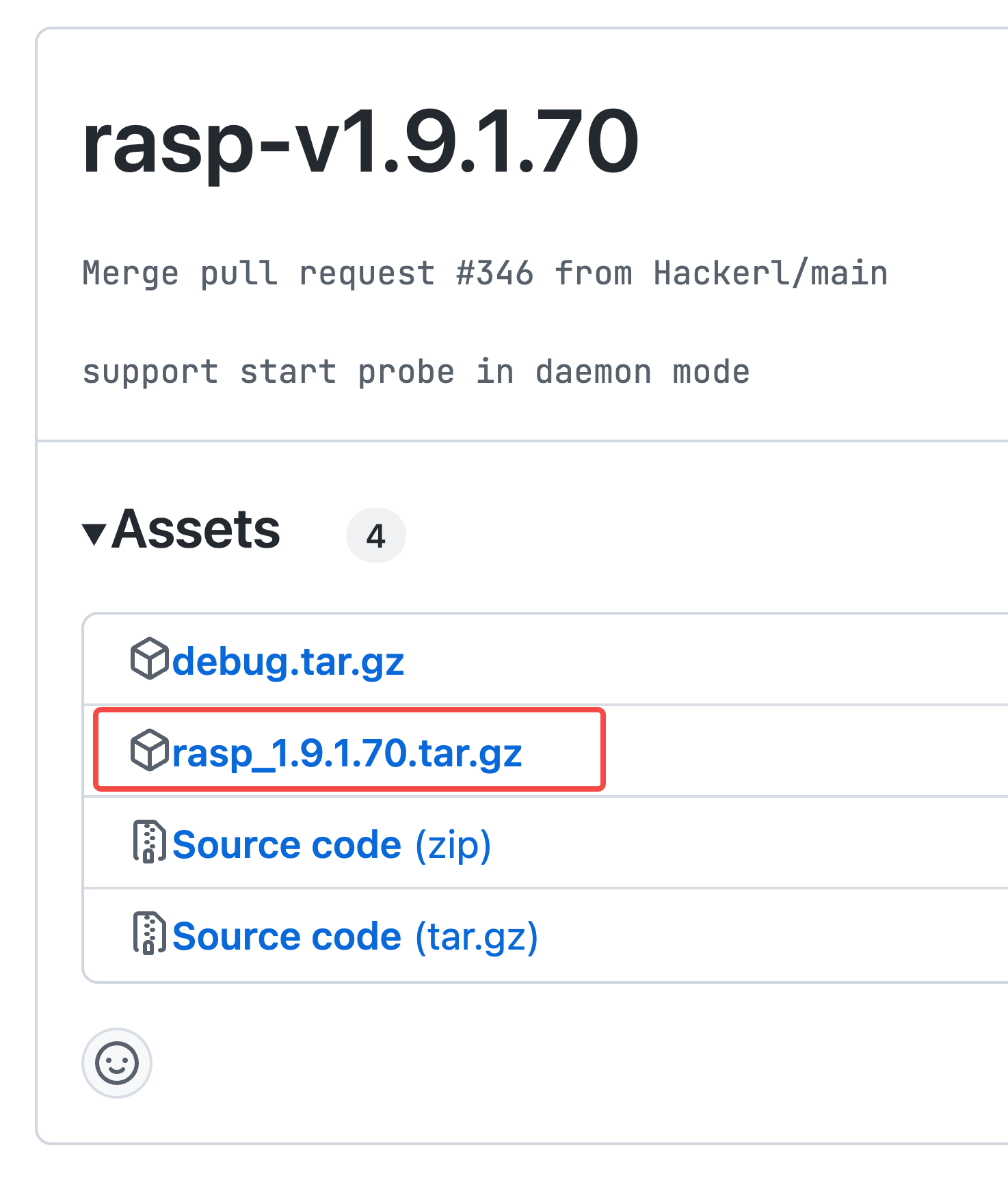

Install/Update RASP Components

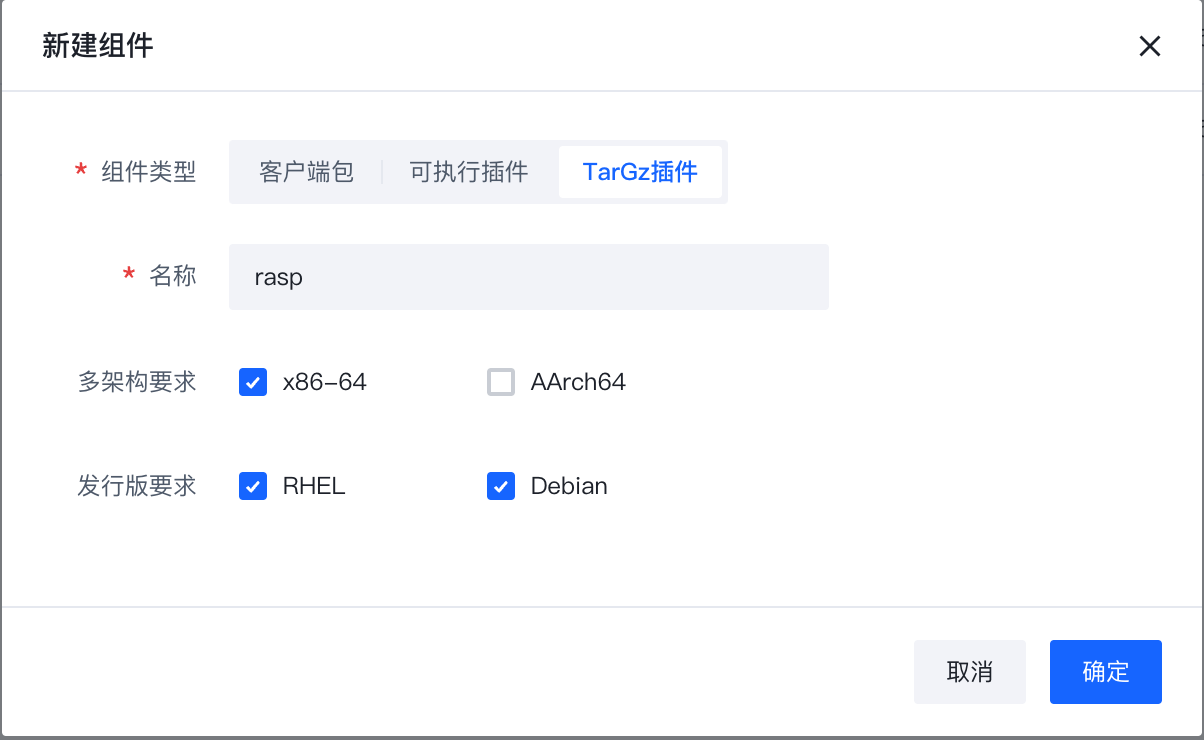

- Make sure the rasp component is included in the component list.

If there is no rasp component, you need to create a new component named rasp.

Note: because of how the Agent works, the plugin name must be the same as the plugin binary name.

Publish a version and upload the package in tar.gz format.

Use plugin version 1.9.1.*.

Download: bytedance/Elkeid releases on GitHub.

- Make sure the RASP component is included in the component policy.

- Synchronize the policy to the machines.
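Because the plugin name must match the binary name, checking the package before uploading it can save a failed rollout. A hypothetical helper, `check_plugin_package`, that assumes only that the tar.gz contains a file whose base name equals the component name somewhere inside; the exact layout of official release packages is not described here.

```shell
#!/usr/bin/env bash
# Hypothetical pre-upload check: PKG must be a readable .tar.gz containing a file
# whose base name equals NAME (the component name, e.g. "rasp").
check_plugin_package() {
  local pkg="$1" name="$2"
  case "$pkg" in *.tar.gz) ;; *) echo "not a .tar.gz file: $pkg" >&2; return 1 ;; esac
  tar -tzf "$pkg" >/dev/null 2>&1 || { echo "unreadable archive: $pkg" >&2; return 1; }
  # Directory entries end in "/", so their last path field is empty and never matches.
  tar -tzf "$pkg" | awk -F/ -v n="$name" '$NF == n { found = 1 } END { exit !found }' \
    || { echo "no file named '$name' in $pkg" >&2; return 1; }
}

# Example: check_plugin_package rasp-1.9.1.x.tar.gz rasp
```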

Running Status

After the RASP component is deployed, RASP automatically analyzes the processes on the machine and reports the processes that meet the conditions for probe implantation to the Running Status page.

The Details link on the right shows additional information about each process.

Configure

Configure which processes have RASP protection enabled.

Click New Configuration.

Within one configuration, the form items are combined with AND.

Separate configurations are combined with OR.

| Form item | Required | Meaning | Remarks |
|---|---|---|---|
| Host labels | No | Limits this configuration to hosts with the given labels | Host labels are the same as the labels in Asset Management |
| IP | No | Matches the machine IP | |
| Process command line | No | Regular expression matched against the process command line | |
| Environment variables | No | Matches the environment variables of the process | Multiple environment variables can be specified |
| Runtime type | Yes | Which runtimes this configuration applies to | Multiple selections allowed |
| Enable injection | Yes | Whether to enable RASP protection for the processes matched by this configuration | Default is No |
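The AND-within, OR-across rule can be modeled in a few lines. This is a simplified sketch, not Elkeid's matcher: each configuration is a hypothetical one-line format `ip|cmdline_regex|env|runtimes` where an empty field matches anything. Host labels are left out, only one `KEY=VALUE` environment variable is supported, and a regex containing `|` cannot be expressed in this format.

```shell
#!/usr/bin/env bash
# config_matches CFG IP CMDLINE ENV RUNTIME: all non-empty fields of CFG must match (AND).
# ENV is the process environment as space-separated KEY=VALUE pairs.
config_matches() {
  local cfg="$1" ip="$2" cmdline="$3" env="$4" runtime="$5"
  local c_ip c_cmd c_env c_rt
  IFS='|' read -r c_ip c_cmd c_env c_rt <<< "$cfg"
  [ -z "$c_ip" ]  || [ "$c_ip" = "$ip" ] || return 1
  [ -z "$c_cmd" ] || printf '%s\n' "$cmdline" | grep -Eq -- "$c_cmd" || return 1
  [ -z "$c_env" ] || case " $env " in *" $c_env "*) ;; *) return 1 ;; esac
  [ -z "$c_rt" ]  || case ",$c_rt," in *",$runtime,"*) ;; *) return 1 ;; esac
}

# any_config_matches IP CMDLINE ENV RUNTIME < configs: configurations are OR-ed.
any_config_matches() {
  local cfg
  while IFS= read -r cfg; do
    [ -n "$cfg" ] || continue
    config_matches "$cfg" "$@" && return 0
  done
  return 1
}
```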

Each configuration can also define blocking and filtering:

- Blocking: a regular expression is matched against one parameter of a hooked function.

  - When the regular expression matches, the function throws an exception, which blocks the call.

  - When the regular expression does not match, the function runs normally.

- Filtering: a regular expression is matched against parameters of a hooked function.

  - Contains: only parameter data that matches is reported.

  - Does not contain: only parameter data that does not match is reported.
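A simplified model of the two options, useful for trying out a regular expression before saving it. It is not Elkeid code: `rasp_block` and `rasp_filter` are hypothetical functions that take the regex and one argument value and print the decision.

```shell
#!/usr/bin/env bash
# rasp_block REGEX ARG: "block" if the hooked argument matches, otherwise "allow".
rasp_block() {
  local regex="$1" arg="$2"
  if printf '%s\n' "$arg" | grep -Eq -- "$regex"; then echo block; else echo allow; fi
}

# rasp_filter MODE REGEX ARG: decide whether the argument data is reported.
#   contains     -> report only arguments that match
#   not_contains -> report only arguments that do not match
rasp_filter() {
  local mode="$1" regex="$2" arg="$3" matched=0
  printf '%s\n' "$arg" | grep -Eq -- "$regex" && matched=1
  case "$mode" in
    contains)     if [ "$matched" -eq 1 ]; then echo report; else echo skip; fi ;;
    not_contains) if [ "$matched" -eq 0 ]; then echo report; else echo skip; fi ;;
    *) echo "unknown mode: $mode" >&2; return 1 ;;
  esac
}
```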

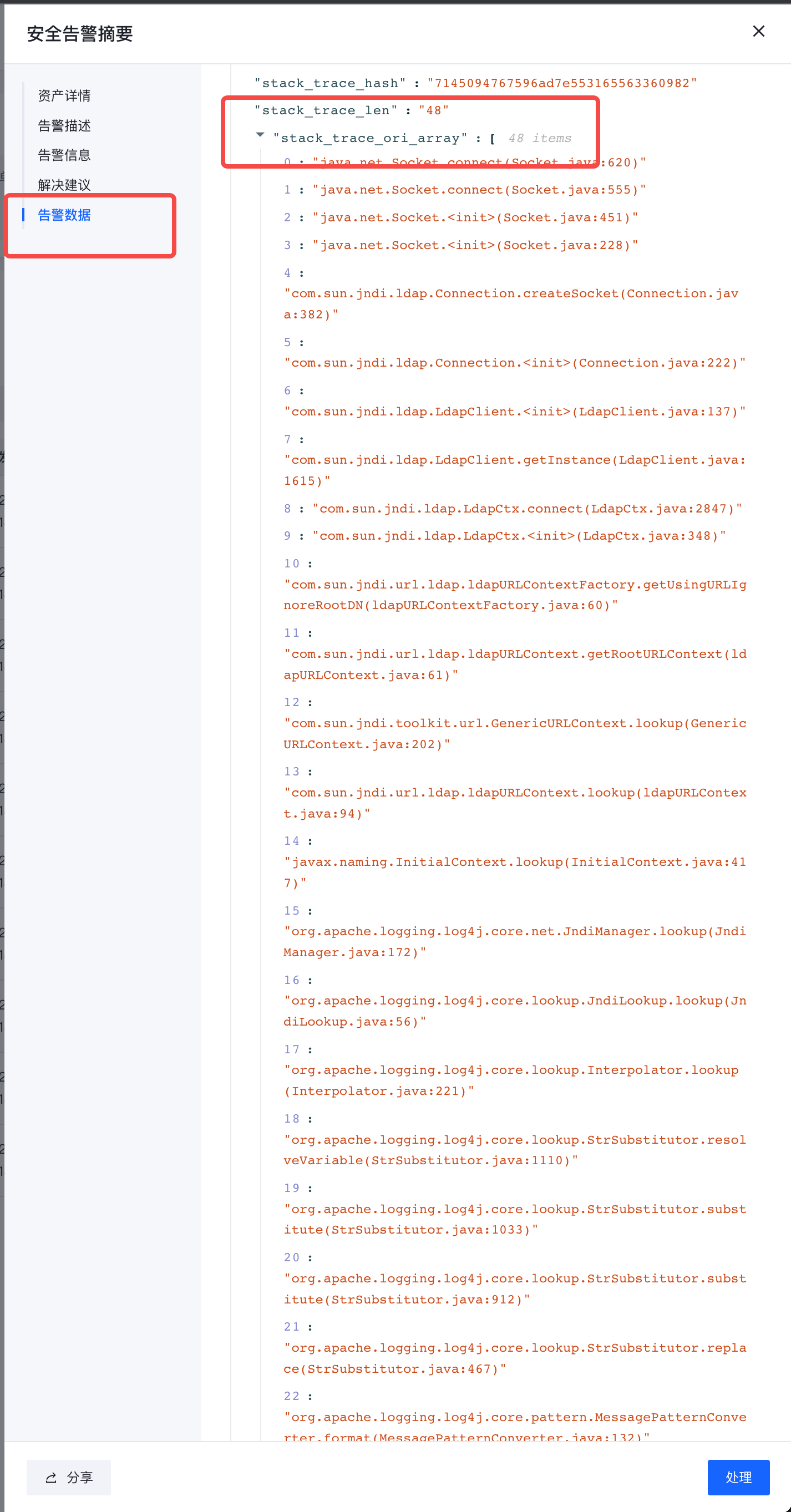

Intrusion detection

After the RASP probe is implanted in the target process, it continuously reports application behavior and generates events and alerts when abnormal behavior is found.

- The alert data on the right shows parameter details and the call stack.

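Differences between the Community Edition and the Enterprise Edition

Elkeid Console: Community Edition v1.9.1 vs. Enterprise Edition

| Feature | Elkeid Community Edition | Elkeid Enterprise Edition |
|---|---|---|
| Linux data collection | ✅ | ✅ |
| RASP probe | ✅ | ✅ |
| K8s Audit Log collection | ✅ | ✅ |
| Agent control plane | ✅ | ✅ |
| Host status and details | ✅ | ✅ |
| Ransomware bait | 🙅‍♂️ | ✅ |
| Asset collection | ✅ | ✅ |
| Advanced asset collection | 🙅‍♂️ | ✅ |
| Container cluster asset collection | ✅ | ✅ |
| Exposure and vulnerability analysis | 🙅‍♂️ | ✅ |
| Host/container basic intrusion detection | A few samples | ✅ |
| Host/container behavioral sequence intrusion detection | 🙅‍♂️ | ✅ |
| RASP basic intrusion detection | A few samples | ✅ |
| RASP behavioral sequence intrusion detection | 🙅‍♂️ | ✅ |
| K8s basic intrusion detection | A few samples | ✅ |
| K8s behavioral sequence intrusion detection | 🙅‍♂️ | ✅ |
| K8s threat analysis | 🙅‍♂️ | ✅ |
| Alert tracing (behavior tracing) | 🙅‍♂️ | ✅ |
| Alert tracing (persistence tracing) | 🙅‍♂️ | ✅ |
| Alert whitelist | ✅ | ✅ |
| Multi-alert aggregation | 🙅‍♂️ | ✅ |
| Threat response (process) | 🙅‍♂️ | ✅ |
| Threat response (network) | 🙅‍♂️ | ✅ |
| Threat response (file) | 🙅‍♂️ | ✅ |
| File quarantine | 🙅‍♂️ | ✅ |
| Vulnerability detection | Limited vulnerability intelligence | ✅ |
| Hot update of vulnerability intelligence | 🙅‍♂️ | ✅ |
| Baseline check | A few baselines | ✅ |
| RASP hot patch | 🙅‍♂️ | ✅ |
| Virus scan | ✅ | ✅ |
| User behavior log analysis | 🙅‍♂️ | ✅ |
| Plugin management | ✅ | ✅ |
| System monitoring | ✅ | ✅ |
| System management | ✅ | ✅ |
| Windows support | 🙅‍♂️ | ✅ |
| Honeypot | 🙅‍♂️ | 🚘 |
| Active defense | 🙅‍♂️ | 🚘 |
| Cloud-based malware scanning | 🙅‍♂️ | 🚘 |
| Tamper protection | 🙅‍♂️ | 🚘 |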

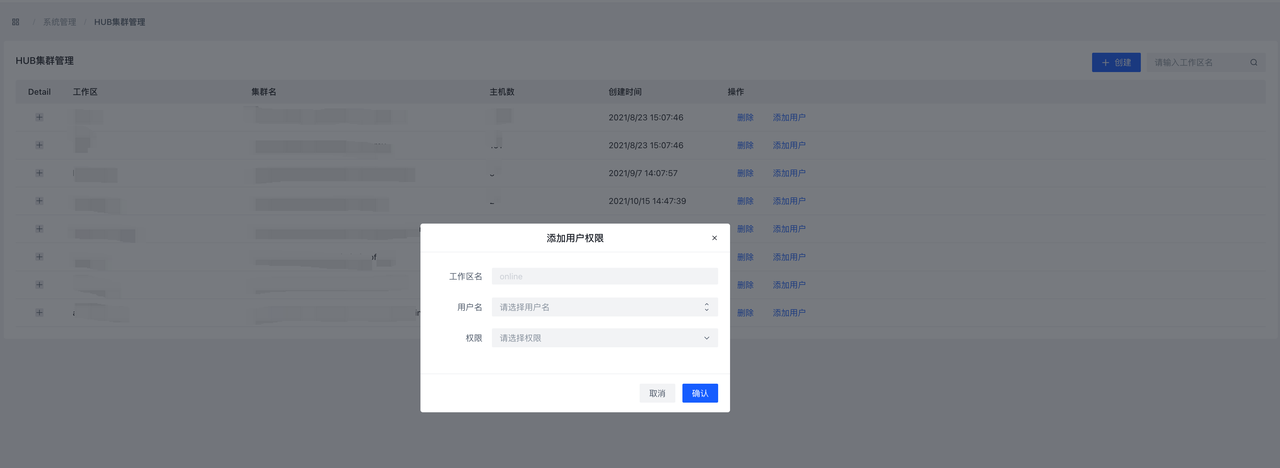

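Elkeid HUB: Community Edition vs. Enterprise Edition

| Feature | Elkeid HUB Community Edition | Elkeid HUB Enterprise Edition |
|---|---|---|
| Full rule-writing capability (see the Elkeid HUB Community Edition User Guide): basic detection (equals/contains/starts with/regex, etc.), threshold/frequency/GROUP BY detection, multi-keyword detection, array/complex structure detection, CEP node detection, field add/modify/delete, plugin integration | ✅ | ✅ |
| System/user management | ✅ | ✅ |
| Cluster deployment | ❌ | ✅ |
| Multiple workspaces | ❌ | ✅ |
| Input/output/ruleset/project component operations | ✅ | ✅ |
| Load and rule monitoring | ✅ | ✅ |
| Component data sampling | ✅ | ✅ |
| Rule testing | ✅ | ✅ |
| Data table (MYSQL/REDIS/ClickHouse/Mongodb/ES) consumption and rule correlation | ❌ | ✅ |
| Custom plugins | ✅ | ✅ |
| Plugins callable by external systems | ❌ | ✅ |
| Log/event monitoring | ✅ | ✅ |
| Tracing/persistence | ❌ | ✅ |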

Open Source Policies

HIDS open source policy list

| Alert ID | Alert name | Description | Alert type | Data type | Severity |
|---|---|---|---|---|---|
| hidden_module_detect | Hidden kernel module | Hidden kernel module detected | Backdoor persistence | Hooks | critical |
| bruteforce_single_source_detect | Bruteforce from single-source | Bruteforce from a single source address | Brute force | Log Monitor | medium |
| bruteforce_multi_source_detect | Bruteforce from multi-sources | Bruteforce from multiple source addresses | Brute force | Log Monitor | medium |
| bruteforce_success_detect | Bruteforce success | Bruteforce login attempt ended with a successful password login | Brute force | Log Monitor | critical |
| binary_file_hijack_detect1 | Binary file hijack | Common binary file hijacking, file creation detection | Disguised trojan | execve | medium |
| binary_file_hijack_detect2 | Binary file hijack | Common binary file hijacking, file renaming detection | Disguised trojan | execve | critical |
| binary_file_hijack_detect3 | Binary file hijack | Common binary file hijacking, file linkage detection | Disguised trojan | execve | critical |
| user_credential_escalation_detect | User credential escalation | Non-root user escalates to root privileges | Privilege escalation | Log Monitor | medium |
| privilege_escalation_suid_sgid_detect_1 | User credential escalation | Non-root user escalates privileges with suid/sgid | Privilege escalation | Log Monitor | medium |
| privilege_escalation_suid_sgid_detect_2 | User credential escalation | Non-root user escalates privileges with suid/sgid | Privilege escalation | execve | medium |
| reverse_shell_detect_basic | Reverse shell | Reverse shell with connection | Code execution | execve | critical |
| reverse_shell_detect_argv | Reverse shell | Reverse-shell-like argv during execution | Code execution | execve | high |
| reverse_shell_detect_exec | Reverse shell | Reverse shell with exec | Code execution | execve | high |
| reverse_shell_detect_pipe | Reverse shell | Reverse shell with pipe | Code execution | execve | high |
| reverse_shell_detect_perl | Reverse shell | Reverse shell with Perl | Code execution | execve | high |
| reverse_shell_detect_python | Reverse shell | Reverse shell with Python | Code execution | execve | high |
| bind_shell_awk_detect | Bind shell with awk | Suspicious bind shell with awk | Code execution | execve | high |
| pipe_shell_detect | Double-piped reverse shell | Double-piped reverse shell | Code execution | execve | high |
| suspicious_rce_from_consul_service_detect | Suspicious RCE-like behavior | Suspicious RCE-like behavior from the Consul service | Intrusion probing | execve | high |
| suspicious_rce_from_mysql_service_detect | Suspicious RCE-like behavior | Suspicious RCE-like behavior from the MySQL service | Intrusion probing | execve | high |
| dnslog_detect1 | Suspicious query to dnslog | Suspicious dnslog-like query on hosts | Intrusion probing | execve | high |
| dnslog_detect2 | Suspicious query to dnslog | Suspicious dnslog-like query on hosts | Intrusion probing | execve | high |
| container_escape_mount_drive_detect | Container escape with mounted drive | Unnecessary behavior inside a container: mounting a drive | Privilege escalation | execve | high |
| container_escape_usermode_helper_detect | Container escape with usermodehelper | Suspicious container escape with usermode helper | Privilege escalation | execve | high |
| signature_scan_maliciou_files_detect | Malicious files | Detected abnormal files with a malicious signature | Static scan | execve | high |

RASP open source policy list

| Rule name | Runtime | Rule description |
|---|---|---|
| JSP Command Execution | JVM | Detects command execution from JavaServer Pages |
| Log4j Exploit | JVM | Detected exploit process for log4j |
| WebShell Behavior Detect | JVM | Suspected WebShell-like behavior found in the JVM runtime |
| Command Execution Caused By FastJson Deserialization | JVM | FastJson deserializes attacker-constructed data, resulting in command execution |
| Command Execution In preg_replace Function | PHP | Unusual command-execution behavior of the PHP preg_replace function |
| BeHinder WebShell Detect | PHP | BeHinder WebShell detected through the PHP runtime stack trace |

K8s open source policy list

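| Level-1 category | Level-2 category | Level-3 category / alert name (risk name) | Alert description | Alert type | Severity | ATT&CK ID | Risk explanation | Recommended handling (including fields to check) |
|---|---|---|---|---|---|---|---|---|
| Abnormal behavior | Authentication/authorization failure | Anonymous access | Anonymous user access | Intrusion probing | high | T1133 | Anonymous user access to the cluster was detected; someone may be probing the cluster. | 1. Use fields such as UserAgent, action, and request URI to determine whether the operation is sensitive. If it is, someone may be attacking the cluster; use the source IP field and the asset information associated with that IP to identify the initiator and investigate further. 2. If not, consider whitelisting it (note: whitelist on a combination of fields to avoid missed detections). Fields to check: UserAgent, account/impersonated account, action, resource |
| Abnormal behavior | Authentication/authorization failure | Authentication failure | Enumerating/getting secrets, authentication failed | Intrusion probing | low | T1133 | Authentication failed while enumerating or getting cluster Secrets. Attackers may try to obtain cluster secrets for follow-up attacks. | 1. First use the client's UserAgent, account/impersonated account, and similar fields to judge whether this is business, R&D, or operations activity. 2. If it is recurring expected behavior and the risk is judged controllable after investigation, consider whitelisting it (note: whitelist on a combination of fields to avoid missed detections). 3. If it is unexpected behavior, use the source IP field and the asset information associated with that IP to identify the initiator and investigate further. Fields to check: UserAgent, account/impersonated account, action, resource name |
| Abnormal behavior | Authentication/authorization failure | Authorization failure | Enumerating/getting secrets, authorization failed | Intrusion probing | medium | T1133 | Authorization failed while enumerating or getting cluster Secrets. Attackers may try to obtain secrets for follow-up attacks. | 1. First use the client's UserAgent, account/impersonated account, and similar fields to judge whether this is business, R&D, or operations activity. 2. If it is recurring expected behavior and the risk is judged controllable after investigation, consider whitelisting it (note: whitelist on a combination of fields to avoid missed detections). 3. If it is unexpected behavior, use the source IP field and the asset information associated with that IP to identify the initiator and investigate further. Fields to check: UserAgent, account/impersonated account, action, resource name |
| Credential abuse | Credential abuse | Abusing a ServiceAccount via kubectl | Abusing a ServiceAccount via kubectl | Intrusion probing | critical | T1078, T1133 | The k8s API Server was accessed with the kubectl client tool as a ServiceAccount (SA). After stealing an SA token, an attacker can use kubectl with the stolen token to send requests to the API Server. | 1. First use UserAgent, account/impersonated account, action, resource, and similar fields to confirm whether this is expected business behavior. 2. If it is recurring expected behavior and the risk is judged controllable after investigation, consider whitelisting it (note: whitelist on a combination of fields to avoid missed detections). 3. If it is unexpected behavior, use the source IP field and the asset information associated with that IP to identify the initiator and investigate further. Fields to check: UserAgent, account/impersonated account, action, resource |
| External code execution | External code execution | Executing commands in pods via the API Server | Executing commands in pods via the API Server | Code execution | medium | T1609 | Arbitrary commands (an interactive bash or other commands) were executed in a container through pods/exec, the subresource behind kubectl exec. Attackers may create pods/exec subresources to run arbitrary commands in containers for lateral movement, credential theft, and so on. This policy records all pods/exec activity. | 1. First use UserAgent, account/impersonated account, executed command, and similar fields to confirm whether this is expected business behavior. 2. If it is recurring expected behavior and the risk is judged controllable after investigation, consider whitelisting it (note: whitelist on a combination of fields to avoid missed detections). 3. If it is unexpected behavior, use the source IP field and the asset information associated with that IP to identify the initiator and investigate further. Fields to check: UserAgent, account/impersonated account, executed command |
| Threat resources | Workload deployment | Privileged container | Workload created with a privileged container | Privilege escalation | critical | T1611, T1610 | Creation of a privileged container was detected. Attackers may create privileged containers to move laterally and take control of the host. Businesses may also create privileged containers when deploying services; if such a container is compromised, escaping from it is easy, so do not create privileged containers unless necessary. | 1. First use the business that owns the container and similar fields to confirm whether this is expected business behavior. 2. If it is recurring expected behavior and the risk is judged controllable after investigation, consider whitelisting it (note: whitelist on a combination of fields to avoid missed detections). 3. If it is unexpected behavior, use the source IP field and the asset information associated with that IP to identify the initiator and investigate further. Fields to check: business owning the container, UserAgent, account/impersonated account |
| Threat resources | Workload deployment | Mounting sensitive host files | Workload created that mounts sensitive host files | Privilege escalation | critical | T1611, T1610 | The created container mounts sensitive host directories or files, such as the root directory or /proc. Attackers may create containers that mount sensitive host directories or files to escalate privileges, take control of the host, and evade detection. When legitimate business workloads create such containers, they also introduce security risks into the container environment. The former requires further investigation; the latter requires working with the business on ongoing security compliance remediation. | 1. First use the business that owns the container and similar fields to confirm whether this is expected business behavior. 2. If it is recurring expected behavior and the risk is judged controllable after investigation, consider whitelisting it (note: whitelist on a combination of fields to avoid missed detections). 3. If it is unexpected behavior, use the source IP field and the asset information associated with that IP to identify the initiator and investigate further. Fields to check: business owning the container, UserAgent, account/impersonated account, image |
| Threat resources | RoleBinding/ClusterRoleBinding creation | Insecure ClusterRole creation | ClusterRoleBinding bound to a high-privilege ClusterRole created | Backdoor persistence | high | T1078 | The created ClusterRoleBinding binds a sensitive ClusterRole, that is, it grants a user, group, or service account the permissions of a sensitive ClusterRole. Attackers may create such bindings for persistence and stealth. Cluster administrators or operators may also create them because of insufficient security awareness. Under the principle of least privilege and k8s offensive and defensive practice, such ClusterRoleBindings introduce significant risk to the cluster and should be avoided. | 1. First use the client's UserAgent, account/impersonated account, and similar fields to judge whether this is business, R&D, or operations activity. 2. If operators are binding roles, set the alert to handled. 3. If it is recurring expected behavior and the risk is judged controllable after investigation, consider whitelisting it (note: whitelist on a combination of fields to avoid missed detections). 4. If it is unexpected behavior, use the source IP field and the asset information associated with that IP to identify the initiator and investigate further. Fields to check: UserAgent, account/impersonated account, subject name, role name |
| Exploitation behavior | N/A | Suspected CVE-2020-8554 | Suspected exploitation of CVE-2020-8554 by creating or updating a Service with externalIPs | Information gathering | high | T1557 | An exploitation signature of CVE-2020-8554 was detected: a Service was created or updated with externalIPs set. One way to exploit this vulnerability is to set a malicious spec.externalIPs when creating or updating a Service, enabling a man-in-the-middle attack. In practice, the ExternalIP attribute of a Service is rarely used, so when this happens operators should verify whether the ExternalIP is a legitimate IP address. | 1. First use UserAgent, account/impersonated account, and similar fields, together with the value of requestObject.spec.externalIPs in the raw log, to confirm whether this is expected business behavior. 2. If it is recurring expected behavior and the risk is judged controllable after investigation, consider whitelisting it (note: whitelist on a combination of fields to avoid missed detections). 3. If it is unexpected behavior, use the source IP field and the asset information associated with that IP to identify the initiator and investigate further. Fields to check: UserAgent, account/impersonated account, requestObject.spec.externalIPs |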
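As an illustration of the anonymous-access policy in the table above, the sketch below pulls audit events whose user name is `system:anonymous` (the name Kubernetes assigns to unauthenticated requests when anonymous auth is enabled) out of a JSON-lines audit log. It is a rough grep-based filter, not the HUB rule; a JSON-aware tool would be more robust, and `anonymous_audit_events` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Read Kubernetes audit events (one JSON object per line) on stdin and print
# those whose "username" is system:anonymous. Whitespace around ":" is tolerated.
anonymous_audit_events() {
  grep -E '"username"[[:space:]]*:[[:space:]]*"system:anonymous"'
}

# Example: anonymous_audit_events < /var/log/kubernetes/audit.log
```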

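Writing K8s policies

Data source

The K8s policies are based on K8s Audit Logs; the specific Audit Policy can be downloaded from the platform. After it is configured in the Console, data is written to the Kafka topic k8stopic through the webhook enabled on the Agent Center. You can also use HUB's built-in k8s INPUT directly as the data source.

Open source policy notes

Project

In the v1.9.1 Community Edition, we wrote a set of sample policies for open source, in the HUB projects kube_example and kube_workload. kube_example holds the basic policies; kube_workload holds the policies that need to process the data before detection.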

- kube_example

INPUT.k8s --> RULESET.kube_detect

RULESET.kube_detect --> RULESET.kube_alert_info

RULESET.kube_alert_info --> RULESET.kube_add_cluster_info

RULESET.kube_add_cluster_info --> RULESET.kube_push_alert

- kube_workload

INPUT.k8s --> RULESET.kube_workloads

RULESET.kube_workloads --> RULESET.kube_filter_workloads

RULESET.kube_filter_workloads --> RULESET.kube_handle_workloads

RULESET.kube_handle_workloads --> RULESET.kube_detect_workloads

RULESET.kube_detect_workloads --> RULESET.kube_alert_info

RULESET.kube_alert_info --> RULESET.kube_add_cluster_info

RULESET.kube_add_cluster_info --> RULESET.kube_push_alert

Ruleset

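The following notes cover the rulesets that call HUB's built-in plugins; the remaining rules can be viewed directly in the HUB front end.

The kube_alert_info ruleset adds alert data fields to detected alerts and calls the Kube_add_info plugin to add basic alert information. This plugin is a built-in HUB Modify plugin, so it can be called as needed.

The kube_add_cluster_info ruleset calls the Manager API to get cluster information by cluster ID, implemented by the KubeAddClusterInfo plugin, a built-in HUB Modify plugin.

The kube_push_alert ruleset calls the Manager API to push alerts, implemented by the KubePushMsgToLeader plugin, a built-in HUB Action plugin.

The following figure describes the alert content:

The workload-related detection policies are implemented by a Python plugin, which is called in kube_handle_workloads.

Writing recommendations

When writing other alert policies, call kube_alert_info, kube_add_cluster_info, and kube_push_alert to fill in alert information, add cluster information, and push the alert, respectively. If you add a new alert type, add it to kube_alert_info together with the related fields.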

Elkeid - Bytedance Cloud Workload Protection Platform

Elkeid is an open source solution that can meet the security requirements of various workloads such as hosts, containers and K8s, and serverless. It is derived from ByteDance's internal best practices.

As enterprises grow, multi-cloud, cloud-native, and mixed-workload environments have become increasingly common. We wanted a single solution that meets the security requirements of different workloads, and so Elkeid was born.

Introduction

Elkeid has the following key capabilities:

- Elkeid not only provides traditional HIDS (Host Intrusion Detection System) capabilities for host-level intrusion detection and malicious file identification, but also identifies malicious behavior inside containers well. Deployed on a host, it meets the anti-intrusion requirements of both the host and the containers running on it, and Elkeid's powerful kernel-level data collection satisfies most security analysts' need for host-level data.

- For running business applications, Elkeid provides RASP capability and can be injected into business processes for anti-intrusion protection. Operations staff do not need to install another Agent, and the business does not need to restart.

- For K8s itself, Elkeid supports collecting K8s Audit Logs to perform intrusion detection and risk identification on the K8s system.

- Elkeid's rule engine, Elkeid HUB, also integrates well with multiple external systems.

Elkeid integrates these capabilities into one platform to meet the complex security requirements of different workloads, while also linking capabilities across components. Even more notably, each component has been tested against ByteDance's massive data and years of real-world operation.

Elkeid Community Edition Description

Note that there are differences between the Elkeid open source version and the full version. The current open source capabilities mainly include:

- All endpoint capabilities, namely endpoint data and asset collection, kernel-mode data collection, the RASP probe, and so on, which are consistent with ByteDance's internal version;

- All backend capabilities, namely Agent Center, service discovery, and so on, which are consistent with ByteDance's internal version;

- A community edition rule engine, Elkeid HUB, provided as an example with a small number of policies;

- A community edition of Elkeid Console and some supporting capabilities.

Therefore, to achieve complete anti-intrusion and risk perception capabilities, you also need to build policies on Elkeid HUB and perform secondary processing of the data collected by Elkeid.

Elkeid Architecture

Elkeid Host Ability

- Elkeid Agent: Linux userspace agent, responsible for managing plugins and communicating with the Elkeid Server.

- Elkeid Driver: collects data in the Linux kernel, supports container runtimes, and communicates with the Elkeid Driver Plugin.

- Elkeid RASP: runtime probes for CPython, Golang, JVM, NodeJS, and PHP, with support for dynamic injection into the runtime.

- Elkeid Agent Plugin List

- Driver Plugin: Responsible for managing Elkeid Driver and processing the driver data.

- Collector Plugin: Responsible for the collection of assets/log information on the Linux System, such as user list, crontab, package information, etc.

- Journal Watcher: Responsible for monitoring systemd logs, currently supports ssh related log collection and reporting.

- Scanner Plugin: Responsible for static detection of malicious files on the host, currently supports yara.

- RASP Plugin: Responsible for managing RASP components and processing data collected from RASP.

- Baseline Plugin: Responsible for detecting baseline risks based on baseline check policies.

- Elkeid Data Format

- Elkeid Data Usage Tutorial

Elkeid Backend Ability

- Elkeid AgentCenter: communicates with the Agent, collects Agent data, performs light processing, and writes it to the MQ. It is also responsible for Agent management, including Agent upgrades, configuration changes, and task distribution.

- Elkeid ServiceDiscovery: every backend component registers and synchronizes its service information with this component regularly, so that the instances of each service module are visible to each other and can communicate directly.

- Elkeid Manager: manages the entire backend and provides the related query and management APIs.

- Elkeid Console: the Elkeid front end.

- Elkeid HUB: the Elkeid HIDS rule engine.

Elkeid Function List

| Capability | Elkeid Community Edition | Elkeid Enterprise Edition |
|---|---|---|
| Linux runtime data collection | :white_check_mark: | :white_check_mark: |
| RASP probe | :white_check_mark: | :white_check_mark: |
| K8s Audit Log collection | :white_check_mark: | :white_check_mark: |
| Agent control plane | :white_check_mark: | :white_check_mark: |
| Host status and details | :white_check_mark: | :white_check_mark: |
| Ransomware bait | :ng_man: | :white_check_mark: |
| Asset collection | :white_check_mark: | :white_check_mark: |
| Asset collection enhancements | :ng_man: | :white_check_mark: |
| K8s asset collection | :white_check_mark: | :white_check_mark: |
| Exposure and vulnerability analysis | :ng_man: | :white_check_mark: |
| Host/container basic intrusion detection | few samples | :white_check_mark: |
| Host/container behavioral sequence intrusion detection | :ng_man: | :white_check_mark: |
| RASP basic intrusion detection | few samples | :white_check_mark: |
| RASP behavioral sequence intrusion detection | :ng_man: | :white_check_mark: |
| K8s basic intrusion detection | few samples | :white_check_mark: |
| K8s behavioral sequence intrusion detection | :ng_man: | :white_check_mark: |
| K8s threat analysis | :ng_man: | :white_check_mark: |
| Alarm traceability (behavior traceability) | :ng_man: | :white_check_mark: |
| Alarm traceability (resident traceability) | :ng_man: | :white_check_mark: |
| Alert whitelist | :white_check_mark: | :white_check_mark: |
| Multi-alarm aggregation | :ng_man: | :white_check_mark: |
| Threat response (process) | :ng_man: | :white_check_mark: |
| Threat response (network) | :ng_man: | :white_check_mark: |
| Threat response (file) | :ng_man: | :white_check_mark: |
| File isolation | :ng_man: | :white_check_mark: |
| Vulnerability discovery | limited vulnerability info | :white_check_mark: |
| Vulnerability information hot update | :ng_man: | :white_check_mark: |
| Baseline check | few baseline rules | :white_check_mark: |
| Application vulnerability hotfix | :ng_man: | :white_check_mark: |
| Virus scan | :white_check_mark: | :white_check_mark: |
| User behavior log analysis | :ng_man: | :white_check_mark: |
| Agent plugin management | :white_check_mark: | :white_check_mark: |
| System monitoring | :white_check_mark: | :white_check_mark: |
| System management | :white_check_mark: | :white_check_mark: |
| Windows support | :ng_man: | :white_check_mark: |
| Honeypot | :ng_man: | :oncoming_automobile: |
| Active defense | :ng_man: | :oncoming_automobile: |
| Cloud virus analysis | :ng_man: | :oncoming_automobile: |
| File integrity monitoring | :ng_man: | :oncoming_automobile: |

Front-end Display (Community Edition)

Security overview

K8s security alert list

K8s pod list

Host overview

Resource fingerprint

Intrusion alert overview

Vulnerability

Baseline check

Virus scan

Backend hosts monitoring

Backend service monitoring

Console User Guide

Quick Start

Contact Us and Cooperation

Lark Group

About Elkeid Enterprise Edition

Elkeid Enterprise Edition is sold either as separate intrusion detection rule sets (such as HIDS, RASP, or K8s) or as the full-capability package.

If you are interested in Elkeid Enterprise Edition, please contact elkeid@bytedance.com.

Elkeid Docs

For more details and latest updates, see Elkeid docs.

License

- Elkeid Driver: GPLv2

- Elkeid RASP: Apache-2.0

- Elkeid Agent: Apache-2.0

- Elkeid Server: Apache-2.0

- Elkeid Console: Elkeid License

- Elkeid HUB: Elkeid License

404StarLink 2.0 - Galaxy

Elkeid has joined 404Team's 404StarLink 2.0 - Galaxy.

About Elkeid Agent

The Agent provides basic capabilities for the components on the host, including data communication, resource monitoring, component version control, file transfer, and collection of basic host information.

The Agent itself does not provide security capabilities; it runs as a system service and serves as the base that hosts the plugins. The policies for the various functional plugins are stored in the server-side configuration. When the Agent receives the corresponding control instructions and configuration, it starts, stops, and upgrades itself and the plugins accordingly.
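The start/stop/upgrade behavior described above can be pictured as a reconciliation between the plugin versions in the server-side policy and the plugins currently running. This is a minimal Go sketch under stated assumptions, not the Agent's actual code: the Action type, the reconcile function, and the plugin names and versions in main are all hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// Action is one step needed to move a plugin toward the server-side policy.
// Hypothetical type, for illustration only.
type Action struct {
	Kind    string // "start", "stop" or "upgrade"
	Plugin  string
	Version string
}

// reconcile compares desired plugin versions (from the server-side
// configuration) with the running plugins and returns the required actions,
// sorted by plugin name so the output is deterministic.
func reconcile(desired, running map[string]string) []Action {
	var actions []Action
	for name, want := range desired {
		have, ok := running[name]
		switch {
		case !ok:
			actions = append(actions, Action{"start", name, want})
		case have != want:
			actions = append(actions, Action{"upgrade", name, want})
		}
	}
	for name, have := range running {
		if _, ok := desired[name]; !ok {
			// Not in the policy any more: stop the currently running version.
			actions = append(actions, Action{"stop", name, have})
		}
	}
	sort.Slice(actions, func(i, j int) bool { return actions[i].Plugin < actions[j].Plugin })
	return actions
}

func main() {
	desired := map[string]string{"driver": "1.0.1", "collector": "1.0.0"}
	running := map[string]string{"driver": "1.0.0", "scanner": "1.0.0"}
	for _, a := range reconcile(desired, running) {
		fmt.Printf("%s %s %s\n", a.Kind, a.Plugin, a.Version)
	}
}
```

Comparing versions for plain inequality keeps the sketch simple: any mismatch with the policy triggers an upgrade, which also covers a policy that pins an older version.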

The Agent and Server communicate over bidirectional-streaming gRPC, with mutual TLS authentication based on self-signed certificates to secure the transport. Information flowing from Agent to Server is called the data flow, and information flowing from Server to Agent is generally the control flow; each uses a different protobuf message type. The Agent supports client-side service discovery as well as cross-region communication configuration, so a single Agent package can be installed in multiple network-isolated environments. Two services, Transfer and FileOp, are implemented on top of a single underlying TCP connection; they support data reporting from the plugins and interaction with files on the host.
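As an illustration of the mutual TLS setup, the following Go sketch builds a client-side tls.Config from the self-signed CA certificate and the client key pair, using only the standard crypto/tls and crypto/x509 packages. It assumes the three files sit in transport/connection, as in the configuration steps of this README; the function name newMutualTLSConfig and the server name "agentcenter.example" are hypothetical. In a gRPC client, such a config could be wrapped with credentials.NewTLS from google.golang.org/grpc/credentials.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"fmt"
	"os"
	"path/filepath"
)

// newMutualTLSConfig returns a client TLS config that verifies the server
// against the given CA and presents a client certificate (mutual TLS).
// caPEM, certPEM and keyPEM are the contents of ca.crt, client.crt, client.key.
func newMutualTLSConfig(caPEM, certPEM, keyPEM []byte, serverName string) (*tls.Config, error) {
	pool := x509.NewCertPool()
	if !pool.AppendCertsFromPEM(caPEM) {
		return nil, errors.New("no valid CA certificate found in ca.crt")
	}
	cert, err := tls.X509KeyPair(certPEM, keyPEM)
	if err != nil {
		return nil, fmt.Errorf("loading client key pair: %w", err)
	}
	return &tls.Config{
		RootCAs:      pool,                    // trust only the self-signed CA
		Certificates: []tls.Certificate{cert}, // client certificate for the server to verify
		ServerName:   serverName,              // must match a name in the server certificate
		MinVersion:   tls.VersionTLS12,
	}, nil
}

func main() {
	dir := "transport/connection"
	files := map[string][]byte{}
	for _, name := range []string{"ca.crt", "client.crt", "client.key"} {
		b, err := os.ReadFile(filepath.Join(dir, name))
		if err != nil {
			fmt.Println("cannot read certificate material:", err)
			return
		}
		files[name] = b
	}
	// "agentcenter.example" is a placeholder server name.
	cfg, err := newMutualTLSConfig(files["ca.crt"], files["client.crt"], files["client.key"], "agentcenter.example")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("mTLS config ready, client certificates:", len(cfg.Certificates))
}
```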

Plugins, which provide the security capabilities, generally run as child processes of the Agent. They communicate with the Agent across processes through two pipes, and the plugins lib provides plugin libraries for Go and Rust that encode and send plugin-side messages. Notably, data sent by a plugin is encoded as Protobuf binary data. The Agent does not need to decode it again after receiving it; it adds the Header feature data in an outer layer and transmits the result directly to the Server. The Server generally does not decode it either and passes it straight to the downstream data stream, where it is decoded only when it is consumed. This reduces, to some extent, the performance overhead of repeated encoding and decoding during data transmission.

The Agent is implemented in Go. On Linux, systemd acts as its supervisor, and resource usage is controlled through cgroup limits. It supports the aarch64 and x86-64 architectures and is compiled and packaged as deb and rpm packages for distribution. The packages follow the systemd, Debian, and RHEL specifications, so they can be published directly to the corresponding software repositories for subsequent version maintenance. An Agent for the Windows platform will be released in a future version.

Runtime Requirements

Most of the functions provided by the Agent and plugins must run at the host level with root privileges. In privilege-restricted containers, some functions may not work properly.

Quick Start

After a complete deployment with elkeidup, you can obtain the installation packages for Debian/RHEL-family distributions directly and deploy them using the commands on the Elkeid Console - Installation Configuration page.

Compile from source

Dependency Requirements

Confirm related configuration

- Make sure that the three files ca.crt, client.key, and client.crt in the transport/connection directory are identical to the files with the same names in the conf directory of the Agent Center.

- Make sure the parameters in the transport/connection/product.go file are properly configured:

  - If the Server was deployed manually:

    - serviceDiscoveryHost["default"] must be set to the intranet listening address and port of the ServiceDiscovery service or its proxy, for example: serviceDiscoveryHost["default"] = "192.168.0.1:8088"

    - privateHost["default"] must be set to the intranet listening address and port of the AgentCenter service or its proxy, for example: privateHost["default"] = "192.168.0.1:6751"

    - If the Server has a public network access point, publicHost["default"] must be set to the external listening address and port of the AgentCenter service or its proxy, for example: publicHost["default"] = "203.0.113.1:6751"

  - If the Server was deployed through elkeidup, the corresponding values can be found in the ~/.elkeidup/elkeidup_config.yaml file on the Server host where it was deployed:

    - Find the IP of the Nginx service in the configuration file; the configuration item is nginx.sshhost[0].host

    - Find the IP of the ServiceDiscovery service in the configuration file; the configuration item is sd.sshhost[0].host

    - serviceDiscoveryHost["default"] must be set to the IP of the ServiceDiscovery service with port 8088, for example: serviceDiscoveryHost["default"] = "192.168.0.1:8088"

    - privateHost["default"] must be set to the IP of the Nginx service with port 8090, for example: privateHost["default"] = "192.168.0.1:8090"
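An address with a stray space (for example "192.168.0.1 :8088") is not a valid host:port pair, so it can help to validate the values before compiling. The Go sketch below assumes the three settings are map[string]string values; the real declarations in product.go may differ, and validateHostPort and fromElkeidup are hypothetical helpers. fromElkeidup only applies the port rules listed above (8088 for ServiceDiscovery, 8090 for Nginx).

```go
package main

import (
	"fmt"
	"net"
	"strconv"
	"strings"
)

// Hypothetical stand-ins for the values assigned in transport/connection/product.go.
var (
	serviceDiscoveryHost = map[string]string{"default": "192.168.0.1:8088"}
	privateHost          = map[string]string{"default": "192.168.0.1:6751"}
	publicHost           = map[string]string{"default": "203.0.113.1:6751"}
)

// validateHostPort checks that addr is "host:port" with no stray spaces and a
// port in the range 1..65535.
func validateHostPort(addr string) error {
	host, portStr, err := net.SplitHostPort(addr)
	if err != nil {
		return err
	}
	if host == "" || strings.TrimSpace(host) != host {
		return fmt.Errorf("invalid host in %q", addr)
	}
	port, err := strconv.Atoi(portStr) // rejects " 8088"
	if err != nil || port < 1 || port > 65535 {
		return fmt.Errorf("invalid port in %q", addr)
	}
	return nil
}

// fromElkeidup builds the two required entries for an elkeidup deployment:
// the ServiceDiscovery IP with port 8088 and the Nginx IP with port 8090.
func fromElkeidup(sdIP, nginxIP string) (sd, private string) {
	return net.JoinHostPort(sdIP, "8088"), net.JoinHostPort(nginxIP, "8090")
}

func main() {
	all := map[string]map[string]string{
		"serviceDiscoveryHost": serviceDiscoveryHost,
		"privateHost":          privateHost,
		"publicHost":           publicHost,
	}
	for name, m := range all {
		for key, addr := range m {
			if err := validateHostPort(addr); err != nil {
				fmt.Printf("%s[%q]: %v\n", name, key, err)
			}
		}
	}
	sd, private := fromElkeidup("192.168.0.1", "192.168.0.1")
	fmt.Println(sd, private)
}
```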

Compile

Change to the root directory of the Agent source code and execute:

BUILD_VERSION=1.7.0.24 bash build.sh

During compilation, the script reads the BUILD_VERSION environment variable to set the version information; modify it as needed.

After a successful build, the output directory under the root directory should contain 2 deb and 2 rpm files, corresponding to different systems and architectures.

Version Upgrade

- If no client component has been created yet, create a new component on the Elkeid Console - Component Management page.

- On the Elkeid Console - Component Management page, find the "elkeid-agent" entry, click "Release Version" on the right, fill in the version information, upload the files corresponding to each platform and architecture, and click OK.

- On the Elkeid Console - Component Policy page, delete the old "elkeid-agent" version policy (if any), click "New Policy", select the version just released, and click OK. Agents installed afterwards will upgrade themselves to the latest version.

- On the Elkeid Console - Task Management page, click "New Task", select all hosts, click Next, select the "Sync Configuration" task type, and click OK. Then find the task you just created on this page and click Run to upgrade existing Agents that are running older versions.

License

Elkeid Agent is distributed under the Apache-2.0 license.

System Architecture

Overview

Elkeid Server contains 5 modules:

- AgentCenter (AC) communicates with the Agent, collects Agent data, performs light processing, and writes it to the Kafka cluster. It also manages the Agent, including Agent upgrades, configuration changes, and task distribution. In addition, AC provides HTTP services through which the Manager manages AC and the Agents.

- ServiceDiscovery (SD): each service module must register with SD regularly and synchronize its service information, so that the instances in each service module are visible to each other and can communicate directly. Because SD maintains the status of every registered service, it performs load balancing when a service consumer requests service discovery. For example, when the Agent requests a list of AC instances, SD returns the AC instance with the lowest load.

- Manager manages the entire backend and provides the related query and management interfaces. This includes managing the AC cluster, monitoring AC status, managing all Agents through AC, collecting the running status of the Agents, and delivering tasks to them. The Manager also manages the real-time and offline computing clusters.

- Elkeid Console: the Elkeid web console.

- Elkeid HUB: the Elkeid HIDS rule engine.

In short, AgentCenter collects Agent data, the real-time/offline computing modules analyze and process the collected data, Manager manages AgentCenter and the computing modules, and ServiceDiscovery connects all services and nodes.

Features

- Backend infrastructure that scales to millions of Agents

- Distributed, decentralized, highly available cluster

- Simple deployment, few dependencies and easy maintenance

Deployment document

Build

- AgentCenter (AC): executing ./build.sh in the Elkeid/server/agent_center directory generates the product bin.tar.gz in the output directory.

- ServiceDiscovery (SD): executing ./build.sh in the Elkeid/server/service_discovery directory generates the product bin.tar.gz in the output directory.

- Manager: executing ./build.sh in the Elkeid/server/manager directory generates the product bin.tar.gz in the output directory.

Upgrade

Refer to the backend section of Build Elkeid CWPP from Source to deploy or upgrade.

Console User Guide

License

Elkeid Server is distributed under the Apache-2.0 license.

About Elkeid(AgentSmith-HIDS) Driver

Elkeid Driver is a one-of-a-kind kernel-space HIDS agent designed for cybersecurity.

Elkeid Driver hooks kernel functions via Kprobe, providing rich and accurate data collection capabilities, including kernel-level process execve probing, privilege escalation monitoring, network auditing, and much more. By supporting Linux namespaces, the Driver treats container-based monitoring as a first-class citizen, on par with host-based data collection. Compared to user-space agents on the market, Elkeid provides more comprehensive information with a significant performance improvement.

Elkeid has already been deployed at massive scale for HIDS in world-class production environments. With its powerful data collection capabilities, Elkeid also supports sandbox, honeypot, and auditing data requirements.

Notice

DO NOT insmod the ko on production machines unless you have tested it thoroughly.

Quick Test

First, you need to install the Linux kernel headers.

# clone and build
git clone https://github.com/bytedance/Elkeid.git
cd Elkeid/driver/LKM/
make clean && make
# CentOS only: run the build script instead of make
sh ./centos_build_ko.sh

# load and test (must run as root)
insmod hids_driver.ko
dmesg | tail -n 20
test/rst -q    # press CTRL + C to quit

# unload
rmmod hids_driver
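After insmod, one way to confirm the module is loaded (the same information lsmod reports) is to look for its name in the first column of /proc/modules. A small Go sketch; moduleLoaded is a hypothetical helper, and it matches the exact module name hids_driver used by rmmod above.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strings"
)

// moduleLoaded scans text in /proc/modules format (one module per line, name
// in the first column) and reports whether the exact name is present.
func moduleLoaded(procModules, name string) bool {
	sc := bufio.NewScanner(strings.NewReader(procModules))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		if len(fields) > 0 && fields[0] == name {
			return true
		}
	}
	return false
}

func main() {
	data, err := os.ReadFile("/proc/modules")
	if err != nil {
		fmt.Println("cannot read /proc/modules:", err)
		return
	}
	fmt.Println("hids_driver loaded:", moduleLoaded(string(data), "hids_driver"))
}
```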

Pre-built Ko

How To Get

If all URLs fail, please build the Elkeid kernel module yourself.